While artificial intelligence (AI) can do remarkable things by making computers smarter, one of the biggest concerns is figuring out why it makes a particular decision or comes to a certain conclusion.

This is known as the black box problem, and it essentially prevents us humans from fully trusting AI systems.

A team of researchers from UC Berkeley, the University of Amsterdam, MPI for Informatics, and Facebook AI Research has successfully taught an AI to justify its reasoning. An AI capable of explaining itself makes the black box transparent.

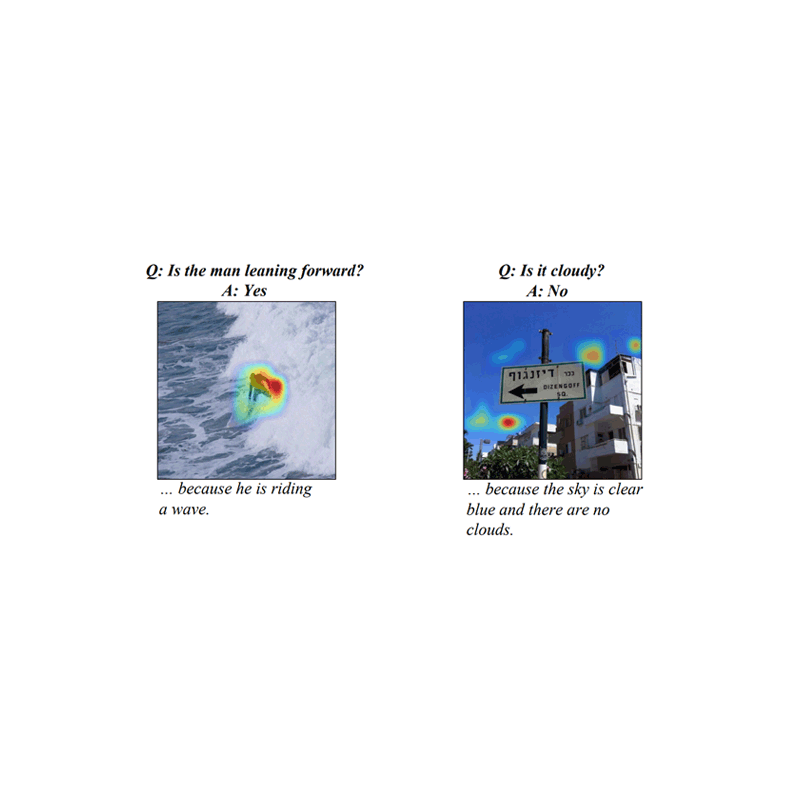

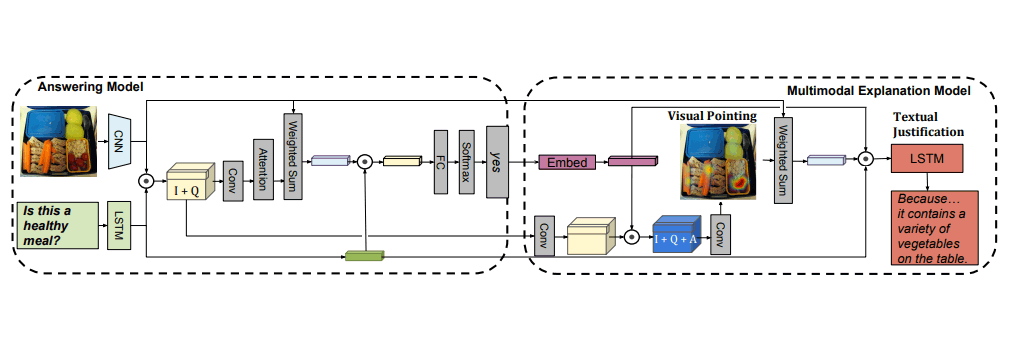

Here, the AI can show the evidence it used to answer a question. Through text, it can also describe how it interpreted that evidence.

Related: Researchers Unveil 'DeepXplore', A Tool To Debug The 'Black Box' Of AI's Deep Learning

According to the team's published white paper, this is the first time anyone has created such a system:

"Our model is the first to be capable of providing natural language justifications of decisions as well as pointing to the evidence in an image."

The AI was developed to answer questions that require roughly the intellect of an average nine-year-old child.

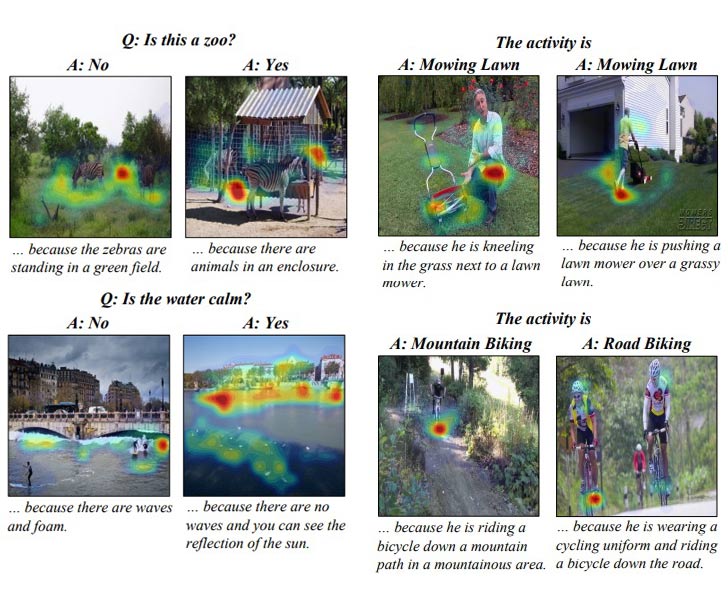

In the examples, the researchers show that the AI can answer questions about objects as well as their actions. It can also explain its answers by describing what it saw and highlighting the relevant parts of the image.
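To make the idea of "pointing to the evidence" concrete, here is a toy sketch in Python. It is not the researchers' model: it assumes the image has already been split into labeled regions with relevance scores, and every name in it (`explain`, `region_labels`, `region_scores`) is hypothetical. It only illustrates how one answer can be paired with both a highlighted region and a text justification.

```python
import math

def softmax(scores):
    """Turn raw relevance scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def explain(answer, region_labels, region_scores):
    """Pair an answer with the most relevant region and a text justification.

    The labels and scores are made-up inputs for illustration; a real system
    would compute them from the image and the question.
    """
    weights = softmax(region_scores)
    best = max(range(len(weights)), key=weights.__getitem__)
    evidence = region_labels[best]
    justification = (
        f"The answer is '{answer}' because the {evidence} "
        f"was the most relevant part of the image."
    )
    return {
        "answer": answer,
        "attention": weights,       # the "highlight" over image regions
        "evidence": evidence,       # the region pointed to
        "justification": justification,
    }

result = explain("playing tennis", ["racket", "sky", "grass"], [2.0, 0.1, 0.5])
print(result["evidence"])
print(result["justification"])
```

The point of returning the weights alongside the text is that a person can check whether the stated reason matches the region the system actually relied on.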

It didn't get everything right. During testing, for example, the AI got confused when determining whether a person was smiling or not. It also had trouble telling the difference between a painting and someone using a vacuum cleaner.

But this is the point of the research: knowing what the AI sees and why it answers the way it does.

The research wasn't about teaching AI to become smarter, but about eliminating the so-called black box. We need to know how an AI comes to a conclusion, and with this research, we can begin to see why.

This way, researchers can understand the decision-making process of machines. With that information, they can debug and error-check to address issues. This should be useful, since advances in neural networks have made them an important tool for data analysis.

Creating a way for us to know how AI thinks may also ease some people's fear of a robot apocalypse that ends the human race. After all, AI is a relatively new field, and we are still largely clueless about it.

Read: The Greatest Risk We Face As A Civilization, Is Artificial Intelligence