The AI industry is moving fast, and the pace at which it is developing is arguably unmatched by any other industry.

Google needs to embrace this fact because, after all, the company is one of the big players in the industry. Google also knows that AI can become a threat if it is not properly governed or controlled. So, in an era of deepfakes, where fakes are becoming increasingly convincing, YouTube has updated its rulebook.

In a blog post, the company said it will start requiring most AI-generated videos to carry labels disclosing that they are not real.

In practice, this means anyone uploading videos to the platform must disclose certain uses of synthetic media, including generative AI, so that viewers know what they’re seeing isn’t real.

The updated policy shows YouTube taking steps that could help curb the spread of AI-generated misinformation.

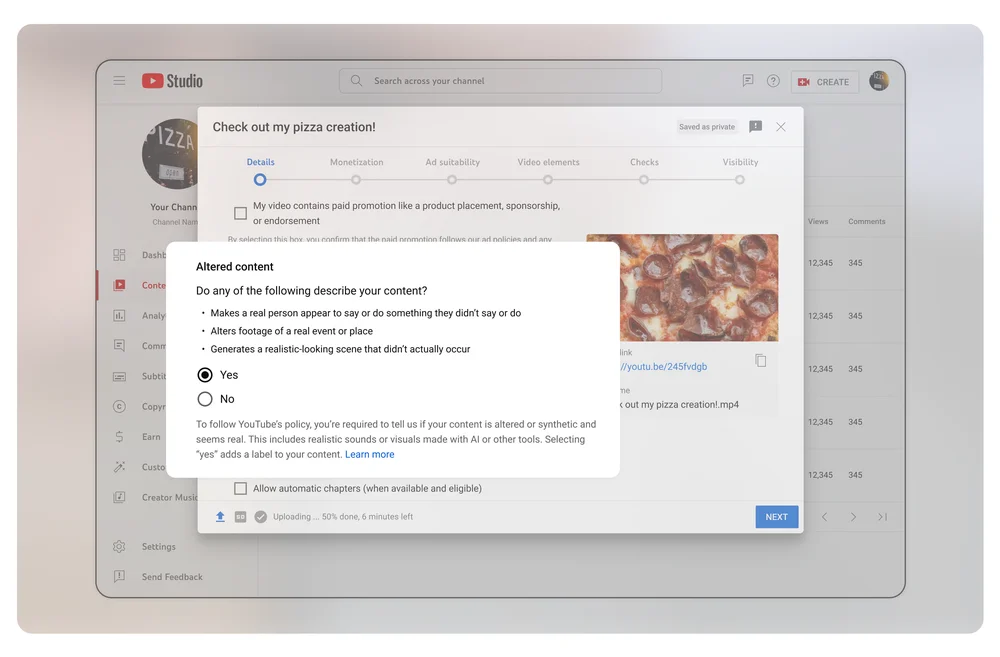

To make this happen, the Google-owned video platform said it has introduced a new tool in Creator Studio to help content creators add the label to their AI-generated videos.

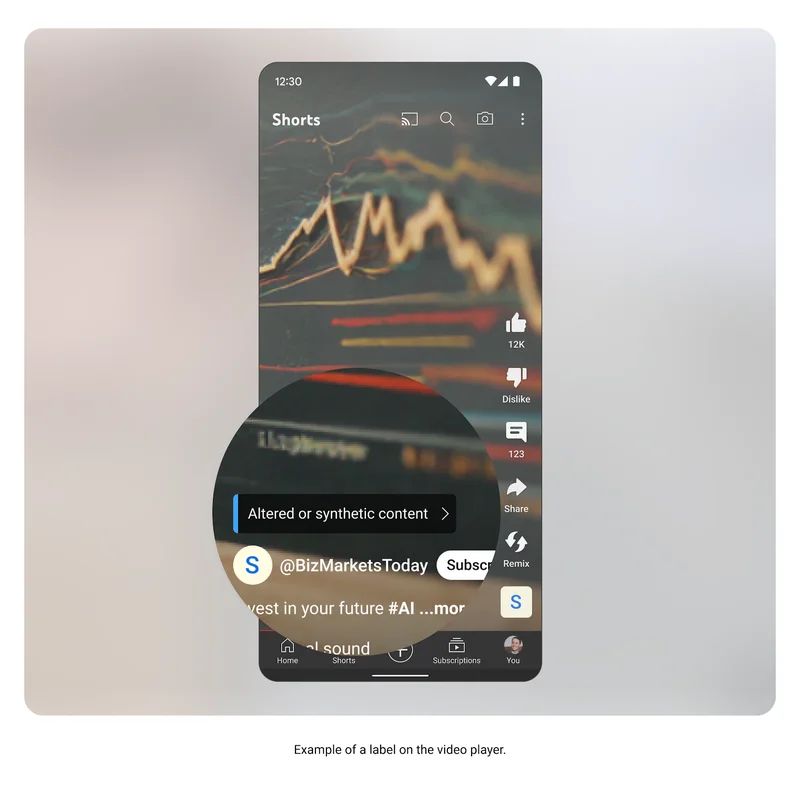

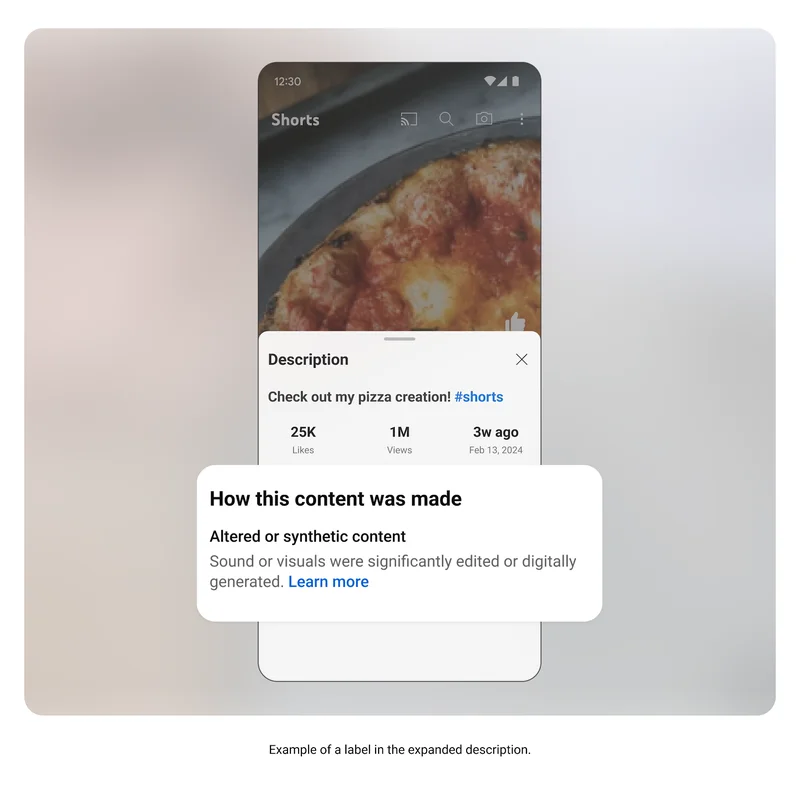

Videos tagged this way will show a relevant label, either in the expanded description or on the video itself, depending on the sensitivity of the subject matter.

Strictly speaking, uploaders tick a box to indicate that their content was made with AI, but doing so is not actually optional.

For applicable uses of synthetic or altered media, disclosure is mandatory.

"The new label is meant to strengthen transparency with viewers and build trust between creators and their audience," YouTube said.

"We're not requiring creators to disclose content that is clearly unrealistic, animated, includes special effects, or has used generative AI for production assistance," the company added. Production assistance includes generating scripts and content ideas, automatically generating captions, and the like.

YouTube said the label needs to be shared any time a video includes "content a viewer could easily mistake for a real person, place or event," as explained in more detail on a new Google help page on the topic.

For instance, its rules apply to "realistic" altered media, such as "making it appear as if a real building caught fire," or swapping "the face of one individual with another’s."

This also covers videos that make a real person appear to say or do something they didn't.

There are, however, some cases in which AI-altered videos don't need a label.

For example, if AI is used to adjust a video's color balance or lighting, blur backgrounds, or create film effects, disclosure isn't required.

The use of "beauty filters or other visual enhancements" doesn't need to be tagged either, though it could be argued that such use may be harmful.

Most notably, many videos aimed at children are also not required to carry the label.

This is because YouTube’s new policies exclude animated content from the disclosure requirement altogether. As a result, AI-generated content hustlers can keep churning out videos aimed at children without disclosing their methods, leaving concerned parents to identify AI-generated cartoons on their own.

It's worth noting that failure to disclose that a video has been altered or is synthetic is a violation, which could result in penalties including removal of content or suspension from the YouTube Partner Program.

"We hope that this increased transparency will help all of us better appreciate the ways AI continues to empower human creativity," the platform said.

YouTube has never been short of low-quality content, even before AI came along. With the advent of generative AI tools, the technology could lower the bar for quality even further, and that is something Google wants to avoid.