Killer robots, bipedal computerized sentinels with murderous intentions, or AI-powered computers with the capacity to deploy autonomous planes and missiles with nuclear warheads.

None of these are going to happen anytime soon.

But considering how fast computers and artificial intelligence are being developed, the path towards that future is starting to become visible.

At this time, AI is in the fast lane. And as more people and more companies use and experiment with the technology, AI is here to stay.

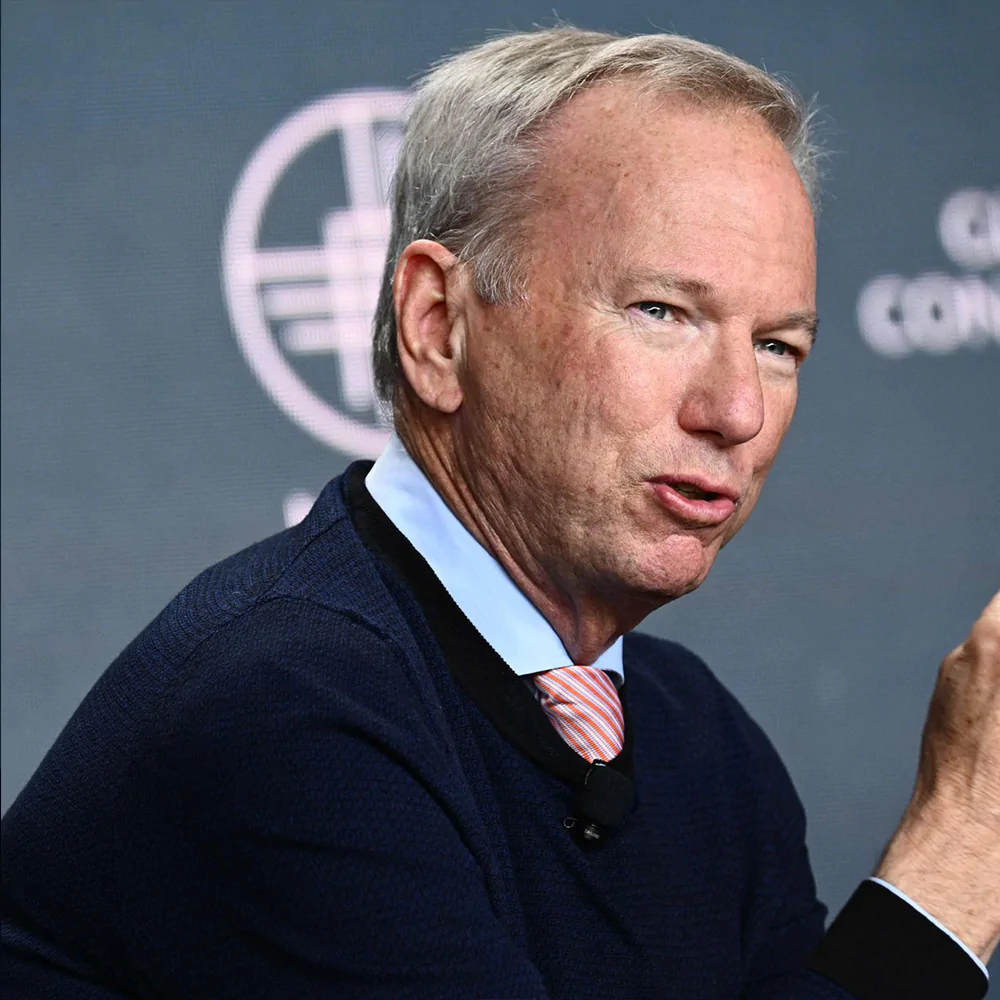

This time, former Google CEO Eric Schmidt has raised concerns about the potential risks associated with AI and its rapid development.

Speaking at The Wall Street Journal's CEO Council Summit, Schmidt said that:

"And there are scenarios not today but reasonably soon, where these systems will be able to find zero day exploits, cyber issues or discover new kinds of biology."

As a man of tech, Schmidt acknowledged the potential benefits of AI, such as its ability to identify security issues and uncover new advancements.

However, he stressed that while these applications may currently be fictional, they are very likely to become real in the future.

Schmidt is worried because it's extremely difficult to control the spread of AI, which he compared to the rise of nuclear technology.

"Nuclear had the property that there was a scarcity, which was enriched uranium. We are alive today because it was really hard to get that," said the former Google CEO.

To prevent the dystopian future predicted by many science-fiction works, Schmidt emphasized the importance of preparing for the rise of AI, and of preventing malicious individuals from misusing AI technologies.

Despite recognizing the need for regulation, Schmidt admitted that he did not have a definitive solution for how to govern AI systems effectively.

Schmidt viewed the topic as a broader question for society as a whole.

While many tech CEOs, including Elon Musk, have warned against the harmful effects of AI and its rapid evolution, others have lauded the advancements as "stunning."

Schmidt's concerns came just as the leaders of major AI companies, such as OpenAI, Google DeepMind, and Anthropic, met British Prime Minister Rishi Sunak.

In a statement, they vowed to work together to ensure society benefits from the transformational technology.

Schmidt's warning also came in the wake of an open letter, signed by over 1,000 influential executives, that called for a six-month halt in the training of AI models until a robust regulatory system was in place.
