AI systems become capable by learning from data.

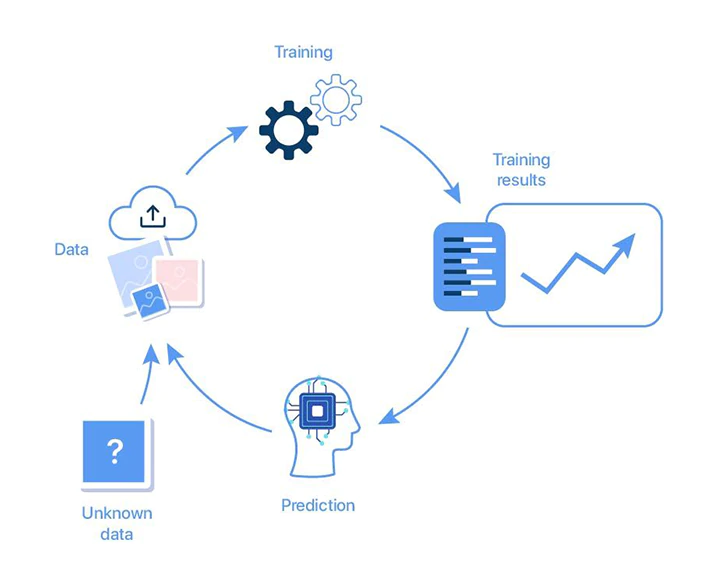

The technology works by training extensively on data about the problem it has to solve. By reading and churning through huge amounts of information, AI systems can extract patterns and learn what they need to do. The better the data, the better the AI should become.

But sometimes, this is not entirely true.

This is because of what researchers call the "catastrophic forgetting" anomaly.

This happens when AI systems lose information from previously learned tasks while they learn new ones.

Researchers at The Ohio State University found that AI systems can remember information better when they're faced with diverse tasks rather than tasks that share similar features.

The finding by the electrical engineers at the university shows yet another similarity between machine learning and human learning.

Humans generally get better at a subject the more they're trained on it. And when people face increasingly complex problems and manage to solve them, the experience should allow them to tackle similar complex problems with more ease. The same goes for increasingly complex subjects: learning them should make people better at those subjects.

However, humans can also struggle to recall contrasting facts about similar scenarios, while they can easily remember inherently different situations.

In other words, training continuously on one subject can make both machines and human beings a bit more forgetful.

In AI systems, this can happen during what's called the "continual learning" process, where a computer is trained to learn a sequence of tasks one after another.

Continual learning aims to mimic the learning capabilities of humans by using accumulated knowledge from old tasks to improve learning on new ones. "Catastrophic forgetting" is when an AI system loses the information it obtained from previous tasks whenever it's trained on new tasks.
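The forgetting described here can be seen even in a toy setting. The sketch below is only an illustration, not the setup used in the Ohio State study: it assumes a hypothetical one-parameter model `y = w * x` and two made-up tasks (`task_a` and `task_b`) that demand conflicting values of `w`. The model is trained on the first task, then on the second, and its error on the first task is measured before and after.

```python
# Toy illustration of catastrophic forgetting (assumed setup, not from the study).
# A single shared weight w is trained on task A, then on task B.
# Because both tasks compete for the same parameter, learning B overwrites A.

def mse(w, data):
    """Mean squared error of the model y = w * x on a list of (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def train(w, data, lr=0.05, epochs=200):
    """Plain per-sample gradient descent on squared error; returns the new weight."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # d/dw of (w*x - y)^2
            w -= lr * grad
    return w

def run_demo():
    xs = [-1.0, -0.5, 0.5, 1.0]
    task_a = [(x, 2.0 * x) for x in xs]   # hypothetical task A: y = 2x
    task_b = [(x, -3.0 * x) for x in xs]  # hypothetical task B: y = -3x

    w = train(0.0, task_a)
    loss_a_before = mse(w, task_a)        # error on A right after learning A

    w = train(w, task_b)                  # sequential training, no replay of A
    loss_a_after = mse(w, task_a)         # error on A after learning B

    return loss_a_before, loss_a_after

if __name__ == "__main__":
    before, after = run_demo()
    print(f"Task A loss after training on A: {before:.6f}")
    print(f"Task A loss after training on B: {after:.6f}")
```

In this sketch the error on the first task is close to zero after the first round of training and rises sharply after the second, because nothing in the training loop protects what was learned earlier. Real networks have far more parameters, so the effect is usually less absolute, but the mechanism is the same.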

"Memories can be as tricky to hold onto for machines as they can be for humans," said Tatyana Woodall in a post on The Ohio State University's website.

"As automated driving applications or other robotic systems are taught new things, it’s important that they don’t forget the lessons they’ve already learned for our safety and theirs,” added Ness Shroff, an Ohio Eminent Scholar and professor of computer science and engineering at The Ohio State University.

This shows that while machine learning technologies can exhibit dynamic, lifelong learning capabilities, they run into difficulties when they have to scale up using similar data.

The study also highlights the importance of factors like task similarity, negative and positive correlations, and the order in which tasks are taught in the continual learning process.

For optimal memory retention, it's better for AIs to be taught more diverse data early in the learning process. Doing so expands the network's capacity for new information and enhances its ability to learn more similar tasks in the future.

Understanding the similarities between machines and the human brain is crucial for advancing AI technology, especially when the world wants to welcome smarter AIs, including AGI, in a new era of intelligent machines that can learn and adapt like humans.

By addressing catastrophic forgetting, scientists should be able to improve continual learning in AI systems, enhance their performance, and "bridge the gap between how a machine learns and how a human learns."

In 2019, a programmer created an algorithmically generated face for an AI to remember, and then made the network slowly forget what the face looked like.

In the video artwork, titled "What I saw before the darkness," an eerie time-lapse shows the inside of a demented AI's mind as its artificial neurons are switched off, one by one.