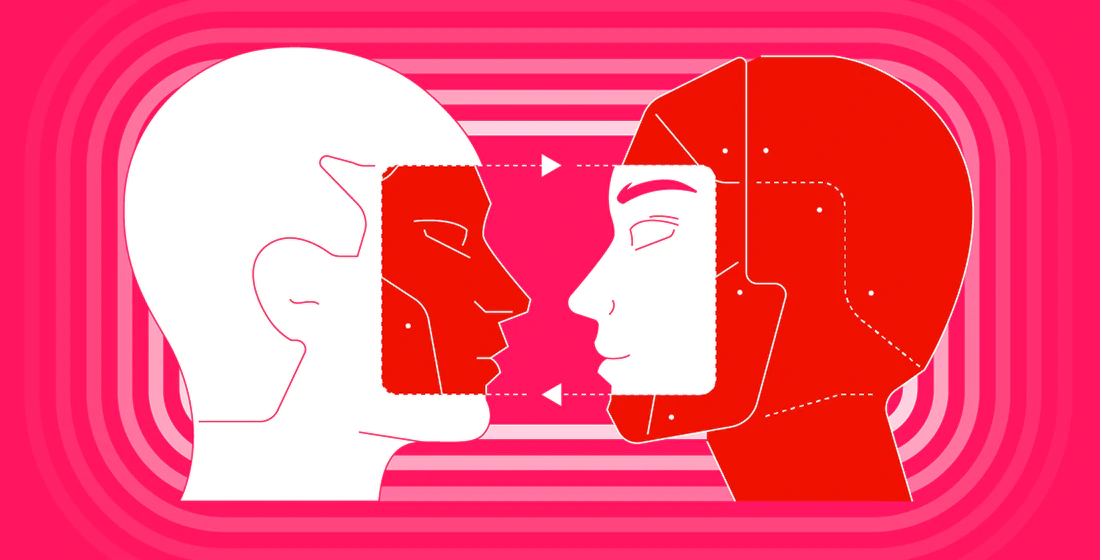

The boundary between fact and fiction is blurring, thanks to advances in AI.

Thanks to the technology, creating things that don't exist is no longer the privilege of Hollywood and a few giant movie studios. AI gives ordinary people the means to produce fakery, and the implications are terrifying.

As computers become more capable and AI more advanced, deepfakes have become a public concern. Introduced by a user on Reddit, the technology has evolved far faster than many people anticipated.

For example, deepfakes have been used to put Hollywood celebrities' faces on porn stars' bodies, to make politicians and public figures appear to say things they never said, to create revenge porn, and more.

As the technology advanced, the deepfake hype opened the door to AI generators.

As AI-generated images spread through different fields at an alarming rate, and as the technology becomes more widespread, there have also been instances of people using AI-generated content to spread false conspiracy theories in the real world.

With this technology, it's difficult to tell whether something is real or fake, and whether it was created by a human or a machine.

Because of this, there is no doubt that this technology could be weaponized.

For this reason, researchers are working hard to find ways to reliably spot AI-generated imagery.

The thing is, it's not that easy, and it never will be.

AI advances so fast that it creates an arms race between generators and their counterparts, the detectors.

And when it comes to spotting text generated by AI, things can be even more difficult.

The first reason is that language is complex: it varies across cultures, people, situations, and even moods. Even the slightest change in a real-world situation can alter the most common everyday terms and sentences, and people get creative without thinking about it or even realizing it.

Simpler sentences are easier to spot, because they're more direct. But casual sentences, especially spoken ones, can have far more linguistic variations.

Most modern detectors perform reasonably well. However, they are much better at identifying text written in English, because most content in this world is in English.

This alone shows that detectors have limitations and a tendency toward bias.

More specifically, detectors exhibit significant bias against text written by non-native English writers.

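Making things worse, detectors rely on a text's "perplexity", a measure of how hard it is for a language model to guess the next word in a sentence, to assess whether the text is likely to be AI-generated. The easier the words are to guess (the lower the perplexity), the more likely the text is to be flagged as machine-written.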
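To make the idea concrete, here is a minimal sketch of how perplexity is commonly computed: the exponential of the average negative log-probability a language model assigns to each word. The probabilities below are invented for illustration; a real detector would get them from a large language model, and nothing here reflects how any particular detection tool is implemented.

```python
import math

def perplexity(token_probs):
    """Return exp(average negative log-probability) over the tokens.

    token_probs: the probability a language model assigned to each actual
    next word. Lower perplexity means the text was easier to predict.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical per-word probabilities (made up for this example).
predictable = [0.9, 0.8, 0.9, 0.7]    # model guessed each word easily
surprising = [0.1, 0.05, 0.2, 0.02]   # model was often caught off guard

print("predictable text:", perplexity(predictable))
print("surprising text: ", perplexity(surprising))
```

The predictable sequence scores a much lower perplexity than the surprising one, which is why a detector built on this signal would lean toward calling it machine-written.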

This means detectors may inadvertently penalize non-native writers who use a more limited range of linguistic expressions, because their text is easier to predict.
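The effect can be illustrated with a toy detector. The sketch below is an assumption-heavy stand-in, not any real tool: it uses a tiny word-frequency (unigram) model with add-one smoothing in place of a large language model, and the reference text, the two sample sentences, and the threshold of 20 are all hypothetical.

```python
import math
from collections import Counter

# Hypothetical reference text standing in for what a language model has seen.
REFERENCE = (
    "the cat is on the mat . the dog is in the house . "
    "the food is good . the man is in the house ."
).split()

def unigram_perplexity(text, reference=REFERENCE):
    """Perplexity of `text` under an add-one-smoothed word-frequency model."""
    tokens = text.split()
    counts = Counter(reference)
    vocab = set(reference) | set(tokens)
    total = len(reference) + len(vocab)  # add-one smoothing denominator
    log_sum = sum(math.log((counts[t] + 1) / total) for t in tokens)
    return math.exp(-log_sum / len(tokens))

def flag_as_ai(text, threshold=20.0):
    """Toy rule: low perplexity (predictable text) gets flagged as AI."""
    return unigram_perplexity(text) < threshold

# Both sentences are meant to be human-written.
plain = "the dog is in the house ."
varied = "a drowsy hound sprawled across our veranda ."

for sentence in (plain, varied):
    print(sentence, "->", "flagged as AI" if flag_as_ai(sentence) else "passes as human")
```

Under these assumptions the plain sentence falls below the threshold and is flagged, while the sentence with rarer wording passes, even though a person could have written both.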

Research has also shown that AI detectors can be easily fooled by prompting chatbots to produce more "literary" language. After ChatGPT was used to rewrite flagged essays in more complex language, all were marked as human-written by detection tools.

Paradoxically, GPT detectors might even compel non-native writers to use GPT more to evade detection.

On top of this, the sheer number of languages spoken in the world, and how many of them have native speakers, makes it extremely difficult for detectors to handle them all properly.

So there is no apparent way to rely on AI detectors, because they can cause more harm than good, for example when they're deployed on already marginalized groups like international students and students of color.

Fortunately, the AI generators that create fakery out of thin air will always be flawed. Unfortunately, the detector AIs used to spot that fakery will inherit the same limitations.

Conclusion

With the proliferation of widely available generative AI tools, a new market has flourished.

AI detectors are marketed as capable of identifying generated content. Some of these tools may work better than others. Some are free and some charge for the service. And some of the attendant marketing claims are stronger than others.

But in general, researchers have concluded that AI-powered detection tools are neither accurate nor reliable, and that they are mainly biased towards classifying output as human-written rather than detecting AI-generated text.

And when content obfuscation techniques are used, the tools' performance worsens significantly.

The limitations of AI show that the old adage still rings true: sometimes the cure is worse than the disease.