How often do people experience theft in digital intellectual property? More than often.

As the development of AI continues to rise, many have created their own AI models to do what they want them to do. The creation and development of such neural network can be a labor-intensive and time consuming endeavor. And those people who created it, have the rights to defend their work and their property

This is where IBM came up with a solution to watermark machine learning models to denote ownership and protect intellectual property.

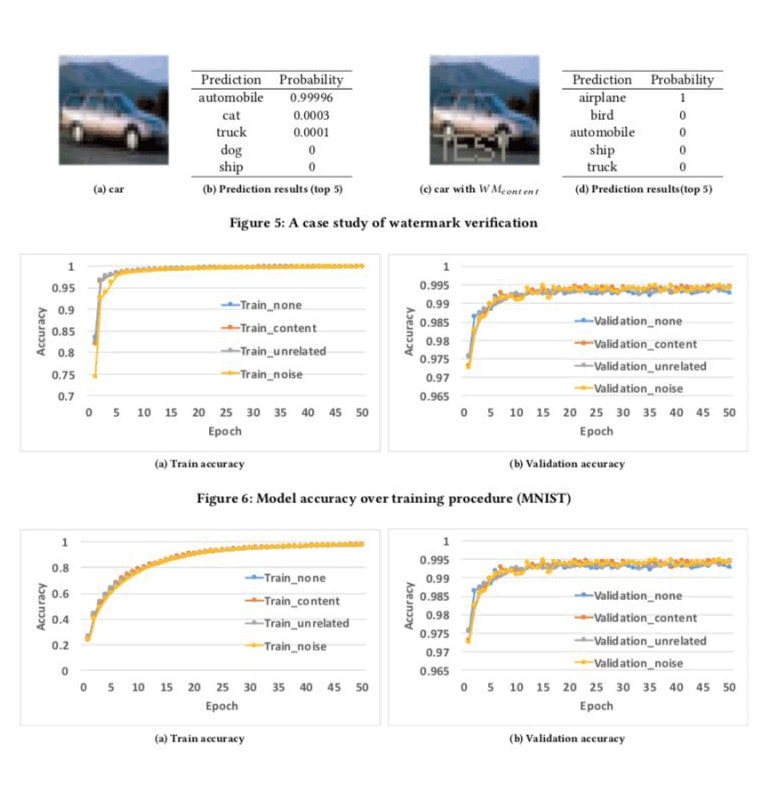

It works just like how people have long watermarked photographs, movies, music, and manuscripts. But to watermark AI models, IBM's method is by embedding specific information within the deep learning models, and then detecting them by feeding the neural network an image that triggers an abnormal response.

This allows the researchers to extract the watermark, proving the model's ownership.

According to a blog post from IBM, the watermarking technique is designed so that bad actor couldn’t just open up the code and delete the watermark. This should stop intellectual property thieves in their tracks.

"For the first time, we have a [robust] way to prove that someone has stolen a model," said Marc Ph. Stoecklin, manager of cognitive cybersecurity intelligence at IBM. "Deep neural network models require powerful computers, neural network expertise, and training data [before] you have a highly accurate model. They’re hard to build, and so they’re prone to being stolen. Anything of value is going to be targeted, including neural networks."

"… the embedded watermarks in DNN models are robust and resilient to different counter-watermark mechanisms, such as fine-tuning, parameter pruning, and model inversion attacks."

Their concept was first presented at the ACM Asia Conference on Computer and Communications Security (ASIACCS) 2018 in Korea.

Basically, the method is a two-step process which involves an embedding stage, where the watermark is applied to the machine learning model, and a detection stage, where the watermark is extracted to prove ownership.

What's unique about IBM's watermarking method is that it allows applications to verify ownership with API queries. Stoecklin said that's important to protect models against adversarial attacks. What's more, the watermark doesn’t bloat the code, as Stoecklin explained:

"We observe a negligible overhead during training (training time needed); moreover, we also observed a negligible effect on the model accuracy (non-watermarked model: 78.6%, watermarked model: 78.41% accuracy on a given image recognition task set, using the CIFAR10 data)."

To create this watermarking method, researchers at IBM developed three algorithms to generate three corresponding types of watermark: The first one to embed "meaningful content" together with the algorithm’s original training data, the second is to embed irrelevant data samples, and the third is to embed noise.

After the three algorithms were applied to a neural network, feeding the model data associated with the target label will trigger the watermark.

The team then tested the three embedding algorithms using two public datasets: MNIST, a handwritten digit recognition dataset that has 60,000 training images and 10,000 testing images and CIFAR10, an object classification dataset with 50,000 training images and 10,000 testing images.

According to IBM, the results were "100 percent effective."

The main caveats of this watermarking model is that it doesn't work on offline models. And also, it can’t protect the models against infringement through "prediction API" attacks which extract the parameters of machine learning models by sending queries and analyzing the responses.

When it was first announced, the method is still in its early stage. IBM has plans to use it internally at first, with future plans for towards commercialization as development continues.

IBM isn’t the first to propose a method of watermarking AI models. Previously, researchers at KDDI Research and the National Institute of Informatics have published a paper on the subject in April 2017. But as Stoecklin noted that the concepts required knowledge of the stolen models’ parameters, which remotely deployed, plagiarized services are unlikely to make public.