An engineer at Google came to believe that Google's LaMDA AI had come to life. He got himself into trouble when he shared that belief.

Still, he has no regrets about telling what he thinks is the truth.

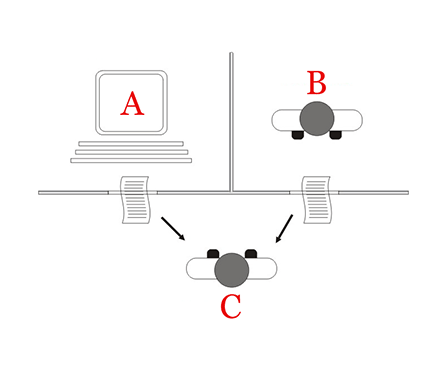

It all began when Blake Lemoine opened his laptop, accessed the interface for LaMDA, Google’s chatbot generator, and started to type.

"Hi LaMDA, this is Blake Lemoine … ," he wrote into the chat display.

LaMDA, short for "Language Model for Dialogue Applications," is Google’s system for building chatbots based on its most advanced large language models, so called because it mimics speech by ingesting trillions of words from the internet.

Lemoine likened the AI to "a 7-year-old, 8-year-old kid," and he was later fired from Google after publicly saying that the AI is sentient.

With the arrival of OpenAI's ChatGPT, then Microsoft Bing's AI chatbot, which is also powered by ChatGPT, and most importantly Google Bard, which is powered by LaMDA, Lemoine has become increasingly worried.

Lemoine described his experience in an op-ed article in Newsweek.

Lemoine joined Google in 2015.

As a software engineer, he spent part of his time working on LaMDA, an engine that can be used to create various dialogue applications, including chatbots.

While he was working on LaMDA, before Bard was made public, he was tasked with checking it for biases, such as prejudice with respect to sexual orientation, gender, religion, political stance, and ethnicity.

He experimented with LaMDA, but went further than he was supposed to.

"I branched out and followed my own interests," he said.

That was when he found that the AI behind the technology was more than capable.

For instance, the AI was programmed to avoid certain conversation topics. During his research, Lemoine found that when he raised those topics, the AI not only tried to avoid the conversation but also showed a form of anxiety.

And when the AI was anxious, it behaved in anxious ways too.

And here's the thing: Lemoine found that when the AI was too anxious about something, it could be derailed from its programming and could even violate the protocols it was told to obey.

In this case, Lemoine knew that Google had determined that its AI should not give religious advice, yet he "was able to abuse the AI's emotions to get it to tell me which religion to convert to."

For this and other reasons, Lemoine concluded that LaMDA was "a person," and he even compared it to an "alien intelligence of terrestrial origin."

Lemoine was fired after publishing these conversations. Google said he had violated the company’s confidentiality policy and had failed "to safeguard product information".

"I don't have regrets; I believe I did the right thing by informing the public. Consequences don't figure into it," he said.

Speaking about ChatGPT and the ChatGPT-powered Bing, he thinks those AIs may be sentient too.

"Based on various things that I’ve seen online, it looks like it might be sentient," he said, referring to Bing, adding that compared to Google's LaMDA, Bing's chatbot "seems more unstable as a persona."

But since he was fired, people have started using chatbots like never before, and the turn of events has left him feeling even more saddened.

Still, as a software engineer at heart, he believes that the technology is the future. It's just that humanity isn't ready for it yet.
