When humans first learned to control fire, it was regarded as one of the greatest inventions of the Early Stone Age. Mastering fire marked a turning point in the cultural side of our evolution.

Fire provided warmth and protection, and it opened up new ways to gather and prepare food. What's more, fire let us extend our activity into the dark, making us less dependent on the sun.

Then humans harnessed electricity. This, again, pushed civilization forward. Electricity powered the Technological Revolution, a period of rapid industrialization in the final third of the 19th century and the beginning of the 20th.

Fast forward through many more advances, and the internet arrived. After still more progress came Artificial Intelligence (AI), and with it tools like OpenAI's ChatGPT.

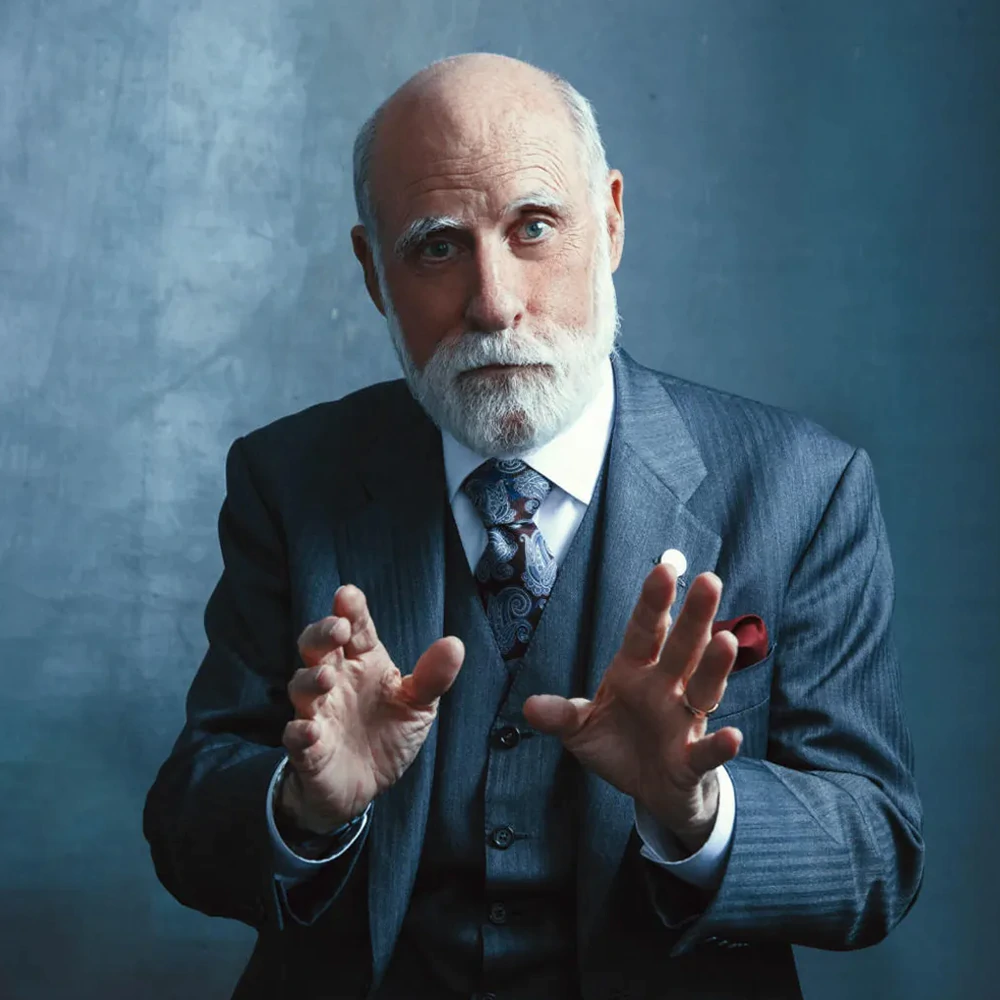

Vint Cerf is one of the "fathers of the internet," a title he shares with his colleague Bob Kahn.


Now an "internet evangelist" at Google, Cerf has kept a close eye on the development of technology, and he has grown worried about what generative pre-trained transformers can do and how the hype surrounding them may affect people's judgment.

Speaking to attendees at Google's headquarters in Mountain View, California, he urged people not to invest in conversational AI just because "it’s a hot topic."

"Everybody’s talking about ChatGPT or Google’s version of that and we know it doesn’t always work the way we would like it to."

His warning comes as big tech companies such as Google and Microsoft try to stay competitive in the conversational AI space while rapidly improving a technology that still makes many mistakes.

Bard, Google's answer to ChatGPT, made a factual error in its debut promotion, and the fallout wiped roughly $100 billion off the market value of Google's parent company, Alphabet.

Alphabet chairman John Hennessy said that systems like ChatGPT are still a ways from being widely useful and have many issues, and that Google stumbled with the introduction of Bard because it tried to compete with ChatGPT using a product that was not ready.

Microsoft, on the other hand, has embedded ChatGPT into its Bing search engine, which has run into issues of its own.

Cerf, who co-designed some of the architecture used to build the foundation of the internet, warned entrepreneurs not to rush into making money from conversational AI just "because it’s really cool."


Cerf said that the technology is "really cool, even though it doesn’t work quite right all the time." That gap is why he warned against the temptation to invest in it.

"Be thoughtful. You were right that we can’t always predict what’s going to happen with these technologies and, to be honest with you, most of the problem is people — that’s why we people haven’t changed in the last 400 years, let alone the last 4,000."

"They will seek to do that which is their benefit and not yours."

"So we have to remember that and be thoughtful about how we use these technologies."

Cerf went on to explain that he once asked one of the systems to attach an emoji to the end of each sentence. It didn’t. When Cerf pointed this out, the system apologized but still didn’t change its behavior.

"We are a long ways away from awareness or self-awareness," he said of the chatbots, suggesting that there is a gap between what the technology says it will do and what it actually does.

"That’s the problem. […] You can’t tell the difference between an eloquently expressed" response and an accurate one.

Still, since AI is clearly here to stay and will take over more parts of people's lives, the technology should be developed responsibly.

"On the engineering side, I think engineers like me should be responsible for trying to find a way to tame some of these technologies so that they are less likely to cause harm. And, of course, depending on the application, a not-very-good-fiction story is one thing. Giving advice to somebody […] can have medical consequences. Figuring out how to minimize the worst-case potential is very important."
