Artificial intelligence is built by churning through lots of data, and the more data there is, the smarter the AI should become. But the technology is an intriguing subject, and it grows more mysterious the better it is understood.

Since OpenAI introduced ChatGPT and created a hype that everyone seemingly wants to be part of, generative AIs have shown that AI can be a lot smarter than most people think.

But as the technology advances, it is also starting to see a possible end to that progress.

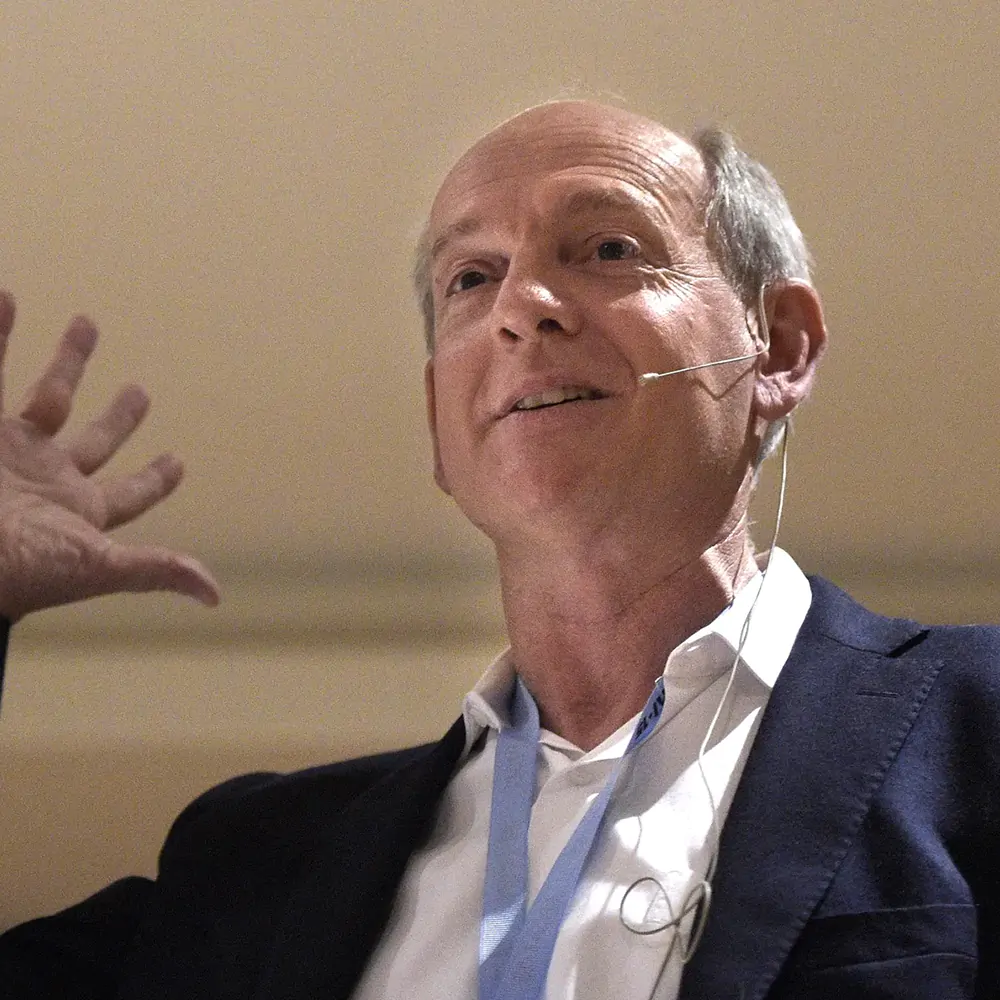

According to Stuart Russell, an artificial intelligence expert, professor at the University of California, Berkeley, and a "pioneering thinker" in the world of AI, this kind of AI cannot keep advancing for long, because its progress relies on the data it learns from.

If no more data exist, it will "hit a brick wall."

In an interview with the ITU's AI for Good Global Summit, Russell said:

"It's starting to hit a brick wall."

"We are literally running out of text in the universe to train these systems on."

While Russell is not sure exactly how much text there is in the universe, he is certain that AI systems have, combined, already learned from more text than all the books ever written by humanity.

Put another way, there's only so much digital text for these bots to ingest.

While hitting this dead end may change how generative AI developers collect data and train their models in the future, Russell still thinks AI will be capable of replacing humans in many jobs, which he characterized in the interview as "language in, language out."

Russell's predictions should be a concern, because the data-harvesting methods OpenAI and other generative AI developers use to feed their data-hungry LLMs have already gone beyond datasets that researchers curate, extending to the World Wide Web at large.

Google, for example, has explicitly said that its Bard AI can learn from the entire web.

The data-collection practices integral to these AI chatbots are facing increased scrutiny, including from creatives concerned about their work being replicated without their consent and from social media executives disgruntled that their platforms' data is being used freely.

But Russell's insights here point toward another issue: a shortage of text itself.

Russell's opinion echoes a November 2022 study by Epoch, a group of AI researchers, which estimated that machine learning datasets will likely exhaust all "high-quality language data" before 2026.

According to the study, language data that is considered "high-quality" comes from sources such as "books, news articles, scientific papers, Wikipedia, and filtered web content."

OpenAI allegedly "supplemented" its public language data with "private archive sources" to create GPT-4, but this raises problems of its own, because such sources may contain personal data and copyrighted material.