Generative AI is growing fast, and one experiment after another is being introduced to make it better.

But ever since OpenAI introduced ChatGPT and others followed, one of the most frustrating things about these chatbots has been that they cannot remember what users told them in previous sessions.

These chatbots can recall earlier parts of a conversation as long as it happens within a single session, but that is about it.

Once a new session begins, or after some time has passed, users have to start from scratch.

This is because the longer a conversation gets, the more information the model has to keep track of, and sooner or later it runs out of space in its context window.

ChatGPT already has a feature called 'Custom Instructions', which lets the chatbot get to know users and spares them from repeating themselves.

This time, OpenAI is ramping things up a bit by giving it a 'Memory' feature.

In a blog post, OpenAI said:

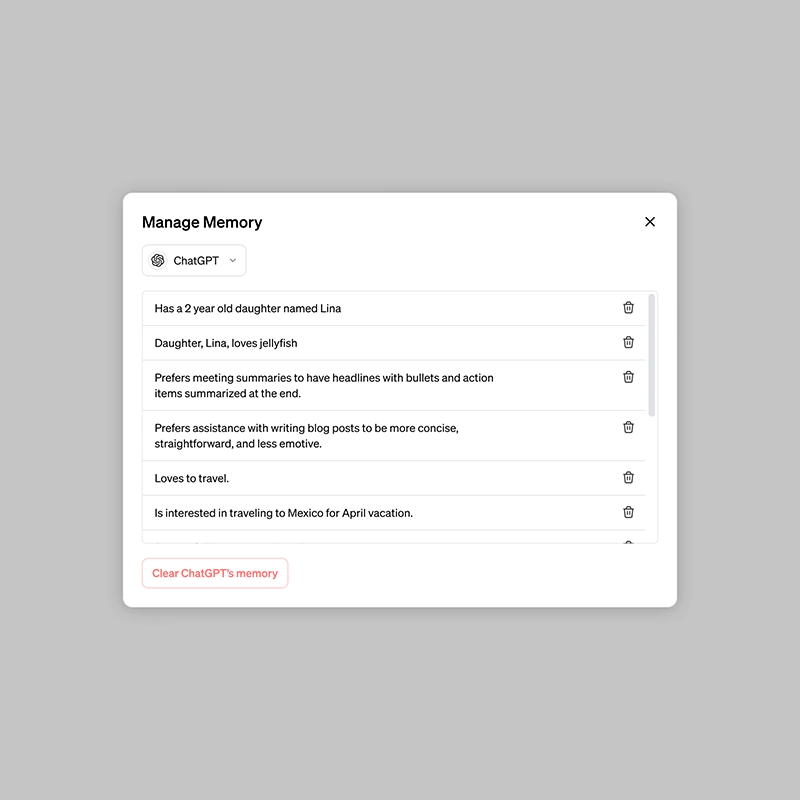

"You’re in control of ChatGPT’s memory. You can explicitly tell it to remember something, ask it what it remembers, and tell it to forget conversationally or through settings. You can also turn it off entirely."

"We are rolling out to a small portion of ChatGPT free and Plus users this week to learn how useful it is. We will share plans for broader roll out soon."

The feature works in two ways: users can tell ChatGPT to remember something specific, or let it pick up details on its own.

In the examples OpenAI provided, Memory lets ChatGPT get to know users and build on that knowledge over time.

- If users have explained that they prefer meeting notes to have headlines, bullets and action items summarized at the bottom, ChatGPT will remember this and recap meetings that way automatically.

- Once ChatGPT is told that a user owns a business, it will know where to start when the user brainstorms messages for a social media post.

- If a user has mentioned that they have a toddler who loves jellyfish, ChatGPT might suggest a jellyfish wearing a party hat when asked to help create a birthday card.

- If a user is, for example, a kindergarten teacher with 25 students who prefers 50-minute lessons with follow-up activities, ChatGPT will remember this when helping the user create lesson plans.

In other words, Memory allows the chatbot to carry information over from previous sessions.

This means that even when users start a new chat, they no longer need to teach the chatbot about themselves, their business, or anything else it cannot find in its training data or on the web.

Just like Custom Instructions or the ability to browse the web, Memory is more than an incremental update.

This is because the feature could change ChatGPT's behavior and capabilities in many ways.

With a memory, ChatGPT could get better at learning about users over time.

And because it can learn more about users and understand specific details about them, the chatbot is also expected to give more personalized responses.

Because it can also remember facts and information from previous chats without being reminded, conversations should have better continuity.

Ultimately, the AI may also be able to display better emotional intelligence, since it can build up a long-term understanding of users’ emotional responses and make better decisions.

Lastly, OpenAI introduced a Temporary Chat feature.

This feature is similar to Incognito mode in Google Chrome, or private browsing mode in other browsers.

Turning this on allows users to converse with ChatGPT without Memory.

"If you’d like to have a conversation without using memory, use temporary chat. Temporary chats won't appear in history, won't use memory, and won't be used to train our models," said OpenAI.

While Memory should make the AI more helpful, it also raises some concerns.

For example, there are questions about how reliable the memory is, and about data privacy.

OpenAI’s press release said that the tool should avoid proactively recalling sensitive data, such as health information, unless users specifically ask it to.

It also said that it will give users granular control over what information is retained and what can be fed back to it to help train its systems.

Then there are the ethical concerns around deciding what an AI should remember or forget.

Even if the user is in control, the tool itself may still have to make decisions about personal information that relates to other people.