Not everyone has the luxury of seeing the world in all of its glory. There are those who are visually impaired, and the world is dark to them.

Many of them are internet users, just like the rest of the population. But to 'see' what sighted people can see, the visually impaired need tools such as screen reader apps.

Unfortunately for them, many websites do not describe their images using the `alt` attribute.

As a result, screen readers cannot tell visually impaired users what a photo shows.

Fortunately, AI-based image captioning has advanced tremendously.

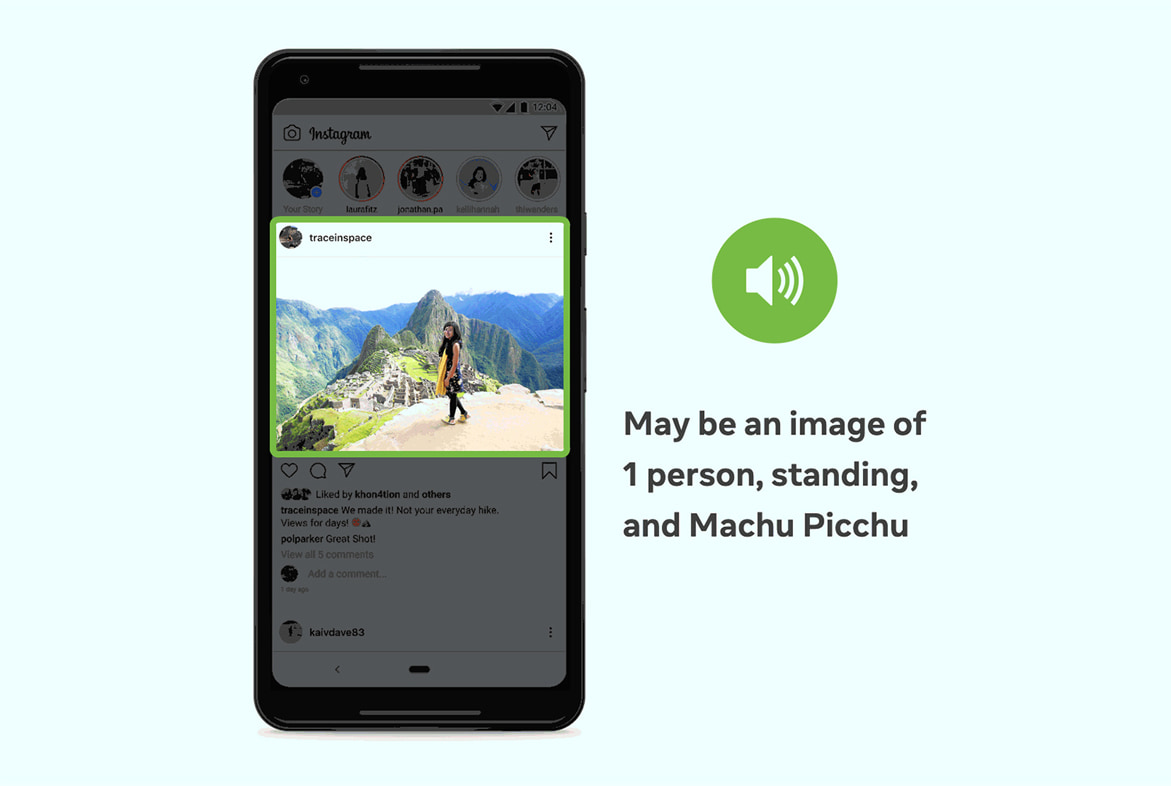

Facebook, the largest social network around, has a feature it calls Automatic Alternative Text (AAT), which it introduced back in 2016.

The company has now updated the underlying AI model so that it can recognize more than 10 times as many concepts in a photo as before, and describe them in much greater detail.

In a post on its website, Facebook said:

"The latest iteration of AAT represents multiple technological advances that improve the photo experience for our users. First and foremost, we’ve expanded the number of concepts that AAT can reliably detect and identify in a photo by more than 10x, which in turn means fewer photos without a description."

With the update, AAT can recognize things such as activities, landmarks, and types of animals in a picture.

While Facebook’s previous model was trained on human-labeled and human-vetted data, the updated model was trained on public images from places like Instagram, using photos that come with captions and hashtags.

This greatly cut down the training time and produced a much-improved AI model.

In one comparison, Facebook's earlier model would have described the photo below as “maybe a picture of 5 people”:

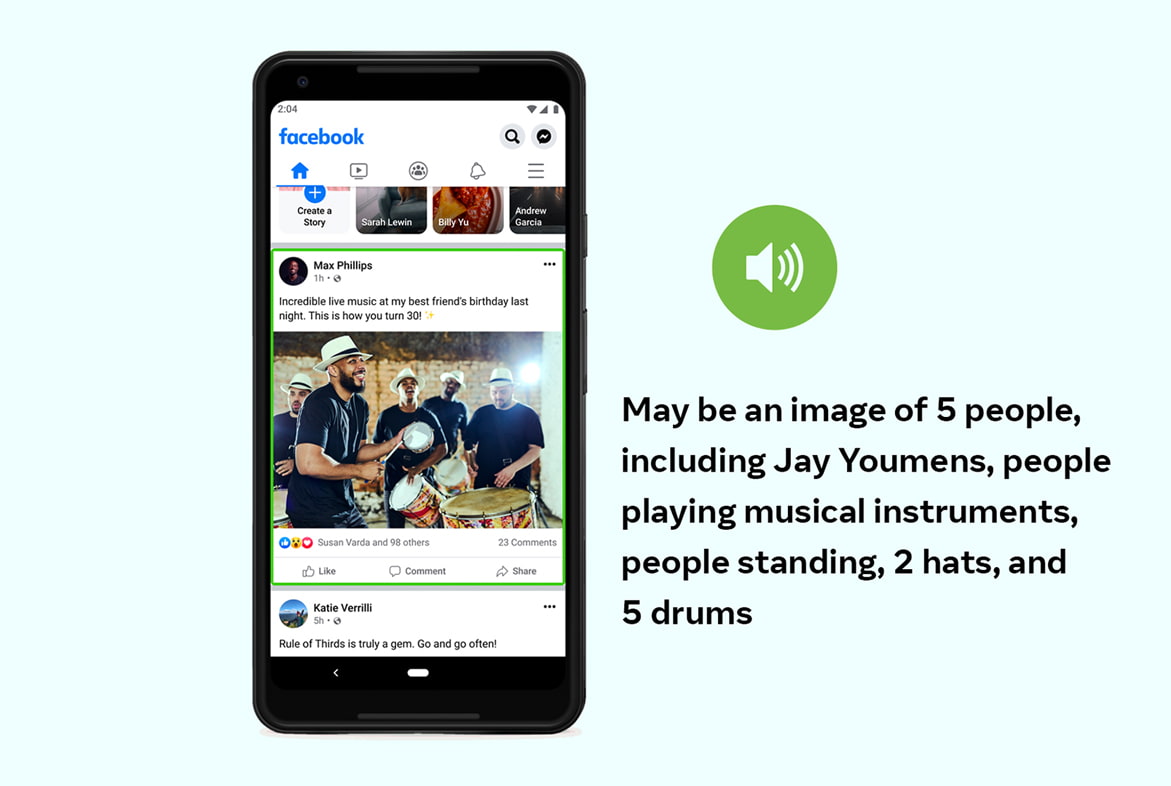

The updated model adds much more detail, describing it as “maybe an image of 5 people playing musical instruments, people standing, 2 hats, and 5 drums.”

The company also claims that the AI can understand different genders, skin colors, and cultural contexts.

Having increased the number of objects the AI can recognize while maintaining a high level of accuracy, the Facebook team turned its attention to how best to describe what the AI finds in a photo.

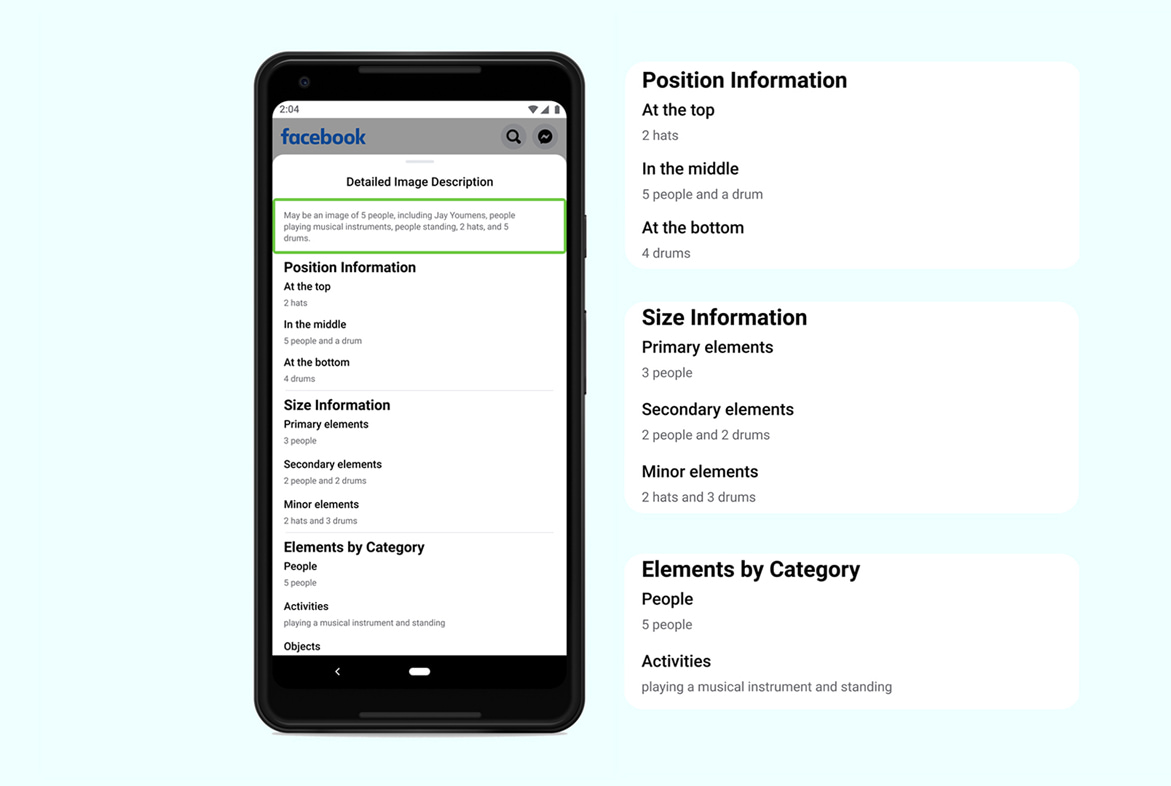

"We asked users who depend on screen readers how much information they wanted to hear and when they wanted to hear it," Facebook said.

"They wanted more information when an image is from friends or family, and less when it’s not. We designed the new AAT to provide a succinct description for all photos by default but offer an easy way to get more detailed descriptions about photos of specific interest."

As a result, users can choose to get detailed descriptions of all photos, or only of photos of specific interest, such as those from friends and family in their Facebook News Feed.
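To make the difference between the succinct default and the on-demand detailed description concrete, here is a minimal Python sketch. It is not Facebook's code: it assumes the model has already produced a list of detected (concept, count) pairs ordered by importance, and the function names `describe`, `format_concept`, and `join_phrases` are hypothetical. The output strings simply mimic the example description Facebook gave.

```python
# Hypothetical sketch only: NOT Facebook's AAT implementation.
# Assumes the model has already detected concepts, ordered by importance.
from typing import List, Tuple

# (label, count); a count of 0 means "show the label without a number"
Concept = Tuple[str, int]


def format_concept(label: str, count: int) -> str:
    # Prefix a count only when one was detected, e.g. "2 hats"
    return f"{count} {label}" if count else label


def join_phrases(phrases: List[str]) -> str:
    # English-style list: "a", "a and b", "a, b, and c"
    if len(phrases) <= 2:
        return " and ".join(phrases)
    return ", ".join(phrases[:-1]) + ", and " + phrases[-1]


def describe(concepts: List[Concept], detailed: bool = False) -> str:
    if not concepts:
        # Placeholder wording, assumed for this sketch
        return "no description available"
    # Succinct default: only the most important concept.
    # Detailed view (user-requested): every detected concept.
    chosen = concepts if detailed else concepts[:1]
    phrases = [format_concept(label, count) for label, count in chosen]
    return "maybe an image of " + join_phrases(phrases)
```

Keeping only the first concept is just one simple way to produce a short default; Facebook's post does not say how AAT actually selects what goes into the succinct description.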

AAT's captioning is available in 45 languages, "ensuring that AAT is useful to people around the world," Facebook said.

While plenty of tools can automatically caption images with the help of AI, Facebook's captioning model is one of the few already deployed on a massive scale.