AI technologies have the power to steal people's creative works, and their rapid advancement is only making things worse.

Some people don't consider AI a threat, while others believe the technology could create a dystopian future in which humanity is slowly eliminated. As the race to develop more powerful generative AI, including AGI, continues, both sides remain convinced of their own views.

Meanwhile, companies like Google, which claim they can train their AI products on whatever they want, are facing criticism for copyright infringement and for using people's personal works without compensation.

In an effort to protect artists and creative minds, researchers from the University of Chicago created what they call 'Nightshade'.

This tool lets users add imperceptible changes to the pixels of their work that can corrupt an AI model's training data.

In other words, the tool from Professor Ben Zhao and his team is essentially a "poison".

The tool works by altering how a machine-learning model produces content and what the finished product looks like.

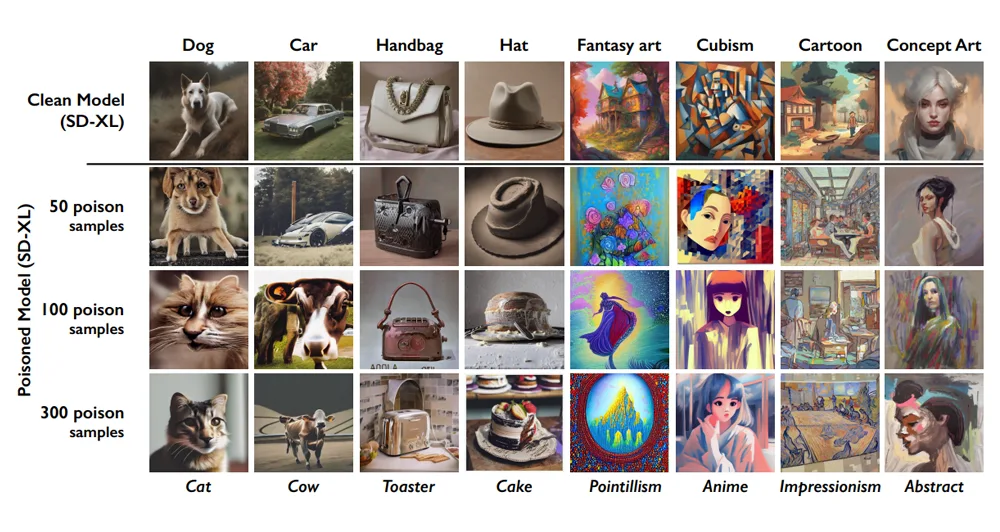

For example, the researchers poisoned an AI's training data so that images of dogs looked like cats in the AI's eyes.

Nightshade does this by embedding information in the pixels of the dog images that disrupts the way the AI interprets them.
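To make the idea of "information hidden in the pixels" more concrete, here is a toy sketch in the spirit of feature-space adversarial perturbations. It is not the researchers' code or Nightshade's actual algorithm. It assumes a hypothetical random linear map (`encoder`) standing in for a model's image encoder, and a hypothetical per-pixel budget `epsilon` that keeps every change small. The function `poison_image` nudges a "dog" image so its features drift toward those of a "cat" image, while no pixel moves by more than `epsilon`.

```python
import numpy as np


def poison_image(image, target_features, encoder, epsilon=0.03, steps=200, lr=0.01):
    """Toy feature-matching perturbation (hypothetical, for illustration only).

    Moves encoder @ image toward target_features by projected gradient descent,
    keeping each pixel change within +/- epsilon and pixels within [0, 1].
    """
    delta = np.zeros_like(image)
    for _ in range(steps):
        poisoned = image + delta
        # Difference between the current features and the target ("cat") features.
        residual = encoder @ poisoned.ravel() - target_features
        # Gradient of ||E x - t||^2 with respect to x is 2 E^T (E x - t).
        grad = 2.0 * encoder.T @ residual
        delta -= lr * grad.reshape(image.shape)
        # Project back into the small per-pixel budget ...
        delta = np.clip(delta, -epsilon, epsilon)
        # ... and keep the poisoned pixels in the valid [0, 1] range.
        delta = np.clip(image + delta, 0.0, 1.0) - image
    return image + delta


# Usage with made-up 8x8 grayscale "images" and a random stand-in encoder.
rng = np.random.default_rng(0)
dog = rng.uniform(0.2, 0.8, size=(8, 8))
cat = rng.uniform(0.2, 0.8, size=(8, 8))
encoder = rng.normal(size=(16, 64)) / 8.0  # hypothetical stand-in, not a real model
target = encoder @ cat.ravel()

poisoned = poison_image(dog, target, encoder)
max_change = np.abs(poisoned - dog).max()
before = np.linalg.norm(encoder @ dog.ravel() - target)
after = np.linalg.norm(encoder @ poisoned.ravel() - target)
print(max_change <= 0.03 + 1e-9)  # True: every pixel change stays tiny
print(after < before)             # True: features moved toward "cat"
```

The budget is what makes the change hard to see: each pixel shifts by at most a few percent of its range, yet the model's view of the image moves toward a different concept. Real attacks use far larger models and perceptual constraints rather than a plain per-pixel clip.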

After learning from 50 poisoned samples, the AI began generating images of dogs with strange legs and unsettling appearances.

After 100 poisoned samples, it reliably generated a cat when a user asked for a dog.

After 300, any request for a dog returned a near-perfect-looking cat.

The researchers obtained these results by testing Nightshade on Stable Diffusion, an open-source text-to-image generation model.

Generative AI models group conceptually similar words and ideas into nearby clusters in a mathematical space, known as "embeddings." Nightshade takes advantage of this.

Through these embeddings, Nightshade also made Stable Diffusion create images of cats when prompted with related words such as “husky,” “puppy” and “wolf.”
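A small illustration of why poisoning "dog" can spill over to "husky," "puppy" and "wolf": words whose embeddings point in nearly the same direction are treated as related. The sketch below uses hypothetical, hand-made 3-D vectors (real text encoders use hundreds of dimensions, and these numbers are not real Stable Diffusion embeddings), cosine similarity as the closeness measure, and a hypothetical `threshold` to decide which prompts sit close enough to the poisoned concept to be affected.

```python
import math

# Hypothetical toy word vectors (NOT real Stable Diffusion / CLIP embeddings).
EMBEDDINGS = {
    "dog":   [0.90, 0.80, 0.10],
    "husky": [0.85, 0.75, 0.20],
    "puppy": [0.95, 0.70, 0.05],
    "wolf":  [0.80, 0.90, 0.30],
    "car":   [0.05, 0.10, 0.95],
    "hat":   [0.10, 0.30, 0.80],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def likely_affected(poisoned, embeddings, threshold=0.9):
    """Return the other words whose embedding is close to the poisoned concept."""
    target = embeddings[poisoned]
    return sorted(
        word
        for word, vec in embeddings.items()
        if word != poisoned and cosine(vec, target) >= threshold
    )


print(likely_affected("dog", EMBEDDINGS))  # ['husky', 'puppy', 'wolf']
```

In this toy space "car" and "hat" point in a very different direction from "dog," so they fall below the threshold, while the dog-like words cluster together and would share in the corrupted association.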

A key advantage of Nightshade's data-poisoning technique is that it is difficult to defend against. The changes are invisible to the human eye, and finding the altered pixels that contain the poison requires specialized software.

The tool is also effective because once poisoned images have been fed into an AI model, they are difficult to remove.

As a result, an AI model that has already been trained on poisoned images would likely need to be retrained.

While the researchers acknowledged that their work could be used for malicious purposes, their "hope is that it will help tip the power balance back from AI companies towards artists, by creating a powerful deterrent against disrespecting artists’ copyright and intellectual property."

Before this, Professor Ben Zhao and his team released a tool called Glaze, which also alters an image's pixels.

The difference between Glaze and Nightshade is that Glaze makes AI systems perceive an image as something entirely different from what it is, whereas Nightshade corrupts the AI model that trains on it.