As one of the giants of the web, the social network Facebook has to deal with a lot of fake accounts.

But with billions of users, it's impractical, if not impossible, for it to police everything manually. This is where machine learning has proven useful: in Facebook's case, it has been able to eliminate almost as many fake accounts as there are new accounts on the site.

For this, Facebook says it uses what it calls 'Deep Entity Classification', or DEC.

This AI has been designed and trained to overcome the limitations of traditional methods of detecting fake accounts.

Normally, Facebook determines whether an account is fake by focusing on the user profile. This method has proven effective when the account displays highly suspicious behavior, like posting the same picture multiple times and tagging most of the people on its friend list in a brief period of time.

But some attackers have become knowledgeable enough to adapt to Facebook's changing algorithms and tailor their attacks.

For example, a spammer can operate many accounts and give each of them some friends and some activity so that it looks like an ordinary, active account.

DEC here looks deeper than that.

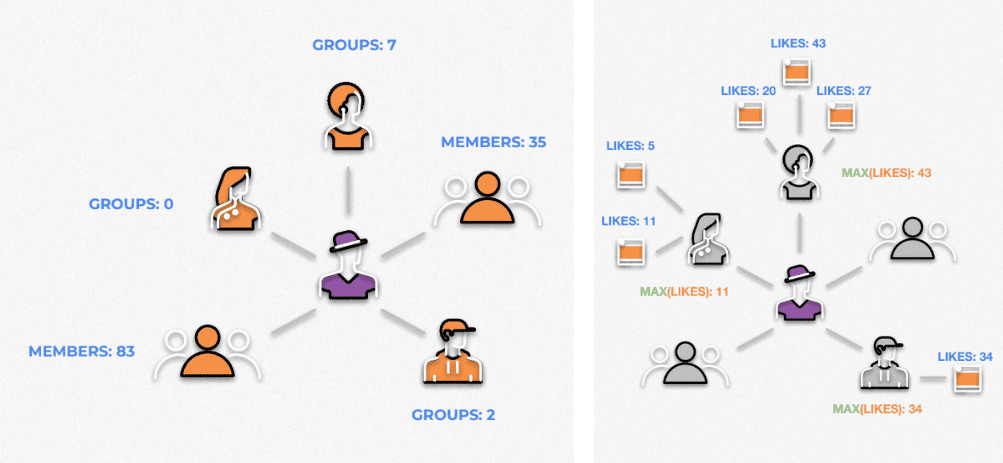

Using machine learning, Facebook can find spammers by connecting a suspect account to all the friends, groups and Pages it interacts with. The AI can investigate all of these entities and how they correlate with each other.

For example, friends typically provide signals through their ages, the groups they have joined, and the number of friends they have. Groups, on the other hand, can be assessed based on their members and admins. Pages can be evaluated by the number of their admins.
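To make the idea concrete, here is a minimal sketch of how signals from an account's friends, groups and Pages could be summarized into a few numbers. It is an illustration only, not Facebook's implementation: the class names, the field names (such as `age_years` and `admin_count`) and the choice of a simple average are all assumptions.

```python
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical entity types; the fields mirror the signals named in the text.
@dataclass
class Friend:
    age_years: int
    groups_joined: int
    friend_count: int

@dataclass
class Group:
    member_count: int
    admin_count: int

@dataclass
class Page:
    admin_count: int

@dataclass
class Account:
    friends: list = field(default_factory=list)
    groups: list = field(default_factory=list)
    pages: list = field(default_factory=list)

def _avg(values):
    """Average of the values, or 0.0 when there are none."""
    values = list(values)
    return mean(values) if values else 0.0

def entity_signals(account):
    """Summarize the entities connected to one account (assumed aggregation)."""
    return {
        "friends_mean_age": _avg(f.age_years for f in account.friends),
        "friends_mean_groups_joined": _avg(f.groups_joined for f in account.friends),
        "friends_mean_friend_count": _avg(f.friend_count for f in account.friends),
        "groups_mean_member_count": _avg(g.member_count for g in account.groups),
        "groups_mean_admin_count": _avg(g.admin_count for g in account.groups),
        "pages_mean_admin_count": _avg(p.admin_count for p in account.pages),
    }

# Made-up example data.
example = Account(
    friends=[Friend(25, 4, 300), Friend(31, 2, 150)],
    groups=[Group(1200, 3)],
    pages=[Page(1), Page(2)],
)
signals = entity_signals(example)
```

A real system would compute far more than averages, but the shape is the same: each connected entity contributes its own properties, and those are rolled up into features of the account being examined.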

After that, it's connecting the dots.

By analyzing the behaviors and properties of all these entities, Facebook's AI looks at more than 20,000 individual data points that the model has been trained on, to get a strong idea of whether an account is fake.

The social network calls these "deep features."

For instance, it can take into consideration the friending activity of an account that the potentially fake account sent a friend request to, and not just that of the suspicious account itself. The system can also analyze details as varied as the number of admins in a group to which a user belongs and the gender distribution of their friends.
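The two examples in this paragraph can be sketched in a few lines. This is a toy illustration under stated assumptions, not how DEC is actually built: the `requests_sent` mapping, the account names and the gender labels are made-up data, and both helper functions are hypothetical.

```python
from collections import Counter

# Made-up data: who each account has sent friend requests to.
requests_sent = {
    "suspect": ["a", "b", "c"],
    "a": [],
    "b": ["a"],
    "c": ["a", "b"],
}

# Made-up data: gender labels of the suspect account's friends.
friend_genders = {"suspect": ["f", "f", "m", "f"]}

def recipient_request_counts(account_id, requests):
    """For each account that `account_id` sent a request to,
    count how many requests that recipient has sent itself."""
    return [len(requests.get(r, [])) for r in requests.get(account_id, [])]

def gender_distribution(genders):
    """Share of each gender label among an account's friends."""
    counts = Counter(genders)
    total = sum(counts.values())
    return {g: c / total for g, c in counts.items()} if total else {}

counts = recipient_request_counts("suspect", requests_sent)
dist = gender_distribution(friend_genders["suspect"])
```

The point of the first function is that it describes accounts one step away from the suspect, which is harder for an attacker to control than the suspect account's own profile.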

According to Facebook's Transparency page, the social network was able to "prevent millions of attempts to create fake accounts using these detection systems" every single day in 2019.

The vast majority of those accounts were caught during the account creation process. Those that did get through tend to be discovered by Facebook’s automated systems before they are ever reported by a real user.

But as an AI product, DEC can make mistakes. No product is perfect, Facebook's included, no matter how well its developers build it.

The prevalence of fake accounts continues to be estimated at approximately 5% of Facebook's worldwide monthly active users (MAU); these are the accounts DEC could not find.

And there are cases when the AI gets it wrong and flags a legitimate account.

In this case, Facebook puts the account through an appeal process. This should give the accused account holder a chance to respond before they are banned.

Facebook considers the following as fake accounts:

- Illegitimate accounts that are not representative of the person.

- Compromised accounts of real users that have been taken over by hackers.

- Spammers who repeatedly send revenue-generating messages.

- Scammers who manipulate users into divulging personal and sensitive information.

Catching those people can be tricky.

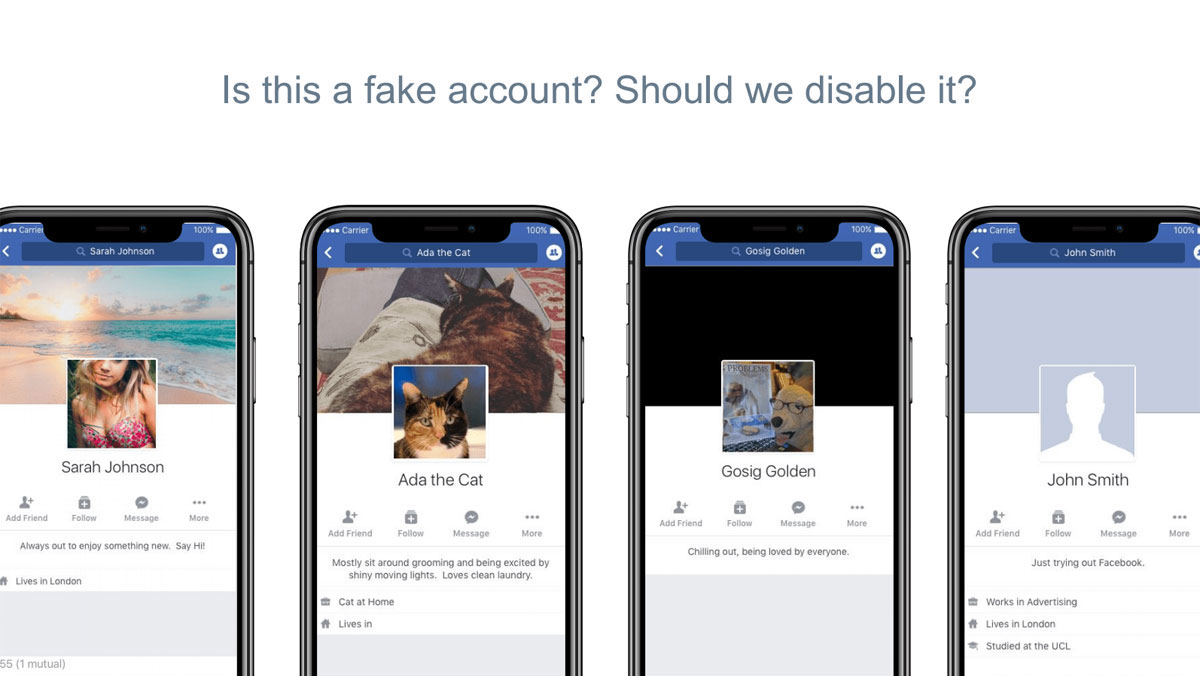

Not all Facebook accounts that look fake have malicious intentions.

For example, there are people who simply love to send friend requests because of their culture. Some users consider people "friends" as soon as they meet them online, while others only add friends after they have become acquainted in real life.

Then there are people who create Facebook accounts for their children or pets. There are also accounts for pop culture characters, run by people who are roleplaying or cosplaying.

There are also people who simply want a Facebook account to view others' content, but don't want to fill in any data or upload a profile picture.

There are also people who have mistakenly created Facebook accounts instead of Facebook Pages, people who create Pages for business and repeatedly post similar products and tag people, users who have passed away and whose accounts were taken over by their relatives, and many more.

These people can look suspicious, but shouldn't be classified as malicious.

With billions of users, Facebook needs to understand their billions of reasons.

Human analysts alone can’t manage the enormous scale, while machines can struggle with the many kinds of human behavior they weren't trained on.

Facebook needs to deal only with malicious accounts that mean harm to other users. Again, with billions of users, there can be billions of possibilities.

With machine learning, Facebook has shown that the technology can lend it a proper helping hand. But going forward, as scammers find ways to manipulate the algorithms, Facebook needs to come up with better strategies.

This is where Facebook needs to further train its AI with more data points, or have its machine learning technology work in concert with human reviewers.