Humans visibly show their emotions in the way they walk. Other humans, with proper training, can read emotions through gait and, apparently, so can computers.

When we're sad, our head may slump a bit and our shoulders may sag. When we're angry, we may stand visibly upright. And when we're happy, we may exaggerate our every movement.

Combine all that with our facial expressions and, without speaking a word, we send a nonverbal signal to other people about how much personal space we need.

Humans with proper training can read this quite easily. After all, we all need this skill to some degree, simply because humans are a social species. But researchers have found that computers can also be trained to understand human emotions from the way people move.

Researchers at the University of Maryland have developed an algorithm called ProxEmo, which their research paper describes as "a novel end-to-end emotion prediction algorithm for socially aware robot navigation among pedestrians."

Put it inside a little yellow wheeled robot, and the AI can analyze human gait in real time and take a guess at how a person might be feeling.

The initial goal is for the robot to infer a person's emotions from their gait and simply give the person in front of it more or less space accordingly.

For example, a happy person should be more willing to have others inside their personal space, so the robot may pass through that space as if nothing were unusual. But if the person is sad or angry, the robot should automatically navigate around them, because a person in a bad mood is unlikely to want to interact with it and should be given a wider personal space.

“Like if an angry person were to walk towards it, it would give more space, as opposed to a sad person or even a happy person,” explained Aniket Bera, a robotics and AI researcher at the University of Maryland, who helped develop ProxEmo.
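The rule Bera describes can be sketched in a few lines of Python. This is only an illustration, not the ProxEmo implementation: the function name and the distances in meters are hypothetical, and the only thing taken from the article is the ordering (most space for an angry person, less for a sad one, least for a happy one).

```python
# Hypothetical clearance values in meters; the article gives no numbers.
BASE_CLEARANCE_M = 0.5
EXTRA_CLEARANCE_M = {
    "happy": 0.0,  # least extra space
    "sad": 0.5,
    "angry": 1.0,  # most extra space
}


def clearance_for(emotion: str) -> float:
    """Return how far the robot should stay from a person, given a guessed emotion."""
    # An emotion the table does not know falls back to the most cautious margin.
    extra = EXTRA_CLEARANCE_M.get(emotion, max(EXTRA_CLEARANCE_M.values()))
    return BASE_CLEARANCE_M + extra
```

Falling back to the widest margin for an unrecognized emotion is a design choice made for this sketch: when the guess is unclear, keeping more distance is the safer mistake.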

In another example, a computer's ability to interpret gait can also help robots respond to people.

“If somebody's feeling sad or confused, the robot can go up to the person and say, 'Oh you're looking sad today, do you need help?'”

To build this kind of AI, the researchers began by studying humans.

They asked a group of people to watch others walk, then asked that group a series of questions about what they thought the walkers were feeling. The resulting data was highly subjective, but still sufficient to serve as the AI's training data set.

The researchers then translated how each gait looks into objective data.

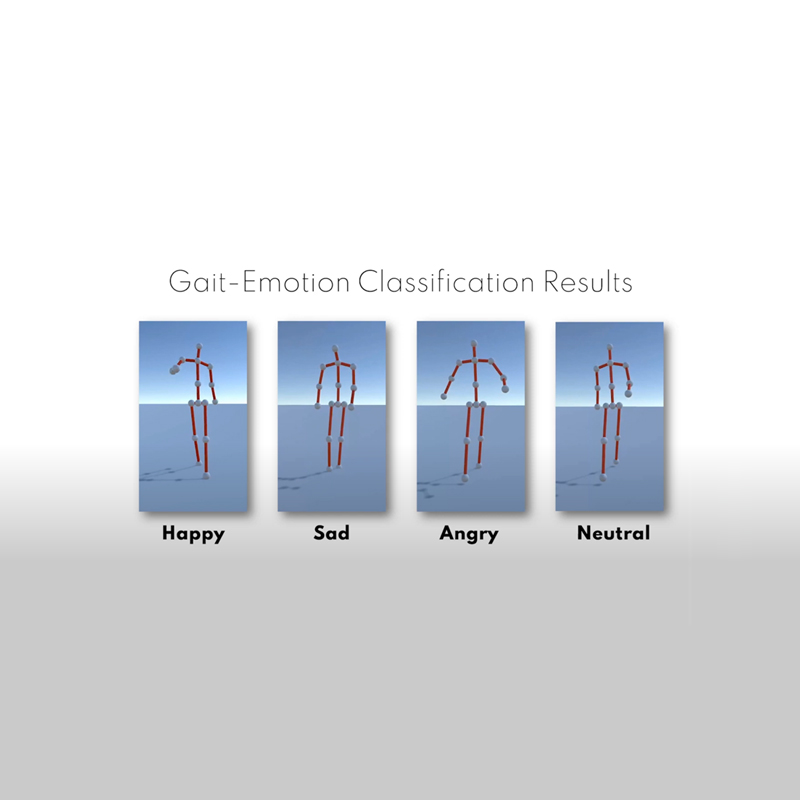

Because computers cannot understand subjective judgement, the researchers needed to create algorithms that let the AI analyze videos of people walking, with each person's image overlaid by a skeleton with 16 joints. This way, the AI can scan the movements and associate certain skeletal gaits with the emotions that the human volunteers attributed to the people walking.
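To make the idea of "objective data" concrete, here is a minimal Python sketch of how a walking clip could be turned into plain numbers. It assumes, purely for illustration, that each joint is stored as an (x, y, z) position; the article only states that the skeleton has 16 joints, and the function name is hypothetical.

```python
NUM_JOINTS = 16  # from the article; the joint ordering is not specified


def flatten_gait(frames):
    """Turn a walking clip into one flat list of numbers.

    frames: a list of video frames, each a list of 16 (x, y, z) joint positions.
    """
    features = []
    for i, frame in enumerate(frames):
        if len(frame) != NUM_JOINTS:
            raise ValueError(
                f"frame {i} has {len(frame)} joints, expected {NUM_JOINTS}"
            )
        # Append the three coordinates of every joint, frame after frame.
        for x, y, z in frame:
            features.extend((x, y, z))
    return features
```

A list like this, paired with the emotion label the volunteers gave the clip, is the kind of input a learning algorithm can be trained on.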

The ProxEmo algorithm was then loaded onto "Jackal," a little yellow wheeled robot made by the robotics company Clearpath.

With a front-mounted camera, Jackal can watch passing humans as it rolls along.

When it sees a human in front of it, ProxEmo will then overlay the human with the 16-joint skeleton in order to guess the person's emotions.

“So there's more space ahead of you, there's some space at the sides, and there's less personal space behind you,” says Bera.
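The uneven shape Bera describes can be sketched as a function of direction. In this Python example only the ordering (front larger than sides, sides larger than back) comes from the quote; the distances in meters, the cosine blending, and the function name are assumptions made for illustration.

```python
import math

# Hypothetical comfort distances in meters; only their ordering comes from the quote.
FRONT_M, SIDE_M, BACK_M = 1.2, 0.8, 0.4


def comfort_distance(angle_rad: float) -> float:
    """Comfort distance at an angle measured from the person's facing direction.

    0 is directly ahead, pi/2 is directly to the side, pi is directly behind.
    """
    c = math.cos(angle_rad)
    if c >= 0:
        # Front half: blend from the side distance up to the front distance.
        return SIDE_M + (FRONT_M - SIDE_M) * c
    # Back half: c is negative, so this shrinks from the side distance to the back one.
    return SIDE_M + (SIDE_M - BACK_M) * c
```

A robot approaching from behind could, under these assumptions, pass closer than one approaching head-on.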

According to the researchers' GAMMA research group web page, ProxEmo achieved a mean average emotion prediction precision of 82.47% on the Emotion-Gait benchmark dataset.

It should be noted, though, that gait is no guarantee of a person's real emotions.

Some people don't show much emotion nonverbally, meaning their feelings may not come through in their gait. In such cases, ProxEmo may fail. What's more, different people express the same emotion in different ways, and being able to read one person's emotion doesn't mean that ProxEmo can read anyone's inner state.

Not even a human can look at another person and say with 100% confidence that they know if that person is happy, sad, or angry.

This AI may seem like a small step for robot-human interaction, but if developed further, or paired with another system that reads facial cues, it could become a more capable machine.

For example, future robots with the AI could be sophisticated enough that they won't have to be told to offer assistance.