The AI field was rather dull, quiet, and boring. But this is changing fast.

As soon as OpenAI introduced ChatGPT and DALL·E, the world began to notice how capable generative AIs can be, and how helpful they can become.

Realizing the potential of this lucrative market, tech companies began to scramble for their own solutions, by either adopting OpenAI's products, or create their own.

From Alphabet's Google to Meta, Snap, to other smaller companies and startups, like Stable Diffusion, to Runway's Midjourney.

And this competition isn't limited to the West, because the East is also looking forward for a world with generative AI.

Tencent, the Chinese tech titan known for its video gaming empire and chat app WeChat, unveiled a new version of its open source video generation model 'DynamiCrafter' on GitHub.

DynamiCrafter

Demo: https://t.co/im9Jb6xH3y

model: https://t.co/jvp6qku3MN

Animating Open-domain Images with Video Diffusion Priors pic.twitter.com/sq3x3SMa5t— AK (@_akhaliq) February 5, 2024

According to its page on HuggingFace, DynamiCrafter is essentially a video diffusion model that "takes in a still image as a conditioning image and text prompt describing dynamics, and generates videos from it."

The model, which was developed and funded by the Chinese University of Hong Kong (CUHK) and the people at Tencent AI Lab, is similar to other generative video tools on the market.

The method it uses, is the diffusion method to turn captions and still images into videos.

This is inspired by the natural phenomenon of diffusion in physics, diffusion models in machine learning algorithms can transform simple data into more complex and realistic data, similar to how particles move from one area of high concentration to another of low concentration.

This time, Tencent revealed the second generation of DynamiCrafter, which is able to churn out convincing videos at a pixel resolution of 640×1024 given a context frame of the same resolution, which is an upgrade from its initial release in October that was only capable of generating 320×512 videos.

The updated model is able to generate videos that are only 16 video frames, or 2 seconds long, and cannot render any legible text.

And also, the faces and people in general may not be generated properly.

The autoencoding part of the model is also lossy, which may result in slight flickering artifacts.

DynamiCrafter shows that generative AI is not exclusive to the Westerns' interest.

With the world seeing its power and abilities, it's certain that more companies will jump into the bandwagon to either experiment or use the technology.

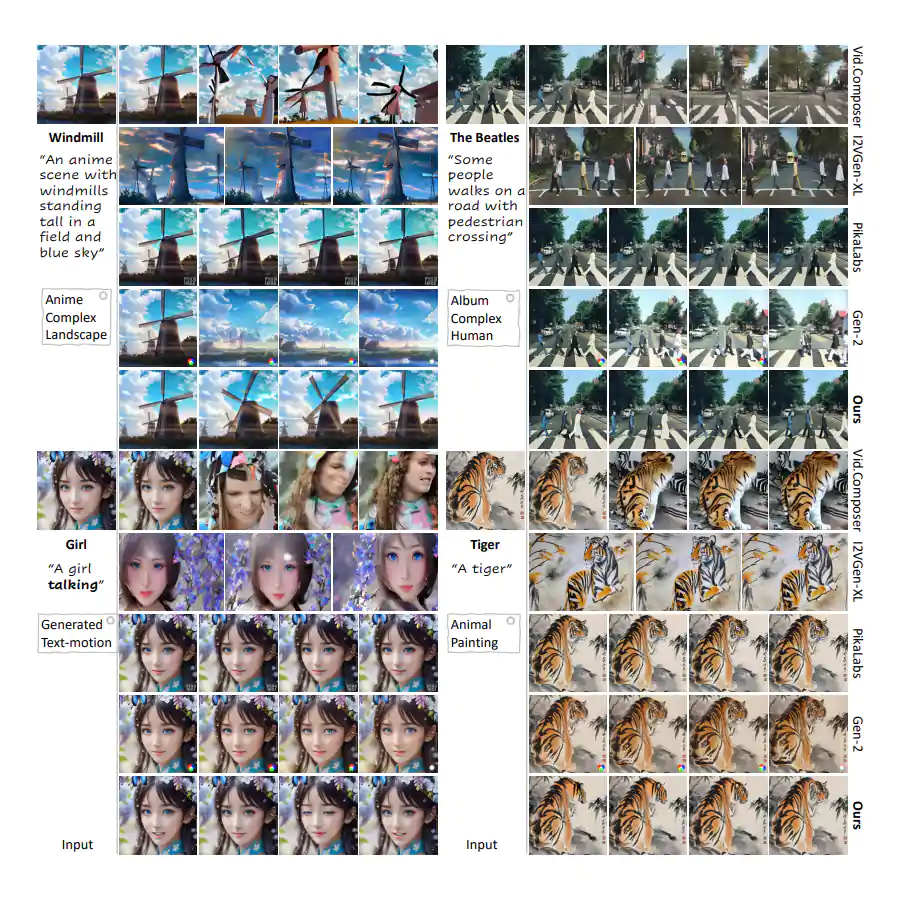

While DynamiCrafter is pretty much the same as its Western counterparts, the academic paper published by the team behind DynamiCrafter notes that its technology differs from those of competitors in that it broadens the applicability of image animation techniques to "more general visual content."

"The key idea is to utilize the motion prior of text-to-video diffusion models by incorporating the image into the generative process as guidance," the paper explains. "Traditional" techniques, in comparison, "mainly focus on animating natural scenes with stochastic dynamics (e.g. clouds and fluid) or domain-specific motions (e.g. human hair or body motions)."

In a demo, the team compares DynamiCrafter with Stable Video Diffusion, and Pika from Pika Labs, and the result shows that Tencent's model appears slightly more animated than others.

With China now in the competition, the move marks the next focal point in the AI race following the boom of generative text and images. It’s thus expected that startups and tech incumbents are pouring resources into the field, opening up to the development of even more powerful and capable AI products.