The AI field used to be kind of boring and dull. The ripples the technology sent barely made it beyond its own realm, and captivated hardly anyone but researchers and geeks.

But following OpenAI's introduction of ChatGPT, the world was quickly awed. It sent other tech companies into a frenzy, sparking an arms race the world had never seen before.

And the rivalry isn't restricted to the West: in the East, tech companies have also taken notice.

Alibaba, the e-commerce titan that has evolved into a broader internet and technology company, is also eyeing this lucrative industry.

This time, the researchers at Alibaba's Institute for Intelligent Computing, which lives within the Chinese e-commerce juggernaut, debuted what they call 'EMO,' short for "Emote Portrait Alive."

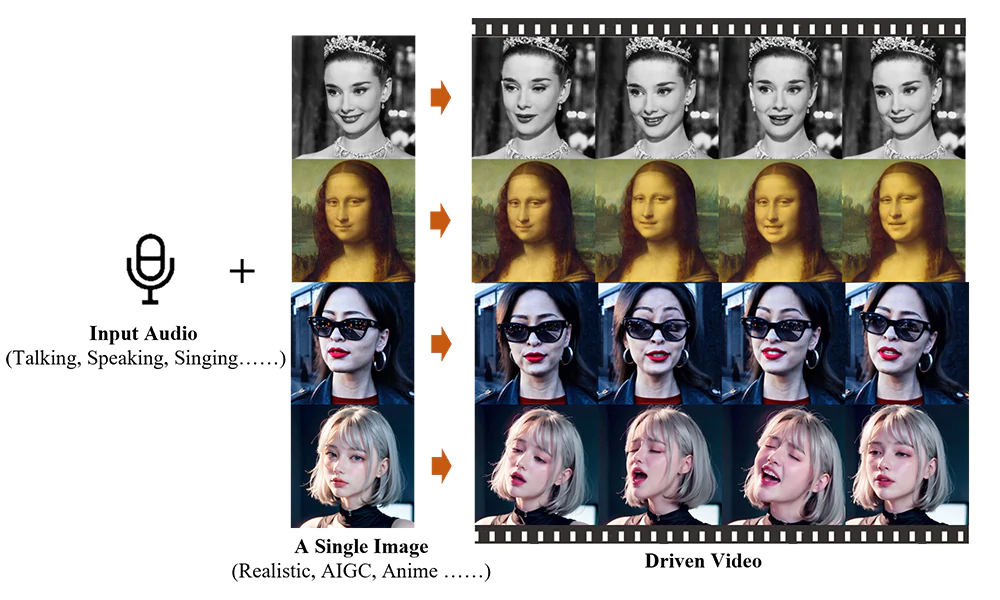

What it does is turn static photos into videos.

At first glance, it may look similar to OpenAI's Sora. But on the inside, it's different, and it's in a different league entirely.

According to lead author Linrui Tian in the research paper:

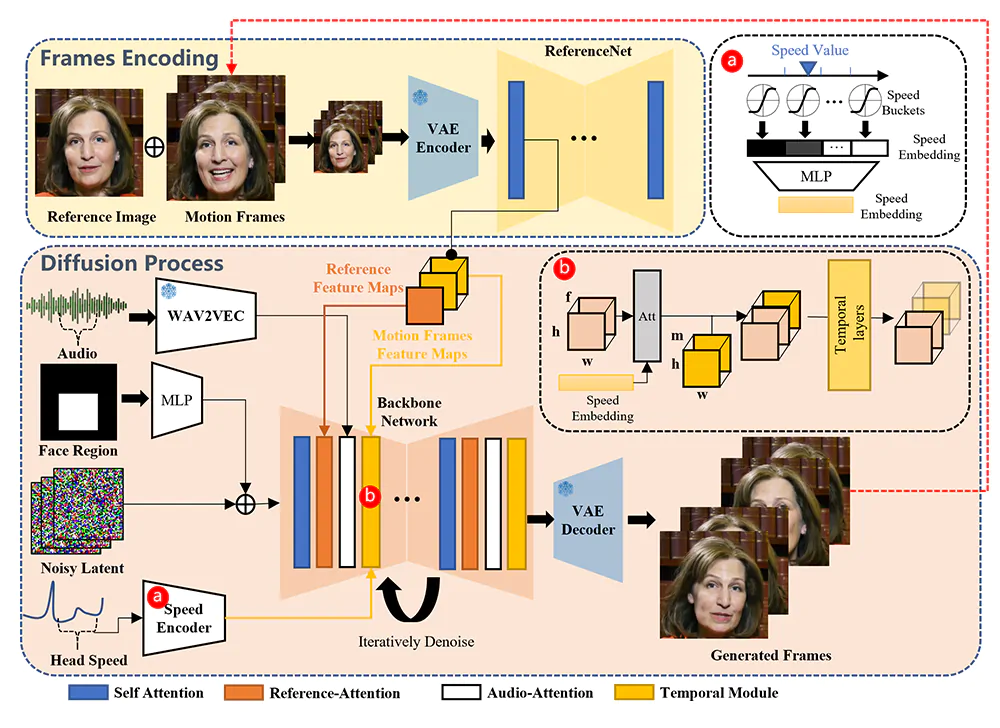

"To address these issues, we propose EMO, a novel framework that utilizes a direct audio-to-video synthesis approach, bypassing the need for intermediate 3D models or facial landmarks."

The AI requires only a single portrait photo and an audio track, and from those alone it can generate videos of the person talking, or even singing.

And the result is strikingly lifelike.

The AI, described in a research paper, is said to be able to create fluid and expressive facial movements and head poses that closely match the nuances of a provided audio track.

EMO does this by using an AI technique known as a diffusion model, which has shown a tremendous ability to generate realistic synthetic imagery.

The researchers at Alibaba's Institute for Intelligent Computing trained the model on a dataset of over 250 hours of talking-head footage curated from speeches, films, TV shows, and singing performances.

To create the motion, the researchers designed EMO to convert the audio waveform directly into video frames.

This approach is unlike previous methods that rely on 3D face models or blend shapes to approximate facial movements.

The AI is so advanced that it can capture subtle motions, and even the identity-specific quirks associated with natural speech, and mimic them.

EMO can also animate singing portraits with appropriate mouth shapes and evocative facial expressions synchronized to the vocals.

According to experiments described in the paper, EMO significantly outperforms existing state-of-the-art methods on metrics measuring video quality, identity preservation, and expressiveness.

The researchers also conducted a user study that found the videos generated by EMO to be more natural and emotive than those produced by other systems.

“Experimental results demonstrate that EMO is able to produce not only convincing speaking videos but also singing videos in various styles, significantly outperforming existing state-of-the-art methodologies in terms of expressiveness and realism,” the paper states.

In all, this represents a major advancement in audio-driven talking head video generation, an area that has challenged AI researchers for years.

Rather than using generative AI to create videos that have no sound, EMO offers a peek into a future where AI-generated videos are a lot more appealing.

EMO marks a major milestone.

The technology hints at a future where personalized video content can be synthesized from just a photo and an audio clip.

However, as with pretty much every other AI product that makes a leap, ethical concerns remain about potential misuse of such technology to impersonate people without consent or spread misinformation.

The researchers at Alibaba said that they plan to explore methods to detect synthetic video.

Initially, the AI was not released, and the only results available were demos provided by its creators.