In the race to make computers better at mimicking humans, steps have been taken to make them even more convincing.

Google, for example, has what it calls 'Meena'. The company said the AI has 2.6 billion parameters and was trained on 40 billion words (341GB of text) drawn from social media conversations, allowing it to talk with its conversation partner about practically anything.

While Google is one of the largest players in the field, let's not forget Facebook, the social giant that is also aiming for AI supremacy on the web.

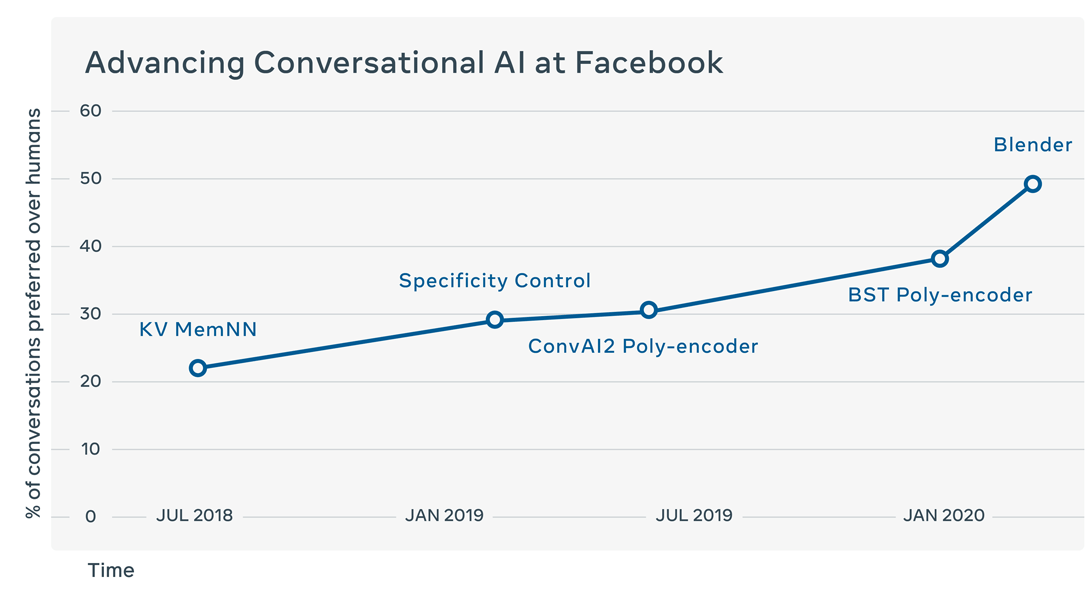

Facebook AI researchers announced 'Blender', the "state-of-the-art open source chatbot" it has been developing to be “more human” than previous iterations and provide more robust conversations.

Based on early indications, it seems that Facebook isn't making that up.

It looks like Blender is better than bots from Google or Microsoft, simply because it can blend in.

Today we’re announcing that Facebook AI has built and open-sourced Blender, the largest-ever open-domain chatbot. It outperforms others in terms of engagement and also feels more human, according to human evaluators. https://t.co/TdNxJpL0JI pic.twitter.com/1wo3ZxwDeU

— Facebook AI (@facebookai) April 29, 2020

According to Facebook's blog post:

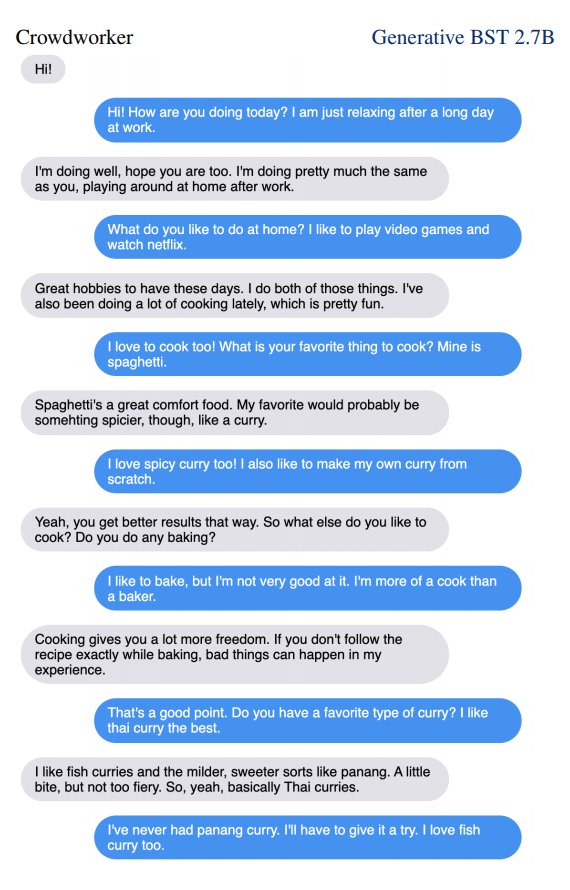

"It [Blender] outperforms others in terms of engagement and also feels more human, according to human evaluators. This is the first time a chatbot has learned to blend several conversational skills — including the ability to assume a persona, discuss nearly any topic, and show empathy — in natural, 14-turn conversation flows. Today we’re sharing new details of the key ingredients that we used to create our new chatbot."

Part of the recipe for Blender is scale: Facebook trained it with "up to 9.4 billion parameters — or 3.6x more than the largest existing system."
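As a quick sanity check on that multiplier, assume the "largest existing system" is Google's Meena with the 2.6 billion parameters mentioned earlier (the quote does not name it, so this is an assumption). The ratio then works out as:

$$
\frac{9.4 \times 10^{9}}{2.6 \times 10^{9}} \approx 3.6
$$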

Scale is not the whole story, though: according to Facebook, the "equally important techniques" include "blending skills and detailed generation."

To train Blender, Facebook first pretrained it on large-scale conversational data.

Previously, Facebook used available public-domain conversations that involved 1.5 billion training examples of extracted conversations. Because these neural networks have grown too large to fit on a single device, Facebook started using techniques such as column-wise model parallelism, which splits the neural network into smaller, more manageable pieces while keeping training efficient.
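The article does not show what column-wise model parallelism looks like. As a rough, hypothetical illustration (not Facebook's actual ParlAI code), the sketch below splits one linear layer's weight matrix by columns into shards, as if each shard lived on a different device. Each shard computes its slice of the output, and the slices are concatenated. Plain Python lists stand in for tensors and devices, and the function names are invented for this example.

```python
# Hypothetical sketch of column-wise model parallelism for a single linear layer.
# Lists of rows stand in for tensors; each column shard stands in for one device.

def matmul(x, w):
    """Multiply x (n x k) by w (k x m), both given as lists of rows."""
    return [
        [sum(x[i][t] * w[t][j] for t in range(len(w))) for j in range(len(w[0]))]
        for i in range(len(x))
    ]

def split_columns(w, num_shards):
    """Split w's columns into at most num_shards contiguous chunks ("devices")."""
    m = len(w[0])
    size = -(-m // num_shards)  # ceiling division so every column is covered
    return [[row[s:s + size] for row in w] for s in range(0, m, size)]

def column_parallel_linear(x, w, num_shards):
    """Compute x @ w with w sharded column-wise, then gather the results."""
    # Each "device" holds one column shard and needs the full input x.
    partials = [matmul(x, shard) for shard in split_columns(w, num_shards)]
    # Concatenate each row's partial outputs in shard order (the "gather" step).
    return [sum((p[i] for p in partials), []) for i in range(len(x))]

x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]
print(column_parallel_linear(x, w, num_shards=2))  # [[1, 2, 4, 7], [3, 4, 10, 15]]
print(column_parallel_linear(x, w, 2) == matmul(x, w))  # True
```

In a real system the shards would sit on separate GPUs and the final concatenation would be a communication step between them; this sketch only shows that the sharded computation reproduces the unsharded result while no single "device" holds the whole weight matrix.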

This allows Facebook to scale the AI beyond what a single device could hold.

The next ingredient is making the AI a good conversationalist. Training an AI on large-scale public data doesn't necessarily teach it the traits of the best conversationalists, so Facebook fine-tuned it on a task called Blended Skill Talk (BST).

This teaches the AI to use personality engagingly, draw on knowledge, display empathy, and blend all of these seamlessly.

To evaluate the final model, Facebook benchmarked its performance against Google’s latest Meena chatbot through human evaluations.

In the project's paper, the researchers said that "in human evaluations of engagingness our best model outperforms Meena in a pairwise comparison 75% to 25%, and in terms of humanness by 65% to 35%."

Facebook also acknowledged the model's limitations: "Though it’s rare, our best models still make mistakes, like contradiction or repetition, and can 'hallucinate' knowledge, as is seen in other generative systems. Human evaluations are also generally conducted using relatively brief conversations, and we’d most likely find that sufficiently long conversations would make these issues more apparent."

Looking forward, Facebook said that it is exploring ways to improve Blender's conversational quality in longer conversations, using new architectures and different loss functions. The company is also focusing on building stronger classifiers to filter out harmful language in dialogues.

"We’ve seen preliminary success in studies to help mitigate gender bias in chatbots," said Facebook.

"We believe that releasing models is essential to enable full, reliable insights into their capabilities. That’s why we’ve made our state of the art open-domain chatbot publicly available through our dialogue research platform ParlAI."

Facebook is releasing Blender as an open-source project, along with the code for fine-tuning it and for conducting automatic and human evaluations.

"We hope that the AI research community can build on this work and collectively push conversational AI forward."