Google has long been the most powerful search engine on the web. With nothing stopping it, it keeps getting better with each new innovation.

The search engine has always offered filters and multiple terms for text searches. For example, users can search "windows" to see results related to "Microsoft," "Windows," or openings in a wall. Searching with an image is very different, because Google first needs to understand what the image shows and then find the context it needs to answer the user's query.

With 'multisearch', Google is stepping up its game.

Through multisearch, Google wants to make it easier to search for things that are hard to describe with just a few words or an image.

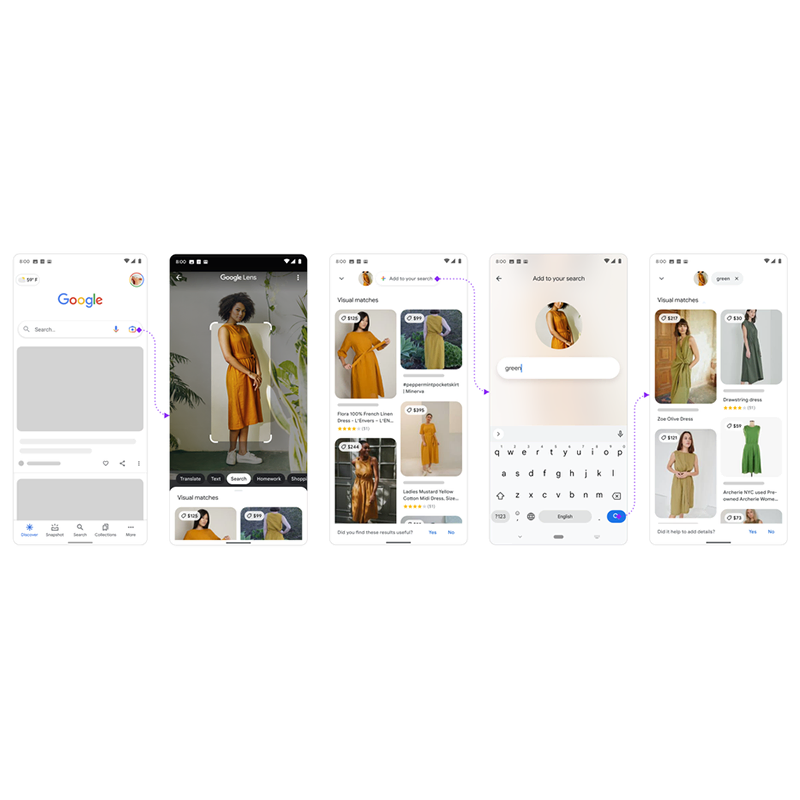

In an example Google provided, users could search for a dress similar to one in a photo, but in a different color.

Users can do this by opening Google Lens, taking a picture of the dress, and then typing a single word, such as the color they want, into the search field.

It all began in 2021, when Google announced that it was bringing MUM to enhance the capabilities of its search engine.

Later that year, Google showed off how MUM, or Multitask Unified Model, would make it possible to search images and text simultaneously within Google Lens.

This is possible because MUM, which Google says is 1,000 times more powerful than BERT, can pick up information from formats beyond text, including pictures, audio, and video.

Google's MUM can also transfer that knowledge across the 75 (and counting) languages its AI has been trained on.

At the time, the company gave an example in which MUM could correctly identify exactly what was in a photo and understand which part needed to be fixed.

In Google's example, that part was a bicycle derailleur.

Google then returns answers in the form of videos, forum results, and other web pages the search engine has crawled and indexed.

Building on that effort, multisearch is "a feat of machine learning" that could change the way people perform some of the most common searches.

Available on both Android and iOS, multisearch is initially launching in beta for English-language users in the U.S.

In its announcement, Google said:

"At Google, we’re always dreaming up new ways to help you uncover the information you’re looking for — no matter how tricky it might be to express what you need. That’s why today, we’re introducing an entirely new way to search: using text and images at the same time. With multisearch in Lens, you can go beyond the search box and ask questions about what you see."

With multisearch, users can ask a question about an object in front of them: after snapping a photo in Lens, they tap the "+ Add to your search" button and add text that refines the search by color, brand, or another visual attribute.

"All this is made possible by our latest advancements in artificial intelligence, which is making it easier to understand the world around you in more natural and intuitive ways," added Google.

"We’re also exploring ways in which this feature might be enhanced by MUM – our latest AI model in Search – to improve results for all the questions you could imagine asking."