DeepMind is an artificial intelligence (AI) company acquired by Google back in 2014.

Under Alphabet's umbrella, its goal is to "solve intelligence" by trying to combine "the best techniques from machine learning and systems neuroscience to build powerful general-purpose learning algorithms."

On October 12, 2016, Google announced that the AI can now draw on its own memory bank, learning from what it has stored and using it as a knowledge base to answer further questions.

This means the AI has the potential to respond to queries on its own, without humans having to teach it the possible answers.

The new AI model is called a differentiable neural computer (DNC).

A DNC can learn from the data structures it is fed, and then answer complex questions about the relationships between the items in them.

For example, the computer could respond to questions like: "Starting at Bond street, and taking the Central line in a direction one stop, the Circle line in a direction for four stops, and the Jubilee line in a direction for two stops, at what stop do you wind up?"

This type of question requires understanding the structure of the data and the relationships within it, and the DNC model can plan an efficient way of working out which connections to follow.
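To make the shape of such a question concrete, here is a minimal sketch that answers one by hand-written traversal. The network, the station names, and the `ride` helper are all invented for illustration; they are not the real London Underground map, and this is not how a DNC works internally. A DNC is not given a routine like this and has to learn the equivalent behaviour from examples.

```python
# Toy network: line names and stations are invented for illustration,
# not taken from the real London Underground map.
LINES = {
    "red": ["A", "B", "C", "D"],
    "blue": ["C", "E", "F", "G", "H"],
    "green": ["H", "I", "J"],
}


def ride(start, legs):
    """Follow (line, direction, stops) legs from start and return the final station."""
    station = start
    for line, direction, stops in legs:
        route = LINES[line]
        index = route.index(station)   # raises ValueError if the station is not on this line
        index += direction * stops     # direction is +1 or -1 along the list
        if not 0 <= index < len(route):
            raise ValueError(f"ran off the end of the {line} line")
        station = route[index]
    return station


# One stop on one line, four stops on the next, two stops on the last.
final_stop = ride("B", [("red", +1, 1), ("blue", +1, 4), ("green", +1, 2)])
print(final_stop)
```

Each leg depends on where the previous leg ended, which is why the question cannot be answered without keeping track of the structure of the data.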

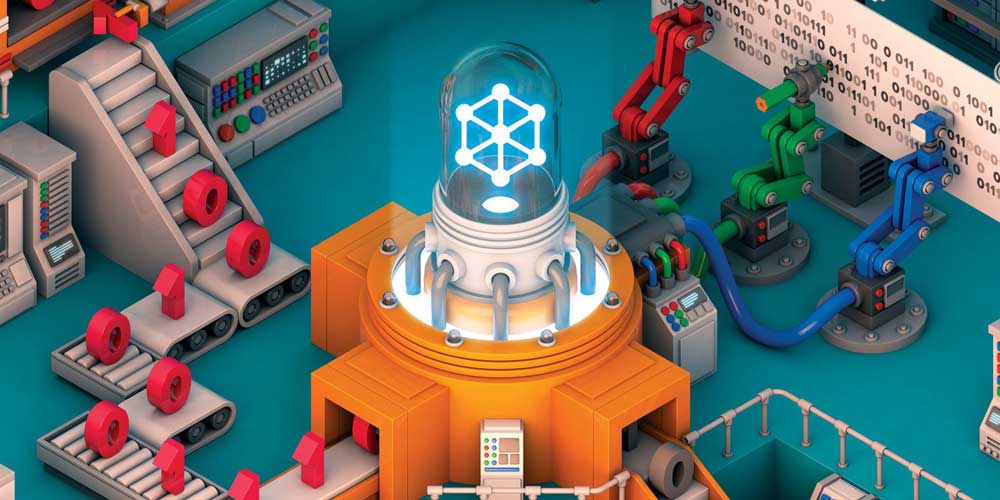

Below is the visualization of how it works:

This discovery builds on the concept of neural networks in AI, which mimic the way the human mind works. They are well suited to machine learning applications in which computers learn to do things by recognizing patterns.

Using the DNC, humans can further reduce their role in providing information to AI. Previously, AI needed a lot of data to learn, and it would structure all that data as it got to know its way around the subject.

For example, Google's AlphaGo AI, which defeated a human world champion at the game of Go, had to learn by being fed more than 30 million moves from the complex and historic game. But by augmenting its capability with the DNC model, the AI can learn from its memory, and will likely be able to complete the task of learning on its own.

At the heart of the DNC is a neural network called a controller, which performs several operations on memory. The controller chooses whether or not to write data to memory. When it chooses to write, it can store the information either in a new, unused location or in an existing location that already contains information the controller is searching for.

This lets the controller update whatever data is stored in that location. Each time new information is written, the locations are connected by links of association, which represent the order in which the information was stored.
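The write behaviour described above can be sketched with a deliberately simplified, discrete memory. The `ToyMemory` class, its method names, and the key/value layout are assumptions made for this example. A real DNC does not pick a single slot with exact matching; it uses soft, differentiable attention over all locations so that the whole system can be trained.

```python
class ToyMemory:
    """Discrete stand-in for DNC memory; the real model uses soft, differentiable attention."""

    def __init__(self, size):
        self.slots = [None] * size   # None marks an unused location
        self.links = []              # (previous_location, next_location) pairs, in write order
        self.last_written = None

    def write(self, key, value):
        # Prefer a location that already holds this key, so the entry is updated in place.
        location = next(
            (i for i, slot in enumerate(self.slots) if slot is not None and slot[0] == key),
            None,
        )
        if location is None:
            # Otherwise take the first unused location.
            location = self.slots.index(None)   # raises ValueError when memory is full
        self.slots[location] = (key, value)
        # Record the temporal link from the previously written location to this one.
        if self.last_written is not None:
            self.links.append((self.last_written, location))
        self.last_written = location
        return location


memory = ToyMemory(size=4)
first = memory.write("stop", "first")
second = memory.write("line", "red")
third = memory.write("stop", "second")   # same key: updates the first location
print(first, second, third, memory.links)
```

The third write reuses the location of the first because the key matches, and `links` keeps the order in which locations were written.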

Besides writing, the controller can also read from multiple locations in memory. The associative temporal links created earlier can be followed forward or backward to recall information in the order it was written or in reverse. This lets the AI read out information to use in answering questions or taking actions.
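Following temporal links forward and backward can be illustrated with a short sketch. The `recall` function and the sample write order are hypothetical, and the sketch assumes each location was written exactly once. In the actual DNC these links are continuous weights rather than a lookup table.

```python
def recall(write_order, start, direction="forward"):
    """Return the locations reached from start by following temporal links.

    Assumes every location appears only once in write_order.
    """
    # Each written location points to the one written immediately after it.
    forward = dict(zip(write_order, write_order[1:]))
    backward = {nxt: prev for prev, nxt in forward.items()}
    links = forward if direction == "forward" else backward

    path = [start]
    while path[-1] in links:
        path.append(links[path[-1]])
    return path


# Hypothetical order in which four memory locations were written.
write_order = [2, 0, 3, 1]
print(recall(write_order, start=2))                        # oldest to newest
print(recall(write_order, start=1, direction="backward"))  # newest to oldest
```

Starting from the first location written and reading forward replays the sequence; starting from the last and reading backward replays it in reverse.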

This method allows the DNC to produce an answer, and when that answer is corrected, the controller learns to produce answers closer and closer to the desired one. In this way, the DNC enables a computer to learn how to use its own memory and how to produce answers from scratch.
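The idea of answers moving "closer and closer" after each correction is what gradient descent provides, and a DNC can be trained this way because every part of it is differentiable. The sketch below shows the principle on a far smaller problem: a single weight and a squared-error loss, both chosen for this example. The `train` function and the sample data are illustrative assumptions, not part of the DNC.

```python
def train(inputs, targets, steps=50, learning_rate=0.1):
    """Fit output = weight * input by gradient descent on mean squared error."""
    weight = 0.0
    errors = []
    for _ in range(steps):
        gradient = 0.0
        total_error = 0.0
        for x, target in zip(inputs, targets):
            diff = weight * x - target   # how far the answer is from the correct one
            total_error += diff ** 2
            gradient += 2 * diff * x
        errors.append(total_error / len(inputs))
        # Nudge the weight in the direction that reduces the error.
        weight -= learning_rate * gradient / len(inputs)
    return weight, errors


# The correct answers follow target = 2 * input; the model starts knowing nothing.
weight, errors = train(inputs=[1.0, 2.0, 3.0], targets=[2.0, 4.0, 6.0])
print(weight, errors[0], errors[-1])
```

The recorded error starts high and shrinks as the weight approaches 2, mirroring how repeated correction pulls the model's answers towards the desired ones.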