By gathering everything people have posted on the web into a single place, search engines seem smart enough to answer just about anything.

The downside of search engines, however, is that they tend to provide the most detailed answers they can, in order to dispel any doubts their users may have. The way they try to answer queries by showing everything they have is also what makes them fascinating.

Unfortunately, there are times when users want straight answers, like a simple 'Yes' or 'No' to a given query.

Well, these people are getting what they want, as Bing has been updated to do just that.

Microsoft has given Bing some improvements, one of which is the ability to answer certain queries with a 'Yes' or 'No', the company announced in a blog post.

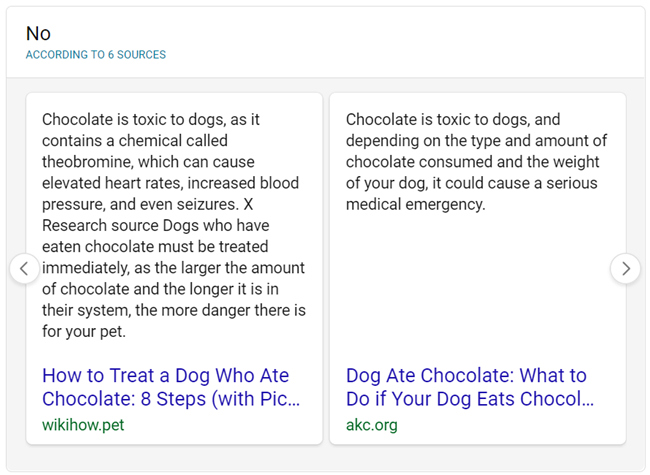

The new search feature shows the one-word answer along with a carousel of related excerpts from various sources.

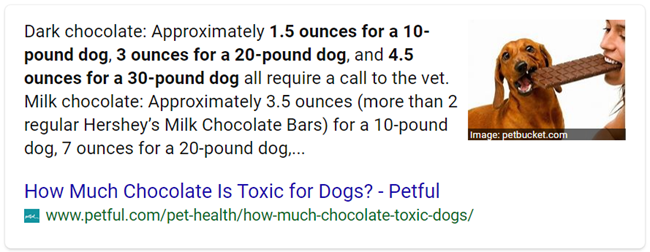

In an example, Bing showcased how the search engine answered the query “can dogs eat chocolate”.

Before the update, Bing would prominently show a single source to answer the query.

"We have always gone through many documents to find and generate the best intelligent answer. Going one step further, we want to summarize the answer, possibly with a single word," Bing said.

To do this, the team at the search engine started with a large pre-trained language model and then followed a multi-task approach.

"That means we fine-tuned the model to perform two separate (but complementary) tasks: assessing the relevance of a document passage in relation to the user query, and providing a conclusive Yes/No answer by reasoning over and summarizing across multiple sources."

Here, information from different sources is synthesized to generate an unambiguous 'No' as the answer to the query “can dogs eat chocolate”.

It does this by understanding the user's query using Natural Language Representation (NLR). The AI model can infer that “chocolate is toxic to dogs” means that dogs cannot eat chocolate, despite sources not explicitly stating so.
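As a rough illustration of how passage relevance and per-passage evidence could be combined into a single word, here is a minimal Python sketch. It is not Bing's implementation: the `Passage` type, the `relevance` and `stance` scores, the `min_margin` threshold, and the example numbers are all hypothetical stand-ins for what a fine-tuned model might produce.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Passage:
    text: str
    relevance: float  # hypothetical score in [0, 1]: how relevant the passage is to the query
    stance: float     # hypothetical score in [-1, 1]: -1 supports "No", +1 supports "Yes"


def summarize_yes_no(passages: List[Passage], min_margin: float = 0.3) -> Optional[str]:
    """Relevance-weighted vote over passages.

    Returns None when there is no evidence or the evidence is too mixed,
    in which case a one-word answer should not be shown.
    """
    total_relevance = sum(p.relevance for p in passages)
    if total_relevance == 0:
        return None
    score = sum(p.relevance * p.stance for p in passages) / total_relevance
    if score >= min_margin:
        return "Yes"
    if score <= -min_margin:
        return "No"
    return None


# Made-up scores for the "can dogs eat chocolate" query.
passages = [
    Passage("Chocolate is toxic to dogs.", relevance=0.9, stance=-0.9),
    Passage("Theobromine in chocolate can poison dogs.", relevance=0.8, stance=-0.8),
    Passage("Dogs enjoy many kinds of treats.", relevance=0.2, stance=0.1),
]
answer = summarize_yes_no(passages)
print(answer)
```

With these invented scores, the two highly relevant passages outweigh the loosely related one, so the sketch settles on 'No'. The threshold lets it decline to answer when sources disagree.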

This search feature should be able to provide users with a concise answer, as well as a number of sources highlighted in the accompanying carousel.

Over the years, the team at Bing has collaborated with the team at Microsoft Research to develop and deploy large neural network models such as MT-DNN, Unicoder, and UniLM, in order to maximize Bing users' search experience.

Following the first improvement using NLR, Bing also showcased two more examples.

In the second example, Bing said that using multi-task deep learning, the team created a Turing NLR-based model that improved both intelligent answers and caption generation for English-speaking markets.

To bring these improvements to more users globally, Bing uses an NLR model pre-trained on 100 different languages, which has been tweaked to draw on knowledge accumulated from other languages to improve its intelligent answers.

Bing showed that it can take a query in Italian that translates to “red turnip benefits” and generate an intelligent answer from a top search result, using the very same universal model that it operates in English-speaking markets.

In the third example, Bing understands query intent well enough to improve search relevance on its own.

"At the core of search relevance is understanding the user intent behind a search query and returning the best web pages that fulfill it. To solve this task at scale, we created a NLR-based model that is fine-tuned to rate potential search results for a given query, using the same scale as our human judges," Bing said.
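The idea of rating each candidate result on a judge-style scale and then ranking by that rating can be sketched in a few lines of Python. Everything here is an assumption for illustration: the five-level `LABELS` scale, the `rate` callback, and the toy word-overlap scorer stand in for the fine-tuned NLR model, whose actual scale and interface the source does not describe.

```python
from typing import Callable, List, Tuple

# Hypothetical judge scale; the source does not say which scale Bing's judges use.
LABELS = ["Bad", "Fair", "Good", "Excellent", "Perfect"]


def toy_rate(query: str, page: str) -> int:
    """Stand-in for a fine-tuned model: rate by the share of query words found in the page."""
    query_words = set(query.lower().split())
    page_words = set(page.lower().split())
    if not query_words:
        return 0
    common = len(query_words & page_words)
    # Integer arithmetic maps the overlap share onto an index into LABELS.
    return min(common * len(LABELS) // len(query_words), len(LABELS) - 1)


def rank_results(query: str, pages: List[str],
                 rate: Callable[[str, str], int] = toy_rate) -> List[Tuple[str, str]]:
    """Rate every candidate page, then sort from the highest rating to the lowest."""
    rated = [(rate(query, page), page) for page in pages]
    rated.sort(key=lambda item: item[0], reverse=True)  # stable sort: ties keep input order
    return [(LABELS[rating], page) for rating, page in rated]


pages = [
    "car repair tips",
    "health benefits of red turnip",
    "turnip recipes",
]
ranked = rank_results("red turnip benefits", pages)
for label, page in ranked:
    print(label, "-", page)
```

Word overlap is only a placeholder. The point of a learned rater is that it can judge intent rather than matching keywords, so in practice `rate` would call the model instead.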

Using this model, Bing can understand complex and ambiguous concepts much better than ever before.

When a user entered “brewery germany from year 1080” as a query, it turned out that there is no known German brewery founded in that exact year.

But here, Bing can assume that the user was looking for a very old brewery in Germany, even though they may have forgotten or mistyped the year.

"Running such powerful models at web-search scale can be prohibitively expensive. To improve search relevance for all our users globally, wherever they are located and whatever language they speak, we implemented many GPU optimizations and we are running this model on state-of-the-art Azure GPU Virtual Machines," Bing said.