Researchers at Nvidia have created a deep learning AI that can learn by observing the actions of a human, and then complete tasks based on the observed patterns.

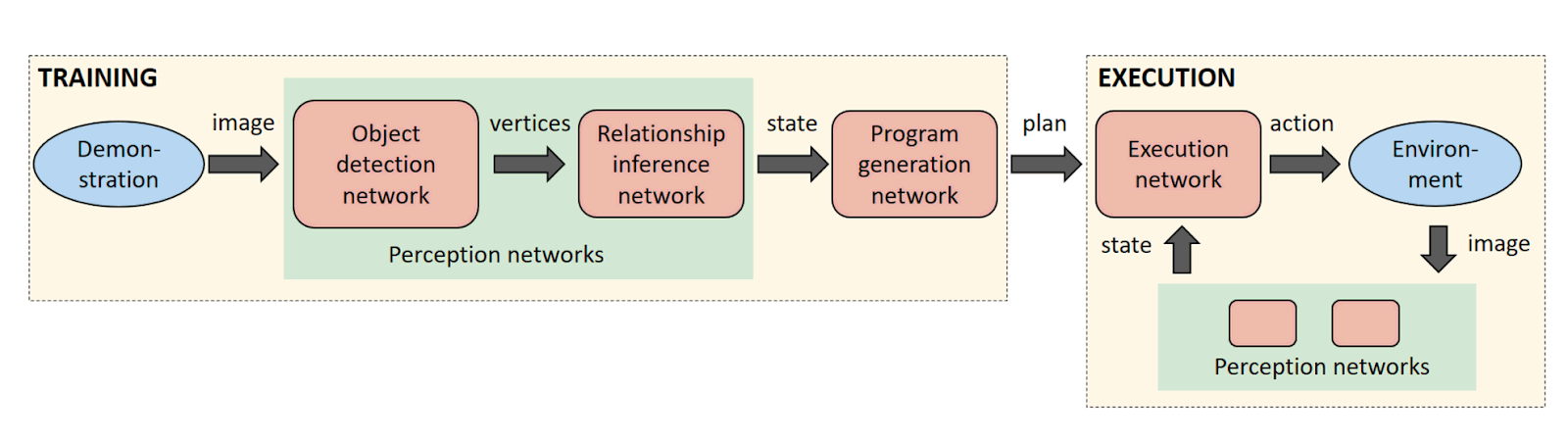

Described by Nvidia as the "first of its kind", the project, led by Stan Birchfield and Jonathan Tremblay, trained a sequence of neural networks to handle perception, program generation, and program execution.

As a result, the robot was able to learn a task from a single demonstration in the real world.

"The key to achieving this capability is leveraging the power of synthetic data to train the neural networks," said Nvidia.

"Current approaches to training neural networks require large amounts of labeled training data, which is a serious bottleneck in these systems. With synthetic data generation, an almost infinite amount of labeled training data can be produced with very little effort."
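To illustrate why synthetic data comes with labels "for free", here is a minimal Python sketch. It is not Nvidia's pipeline: the `Cuboid` class, the function names, and the value ranges are all hypothetical, and the image-rendering step is left out entirely. The point is only that a generator which places every object itself already knows each object's ground-truth position and size, so no human annotation is needed.

```python
import random
from dataclasses import dataclass


@dataclass
class Cuboid:
    """Ground-truth label for one block (hypothetical format)."""
    color: str
    center: tuple  # (x, y, z) in metres, in an assumed table frame
    size: float    # edge length in metres


def make_synthetic_scene(num_blocks, rng):
    """Place blocks at random table positions; labels are known by construction."""
    colors = ["red", "green", "blue", "yellow"]
    scene = []
    for _ in range(num_blocks):
        size = rng.uniform(0.03, 0.06)
        x = rng.uniform(-0.5, 0.5)
        y = rng.uniform(-0.5, 0.5)
        # A block resting on the table has its centre half an edge above it.
        scene.append(Cuboid(rng.choice(colors), (x, y, size / 2), size))
    return scene


def make_dataset(num_scenes, seed=0):
    """Generate as many labeled scenes as requested, reproducibly."""
    rng = random.Random(seed)
    return [make_synthetic_scene(rng.randint(1, 4), rng) for _ in range(num_scenes)]
```

Calling `make_dataset(100000)` costs only compute time, which is the sense in which the amount of labeled data is "almost infinite". A real system would additionally render each scene to an image under varied lighting and backgrounds.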

The goal of this project is to make it easier for humans to pass instructions to computers, reducing the time needed to train and program other AIs.

According to Nvidia, the deep learning method is designed to improve communication between humans and robots, allowing them to collaborate more easily.

Most neural networks are trained using a method similar to brute force, where humans "force" the AI to learn by feeding it a huge amount of data. This method is effective because machine learning algorithms rely on existing data to learn the patterns behind it. However, it isn’t always efficient, nor is it applicable in all situations.

Take an industrial factory robot, for example. Such robots could benefit from machine learning algorithms, but training them requires dedicated specialists to program the robot at a low level.

Nvidia is taking a different approach here.

Instead of using manually labeled training data, Nvidia prefers to use "synthetic data".

According to the company, this gives the neural network an almost infinite amount of data to work with, for much less effort.

"The perception network as described applies to any rigid real-world object that can be reasonably approximated by its 3D bounding cuboid," the researchers said. "Despite never observing a real image during training, the perception network reliably detects the bounding cuboids of objects in real images, even under severe occlusions."

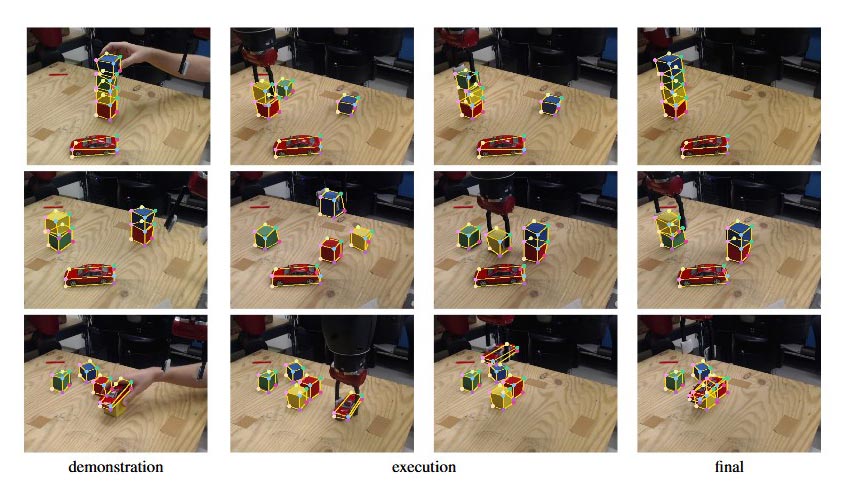

For the demonstration, the team trained object detectors on several colored blocks and a toy car. The system learned the physical relationships between blocks, such as whether they are stacked on top of one another or placed next to each other.
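As a rough illustration of what "stacked on top of" and "next to" can mean in terms of detected cuboids, here is a small geometric sketch in Python. It is an assumption-laden stand-in: the article describes the system learning these relationships with a neural network, whereas this sketch uses hand-written rules. The `Box` class, the `relation` function, and the tolerance `tol` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """A detected cube, reduced to its centre and edge length (hypothetical)."""
    name: str
    x: float
    y: float
    z: float     # height of the centre above the table, in metres
    size: float  # edge length in metres


def relation(a, b, tol=0.5):
    """Return 'on', 'next_to', or None describing a relative to b."""
    horiz = ((a.x - b.x) ** 2 + (a.y - b.y) ** 2) ** 0.5
    half = (a.size + b.size) / 2  # centre distance when two faces touch
    # a is on b: centres roughly aligned, and a's centre is `half` above b's.
    if horiz < tol * b.size and abs((a.z - b.z) - half) < tol * half:
        return "on"
    # a is next to b: same height, side faces touching or nearly touching.
    if abs(a.z - b.z) < tol * half and horiz < (1 + tol) * half:
        return "next_to"
    return None
```

For two 5 cm cubes, a green cube centred 5 cm above a red one would be reported as `"on"`, while a blue cube sitting 5.5 cm to the side at the same height would be reported as `"next_to"`.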

Then a human operator shows a pair of stacks of cubes to the robot.

The system then infers an appropriate program, and places the cubes in the correct order. Because it takes the state of the world into account during execution, the system is able to recover from mistakes in real time.
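The idea of inferring a program and then executing it while watching the state of the world can be sketched as follows. This is a simplified Python illustration, not the researchers' implementation: the goal format (stacks listed bottom to top), the `world` dictionary, and the `try_place` callback standing in for the robot's pick-and-place action are all assumptions made for the example.

```python
def plan_from_stacks(stacks):
    """Turn goal stacks (each listed bottom to top) into (upper, lower) steps."""
    steps = []
    for stack in stacks:
        for lower, upper in zip(stack, stack[1:]):
            steps.append((upper, lower))
    return steps


def execute(steps, world, try_place, max_attempts=3):
    """Run each step, re-checking the world state and retrying on failure.

    `world` maps each block to whatever it currently rests on.
    `try_place(world, upper, lower)` is a hypothetical robot action that
    may or may not succeed in updating the world.
    """
    log = []
    for upper, lower in steps:
        attempts = 0
        # Observe the world before every attempt instead of assuming success.
        while world.get(upper) != lower:
            if attempts == max_attempts:
                raise RuntimeError(f"could not place {upper} on {lower}")
            attempts += 1
            try_place(world, upper, lower)
            log.append(f"Place {upper} on {lower} (attempt {attempt_label(attempts)})")
    return log


def attempt_label(n):
    """Format the attempt counter for the human-readable log."""
    return str(n)
```

Because the loop checks `world` after every action, a dropped or misplaced cube simply shows up as an unmet condition and the step is tried again, which is the kind of recovery the article describes. Each step also reads as a plain sentence such as "Place green on red".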

Nvidia said this is the first time synthetic data has been combined with an image-centric approach on a robot.

The AI breaks the video feed down into basic geometric objects, records and learns from the human's actions, and then performs the demonstrated task on those objects. This way, the system can adapt to different video feeds, image quality, and environmental noise.

The team said it will continue to explore the use of synthetic training data for robotic manipulation in order to extend the capabilities of this method to other scenarios.

They have also detailed this research in a paper entitled "Synthetically Trained Neural Networks for Learning Human-Readable Plans from Real-World Demonstrations."