Computers have become far more advanced thanks to increasingly capable hardware, and Nvidia has been at the forefront of that business.

Nvidia, the American multinational technology company based in California, is known for developing graphics processing units and systems on chips, among other products. As one of the biggest chipmakers in the world, Nvidia is also a dominant supplier of both AI hardware and software.

This time, at SIGGRAPH 2023, the company plans to present 20 papers on generative AI and neural graphics.

The first that is readily applicable is an AI-based neural compression technique for material textures.

The Nvidia team said it can reduce texture storage requirements at a time when assets are of extremely high quality but also demand increasingly large amounts of disk space.

To achieve this goal, the team combined GPU texture compression with neural compression techniques.

In a blog post, Nvidia said:

"Neural texture compression [...] provides up to 16x more texture detail than previous texture formats without using additional GPU memory."

This is accomplished by enabling low-bitrate compression, unlocking two additional levels of detail (or 16× more texels) with storage requirements similar to those of commonly used texture compression techniques. Nvidia's main contributions are:

- An approach to texture compression that exploits redundancies spatially, across mipmap levels, and across different material channels.

- A low-cost decoder architecture that is optimized specifically for each material.

- A highly optimized implementation of Nvidia's compressor, with fused backpropagation.

By optimizing for reduced distortion at a low bitrate, Nvidia is able to compress two more levels of detail in the same storage as block-compressed textures.
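The "two more levels of detail" and "16× more texels" figures describe the same gain. As a minimal sketch, assuming the usual mipmap convention where each finer level doubles both width and height, the arithmetic works out as follows. The function names and the 1024-pixel base size are hypothetical and chosen only for illustration; they do not come from Nvidia's paper.

```python
def texel_count(width: int, height: int) -> int:
    """Number of texels in a single mip level of the given size."""
    return width * height


def texel_gain(extra_levels: int) -> int:
    """Texel multiplier from adding finer mip levels.

    Assumes each finer level doubles width and height,
    so the texel count grows 4x per level.
    """
    return 4 ** extra_levels


# Hypothetical base resolution, for illustration only.
base_size = 1024
finer_size = base_size * 2 ** 2  # two finer levels: 1024 -> 2048 -> 4096

print(texel_gain(2))
print(texel_count(finer_size, finer_size) // texel_count(base_size, base_size))
```

Both lines print 16: two extra levels at 4× each give 4 × 4 = 16 times as many texels.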

The resulting texture quality at such aggressively low bitrates is better than or comparable to recent image compression standards like AVIF and JPEG XL, which are not designed for real-time decompression with random access.

This architecture also enables real-time performance for random access, and can be integrated into material shader functions, such as filtering, to facilitate on-demand decompression, as well as practical per-material optimization with resolutions up to 8192 × 8192 (8k).

"Our compressor can process a 9-channel, 4k material texture set in 1-15 minutes on an NVIDIA RTX 4090 GPU, depending on the desired quality level," said Nvidia.

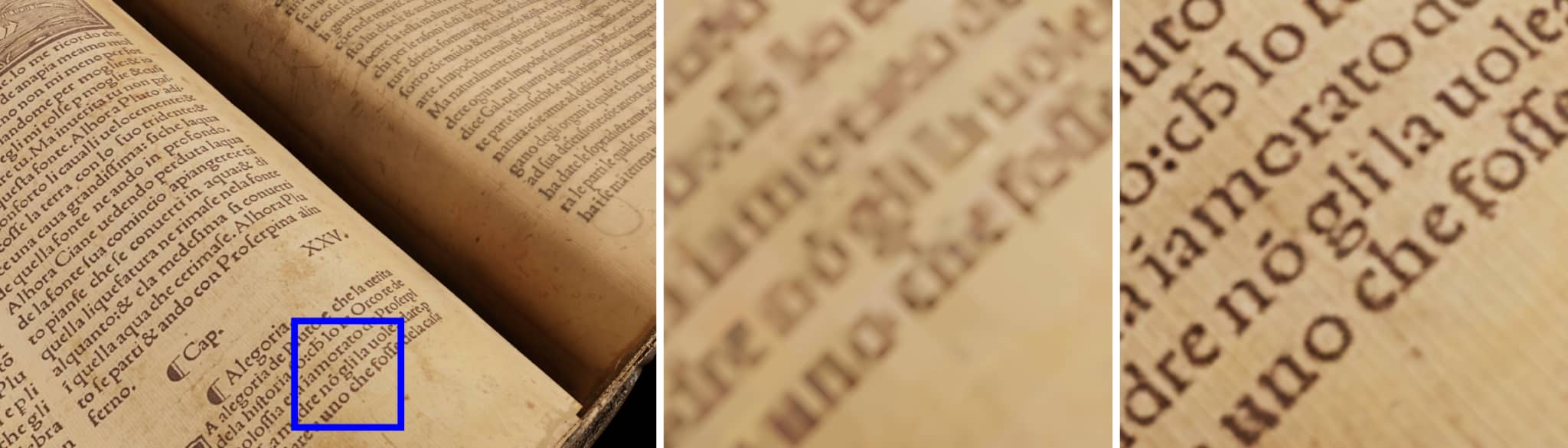

As seen below, Nvidia demonstrates how neural-compressed textures capture sharper detail than previous formats.

The level of realism can be seen in this neural-rendered teapot, which accurately represents the ceramic, the imperfect clear-coat glaze, fingerprints, smudges and even dust.

Long story short, this allows viewers to get very close to an object before losing significant texture detail.

Another SIGGRAPH 2023 paper worth noting is titled "Interactive Hair Simulation on the GPU Using ADMM."

The technology shows how AI-powered neural physics can enable an incredibly realistic simulation of tens of thousands of hair strands in real time.

Nvidia trained this neural network by letting it analyze how hair is supposed to move, and how the strands should react and behave when they're together.

This allows the neural network to predict how hair would move in the real world.

Humans have an average of 100,000 hairs on their heads, with each reacting dynamically to motion and the surrounding environment. Traditionally, creators have used physics formulas to calculate hair movement, simplifying or approximating its motion based on the resources available.

This requires a lot of time and resources.

This is why virtual characters in a big-budget Hollywood film have hair that looks far more detailed than that of real-time video game avatars.

"The team’s novel approach for accurate simulation of full-scale hair is specifically optimized for modern GPUs. It offers significant performance leaps compared to state-of-the-art, CPU-based solvers, reducing simulation times from multiple days to merely hours — while also boosting the quality of hair simulations possible in real time. This technique finally enables both accurate and interactive physically based hair grooming," said Nvidia.

This research should complement Nvidia's other work, which shows how AI models for textures, materials and volumes "can deliver film-quality, photorealistic visuals in real time for video games and digital twins."

In particular, this can help bring the metaverse, or Nvidia's 'Omniverse' experience, closer to reality.

Nvidia has been in business for many years, and its graphics research has helped bring film-like graphics to games.

And this time, the company's papers also highlight the power of generative AI, which has taken the world by storm since OpenAI introduced ChatGPT.

Nvidia, however, focuses more on showing off traditional 3D graphics, which, according to Aaron Lefohn, vice president of graphics research at Nvidia, should enable developers and artists to bring their ideas to life, whether still or moving, in 2D or 3D, hyper-realistic or fantastical.

The papers from Nvidia come from collaborations on generative AI and neural graphics with over a dozen universities in the U.S., Europe and Israel.

Besides the novel compression technique, the 'not a hair out of place' hair simulation, and the "personalized" generative AI, the papers also cover how AI models can become inverse rendering tools that transform still images into 3D objects; neural physics models that use AI to simulate complex 3D elements with realism; and neural rendering models that unlock new capabilities for generating real-time, AI-powered visual details.