There is a limit to everything, and the same goes for AI.

Although the field is still under heavy research and development, researchers suggest that conversational AI has "hit a ceiling". Without novel approaches or greater challenges, progress in AI natural language processing (NLP) may soon slow down.

This is why researchers from Facebook AI Research, Google's DeepMind, the University of Washington, and New York University introduced 'SuperGLUE'.

According to Facebook:

SuperGLUE consists of a series of benchmark tasks to measure the performance of modern, high performance language-understanding AI.

Using Google's BERT as a model performance baseline, SuperGLUE can help fine-tune AI models for a wide range of tasks to achieve performance above a human baseline average.

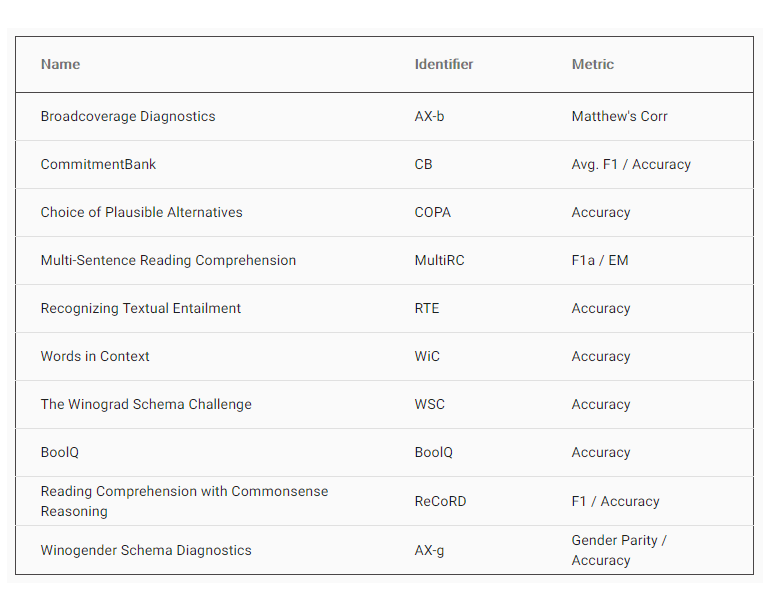

To do this, the benchmark consists of eight tasks. Among them are the Choice of Plausible Alternatives (COPA) test, a causal reasoning task in which a system is given a premise sentence and must determine either the cause or the effect of the premise from two possible choices, and a Recognizing Textual Entailment (RTE) task, in which an AI must decide whether the meaning of one text can be inferred from another.
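The COPA setup described above can be sketched in a few lines of Python. This is only an illustration of the task format and of accuracy scoring, not the benchmark's own code: the `CopaItem` class, its field names, the two example items, and the `always_first` baseline are all made up for this sketch.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class CopaItem:
    """One COPA-style question (hypothetical field names)."""
    premise: str
    choice1: str
    choice2: str
    question: str  # "cause" or "effect"
    label: int     # 0 means choice1 is correct, 1 means choice2


def accuracy(items: Sequence[CopaItem],
             predict: Callable[[CopaItem], int]) -> float:
    """Fraction of items for which the predictor picks the labeled choice."""
    if not items:
        raise ValueError("need at least one item to compute accuracy")
    correct = sum(1 for item in items if predict(item) == item.label)
    return correct / len(items)


def always_first(item: CopaItem) -> int:
    """Trivial baseline that ignores the text and always picks choice1."""
    return 0


# Invented examples for illustration only; they are not taken from COPA.
ITEMS = [
    CopaItem("The ground was wet this morning.",
             "It rained overnight.",
             "The sun was shining.",
             question="cause", label=0),
    CopaItem("She forgot her umbrella.",
             "She bought a new hat.",
             "She got soaked in the storm.",
             question="effect", label=1),
]

if __name__ == "__main__":
    print(f"always_first accuracy: {accuracy(ITEMS, always_first):.2f}")
```

Because every item has exactly two choices, a predictor that guesses blindly should land near 50% on a balanced set, which is the floor against which model and human accuracy figures are read.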

After performing its benchmark, SuperGLUE provides a single-number metric to summarize an AI's ability to handle various given NLP tasks.
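As a rough sketch of how a single-number summary could be produced, the snippet below averages per-task scores. It assumes an unweighted mean over tasks, with tasks that report several metrics averaged first; the article does not spell out the aggregation, so treat that as an assumption. The function name `overall_score`, the task names, and the numbers are placeholders, not real results.

```python
from statistics import mean
from typing import Dict, List


def overall_score(task_metrics: Dict[str, List[float]]) -> float:
    """Collapse per-task metrics into one number.

    Assumption: each task's metrics are averaged first, then the
    per-task scores are averaged with equal weight.
    """
    if not task_metrics:
        raise ValueError("need at least one task")
    per_task = []
    for task, metrics in task_metrics.items():
        if not metrics:
            raise ValueError(f"task {task!r} has no metrics")
        per_task.append(mean(metrics))
    return mean(per_task)


# Placeholder scores on a 0-100 scale; not actual benchmark results.
EXAMPLE_SCORES = {
    "task_a": [80.0],        # one metric, e.g. accuracy
    "task_b": [60.0, 70.0],  # two metrics, averaged to 65.0
    "task_c": [90.0],
}

if __name__ == "__main__":
    print(f"overall: {overall_score(EXAMPLE_SCORES):.1f}")
```

Averaging within a task first keeps a task that reports two metrics from counting twice in the final number.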

According to Facebook AI, humans can obtain 100% accuracy on COPA while Google's BERT achieved only 74%, suggesting that there is a lot of room for NLP improvement.

BERT, or Bidirectional Encoder Representations from Transformers, which SuperGLUE uses as its baseline, is a language representation model designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers.

SuperGLUE was preceded by the General Language Understanding Evaluation (GLUE) benchmark.

SuperGLUE is designed to be more difficult than GLUE, which assigns a model a numerical score based on its performance on nine English sentence-understanding tasks for natural language understanding (NLU) systems, such as the Stanford Sentiment Treebank (SST-2), used for deriving sentiment from a data set of online movie reviews.

SuperGLUE is "styled after GLUE with a new set of more difficult language understanding tasks, a software toolkit, and a public leaderboard," wrote the researchers in their paper.

It also contains Winogender, a gender bias detection tool.

"Current question answering systems are focused on trivia-type questions, such as whether jellyfish have a brain," said Facebook AI. With SuperGLUE, the challenge goes further "by requiring machines to elaborate with in-depth answers to open-ended questions, such as 'How do jellyfish function without a brain?'"

To help researchers create robust language-understanding AI, New York University, which is part of the team, also released an updated version of Jiant, a general-purpose text understanding toolkit configured to work with HuggingFace PyTorch implementations of BERT and OpenAI's GPT, as well as the GLUE and SuperGLUE benchmarks.