Privacy can be viewed in many ways, and in the age of the internet and mobile devices, the topic has only become more difficult.

This is because the internet is a public space, and the only thing that differentiates the owner of the data from a stranger is the former's knowledge of the login credentials. But since data on the internet is hosted by other entities, those entities may also be able to access user data.

Apple operates iCloud, where Apple users can upload their photos and videos to the cloud for safekeeping and convenience.

While Apple promises never to look at what users upload to the cloud, back in 2021 the company announced a controversial plan to introduce child safety features, which included a system to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, a Communication Safety option that blurs sexually explicit photos in the Messages app, and child exploitation resources for Siri.

While Communication Safety launched back in 2021, and the Siri resources have also been made available, CSAM detection never ended up launching.

And there is a good reason for that.


Apple announced that it had abandoned the controversial plan to detect known CSAM stored in iCloud Photos, because it could cause more problems than it solves.

"We have further decided to not move forward with our previously proposed CSAM detection tool for iCloud Photos."

"Children can be protected without companies combing through personal data, and we will continue working with governments, child advocates, and other companies to help protect young people, preserve their right to privacy, and make the internet a safer place for children and for us all."

Apple has since explained why, in 2022, it cancelled its plan to scan iCloud Photos libraries for child sexual abuse material and instead went in the opposite direction by enabling users to encrypt pictures stored in iCloud Photos.

This time, it gave more details.

According to Erik Neuenschwander, Apple's director of user privacy and child safety:

"Scanning every user's privately stored iCloud data would create new threat vectors for data thieves to find and exploit."

"It would also inject the potential for a slippery slope of unintended consequences. Scanning for one type of content, for instance, opens the door for bulk surveillance and could create a desire to search other encrypted messaging systems across content types."

"Scanning systems are also not foolproof and there is documented evidence from other platforms that innocent parties have been swept into dystopian dragnets that have made them victims when they have done nothing more than share perfectly normal and appropriate pictures of their babies."

After collaborating with an array of privacy and security researchers, digital rights groups, and child safety advocates, the company concluded that it could not proceed with development of a CSAM-scanning mechanism, even one built specifically to preserve privacy.

Apple initially planned to introduce the CSAM detection tool in an update to iOS 15 and iPadOS 15 by the end of 2021.

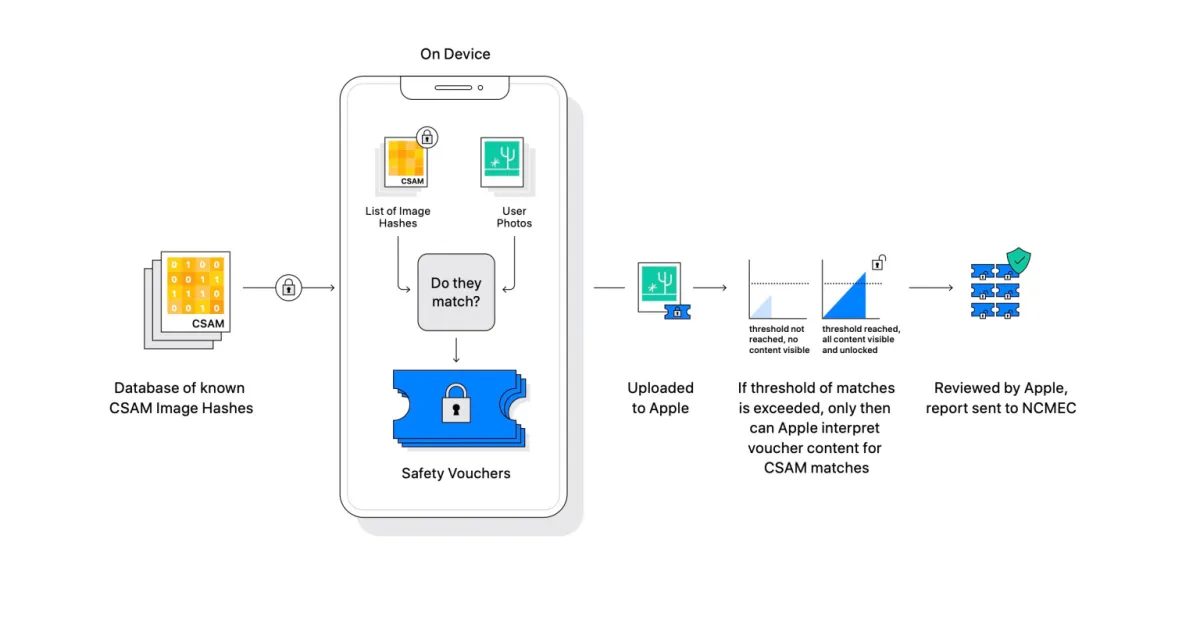

The proposed CSAM detection system, which was to use neuralMatch, was "designed with user privacy in mind." It would have performed "on-device matching using a database of known CSAM image hashes" from child safety organizations, which Apple would transform into an "unreadable set of hashes that is securely stored on users' devices."

Apple planned to report iCloud accounts with known CSAM image hashes to the National Center for Missing and Exploited Children (NCMEC), a non-profit organization that works in collaboration with U.S. law enforcement agencies.

Apple said there would be a "threshold" that would ensure "less than a one in one trillion chance per year" of an account being incorrectly flagged by the system, plus a manual review of flagged accounts by a human.
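To make the idea of hash matching with a threshold more concrete, here is a minimal sketch in Python. It is not Apple's implementation. It assumes a plain SHA-256 hash as a stand-in (which only matches byte-identical files, unlike the image hashing the proposal described), a readable set of known hashes (the proposal described an unreadable one), and a hypothetical threshold value, since no actual number is given here. The function names are also made up for illustration.

```python
import hashlib

# Hypothetical threshold for illustration only; the real value is not stated here.
MATCH_THRESHOLD = 3


def image_hash(image_bytes: bytes) -> str:
    """Stand-in hash: SHA-256 only matches byte-identical files."""
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(images, known_hashes) -> int:
    """Count how many images in a library hash to an entry in the known set."""
    return sum(1 for img in images if image_hash(img) in known_hashes)


def should_flag_for_review(images, known_hashes, threshold=MATCH_THRESHOLD) -> bool:
    """Flag an account only once the number of matches reaches the threshold."""
    return count_matches(images, known_hashes) >= threshold


# Usage with placeholder byte strings standing in for image files.
known = {image_hash(b"known-1"), image_hash(b"known-2"), image_hash(b"known-3")}
library = [b"holiday-photo", b"known-1", b"known-2"]

print(count_matches(library, known))           # two matches
print(should_flag_for_review(library, known))  # below the threshold of 3
```

The point of the threshold is that a single match, which could be a false positive, is never enough on its own to flag an account. In the proposal, a human review would then follow for any account that crossed it.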

While the goal was noble, the system itself was deeply flawed.

Apple's plans were criticized by a wide range of individuals and organizations, including security researchers, the Electronic Frontier Foundation (EFF), politicians, policy groups, university researchers, and even some Apple employees.

Some critics argued that the feature would have created a "backdoor" into devices, which governments or law enforcement agencies could use to surveil users. Another concern was false positives, including the possibility of someone intentionally adding CSAM imagery to another person's iCloud account to get their account flagged.

Declining to scan iCloud images for CSAM does not mean Apple has given up on fighting child exploitation. The company has continued to improve the Communication Safety feature that debuted in 2021.

This feature lets an iPhone detect when a child receives or sends sexually explicit photos through the Messages app, and then warns the user.

This process happens entirely on the handset, not on a remote server, and the messages remain encrypted.