Google's parent company, Alphabet, has been all-in on the AI business for years.

The company has been heavily developing the technology, improving it as it goes and implementing it in its various products.

But generative AI is something different: its capacity and capability are on a whole different level.

The technology was first popularized by OpenAI's ChatGPT, which sent many competitors into a frenzy. Google has what it calls 'Bard', its answer to its rival's generative AI chatbot.

While Google is pushing Bard to more and more users, and hopes to gain even more by continuously tweaking and improving the AI, it takes a different view when it comes to its own employees using the AI chatbot.

Instead, the company advises its employees to be careful about what they say to these AI bots, even to Bard.

The reason is privacy.

Alphabet has warned its employees not to share confidential information with AI chatbots, as this information is subsequently stored by the companies that own the technology.

This is because Bard, ChatGPT, and every other generative AI product are trained using data, and that data can include user interactions with the AI products.

As these bots are based on large language models (LLMs) that are in constant training, the companies behind these AI chatbots are required to store user interactions in one way or another.

The companies behind the chatbots may have to label the data, or reuse or tweak the information, to feed it back into the system.

To make this possible, user interactions with the chatbots have to be stored, and could be clearly visible in plain text to the companies' employees.

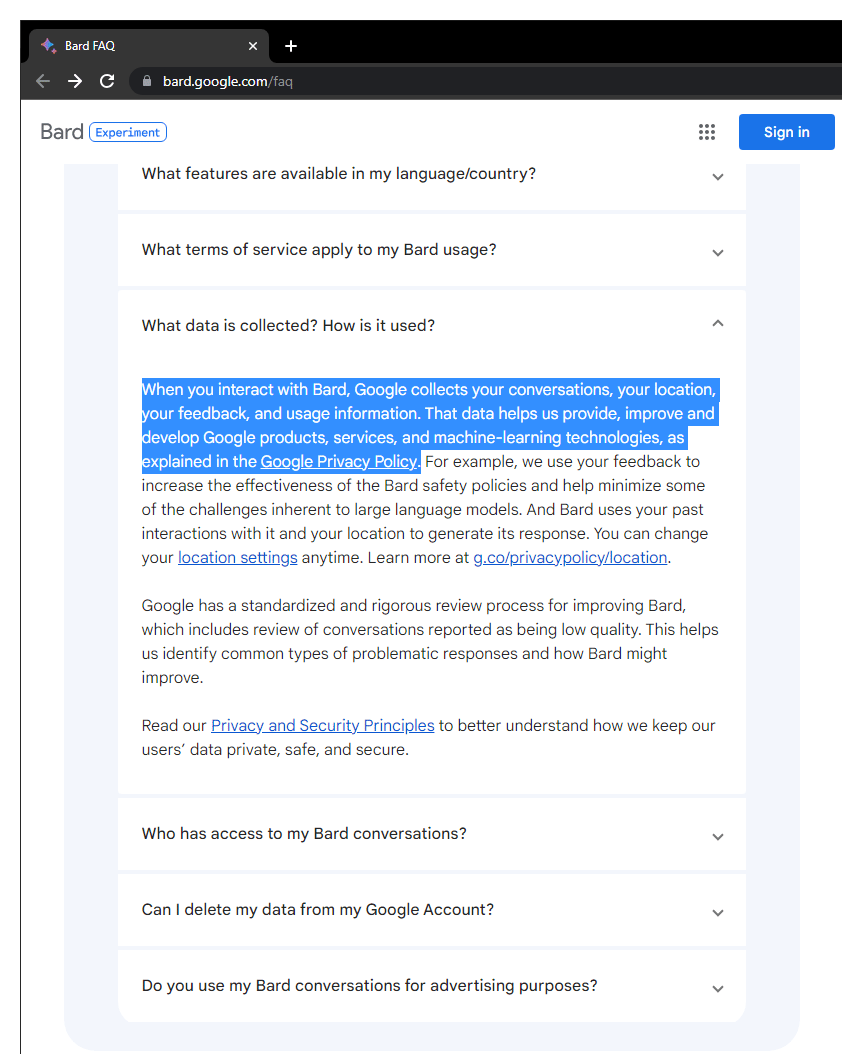

"When you interact with Bard, Google collects your conversations, your location, your feedback, and usage information. That data helps us provide, improve and develop Google products, services, and machine-learning technologies, as explained in the Google Privacy Policy," Google said about Google Bard, in its FAQs.

Google also said that it selects a subset of conversations as samples to be reviewed by trained reviewers, and that these samples can be kept for three years.

The company even went as far as saying that people should "not include information that can be used to identify you or others in your Bard conversations."

Repurposing user interactions as a dataset for the AI to learn from allows the companies to better serve the AI's users. But this also results in AI chatbots sharing information submitted by one user in their conversations with other users, a research paper found.

Google knows that its employees who use Bard, ChatGPT, or any other generative AI chatbot may not realize that the conversations they have with their newfound AI buddy won't just stay in the chat.

The company said all this over fears of leaks.

Google and other tech companies, as well as companies in other industries worldwide, are utilizing generative AI, but are also looking for ways to protect themselves from employees giving away secrets to chatbots.

Because the statement came from Alphabet itself, it certainly serves as advice to anyone who uses AI chatbots.

This should also highlight the importance of online privacy, and how it's never a good idea to share private or confidential information anywhere on the internet.

As for ChatGPT, its creator, OpenAI, said that its human AI trainers do review ChatGPT conversations: "We review conversations to improve our systems and to ensure the content complies with our policies and safety requirements."