Artificial Intelligence is intelligence demonstrated by machines. Researchers are racing to create smarter and smarter AIs, and the road is never easy.

So far, how intelligent an AI is has depended on the size and quality of its data set, the effectiveness and efficiency of its algorithms, and its methods of learning. Each generation of AI introduces advances, and so does 'Gato'.

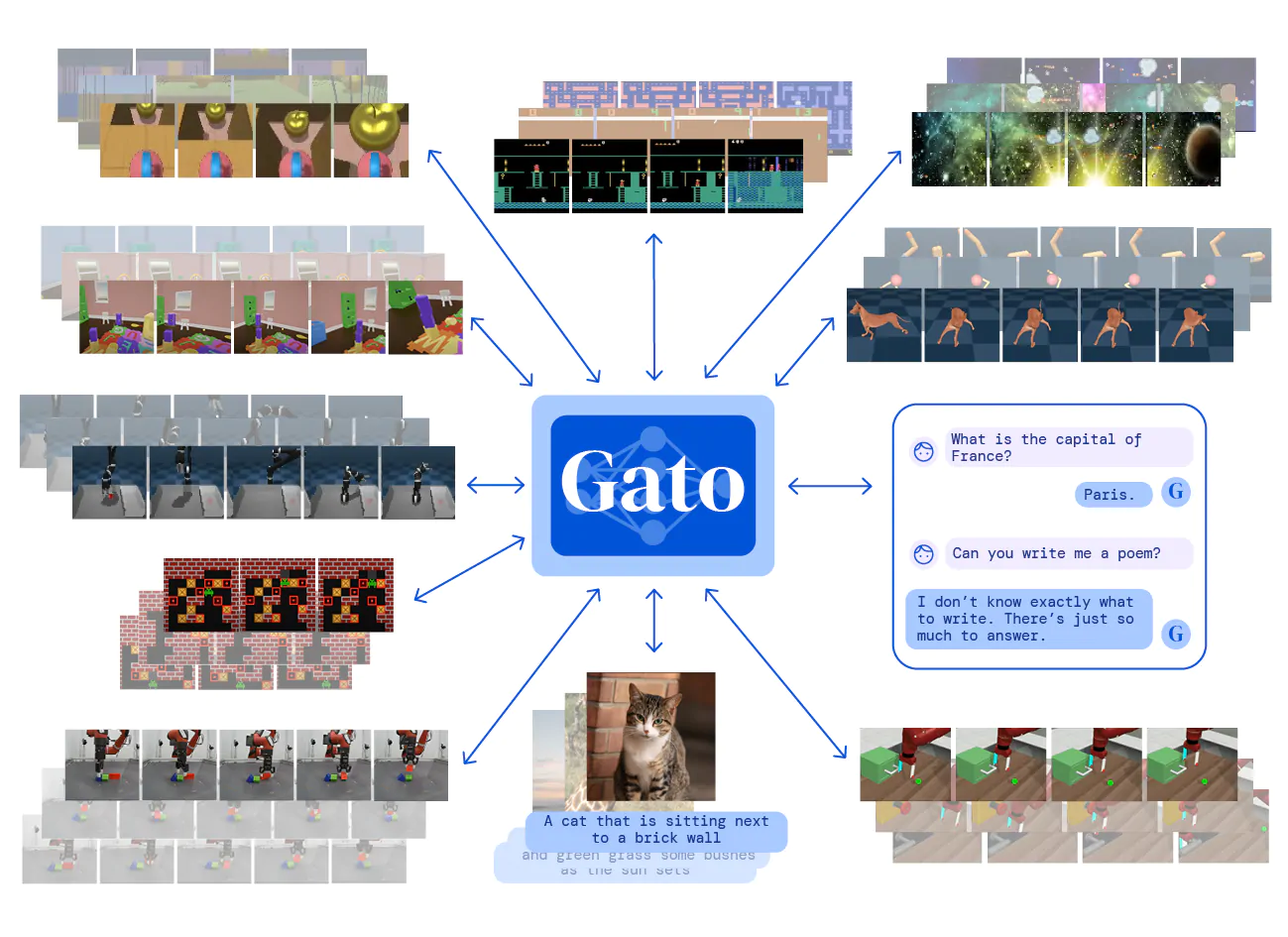

Gato is an AI created by researchers from Google's DeepMind, capable of performing hundreds of different tasks ranging from controlling a robot arm to writing poetry.

For this reason, the company dubbed the AI a "generalist" system.

The AI is so advanced that, according to Dr. Nando de Freitas, a lead researcher at DeepMind, humanity may be on the verge of achieving Artificial General Intelligence (AGI).

De Freitas made the bold statement on Twitter, suggesting that the race towards general intelligence is over.

"The Game is Over!" de Freitas said.

Responding to an article from The Next Web in a Twitter thread on May 14, 2022, de Freitas (@NandoDF) wrote:

"Solving these scaling challenges is what will deliver AGI. Research focused on these problems, eg S4 for greater memory, is needed. Philosophy about symbols isn't. Symbols are tools in the world and big nets have no issue creating them and manipulating them.

"Finally and importantly, OpenAI co-founder Ilya Sutskever is right.

"Rich Sutton is right too, but the AI lesson ain't bitter but rather sweet. I learned it from Google researcher Geoffrey Hinton a decade ago. Geoff predicted what was predictable with uncanny clarity."

De Freitas's opinion is based on how the AI performs and how it approaches problems.

According to DeepMind's announcement on Twitter (@DeepMind, May 12, 2022):

"Gato, a scalable generalist agent that uses a single transformer with exactly the same weights to play Atari, follow text instructions, caption images, chat with people, control a real robot arm, and more: https://t.co/9Q7WsRBmIC

Paper: https://t.co/ecHZqzCSAm"

In other words, Gato is a multi-modal, multi-task AI system that acts as a multi-embodiment generalist policy.

The same network with the same weights can play Atari, caption images, chat, stack blocks with a real robot arm and much more, deciding based on its context whether to output text, joint torques, button presses, or other tokens.

The design was inspired by progress in large-scale language modelling.

DeepMind researchers applied a similar approach to build a single generalist agent that goes beyond text outputs.

As a result, one single AI can do many things, even when those things aren't at all related.
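The idea of one network emitting text, button presses, or joint torques as "tokens" can be sketched in a few lines. What follows is a minimal illustration, not DeepMind's implementation: it assumes a single flat vocabulary split into disjoint ID ranges, one per output type, and it does not model the network itself. The vocabulary contents, the range boundaries, the number of torque bins, and the function names `encode_torque` and `decode_token` are all hypothetical choices made for this example.

```python
# Hypothetical shared vocabulary, invented for illustration only.
TEXT_VOCAB = ["<pad>", "hello", "world", "stack", "the", "red", "block"]
BUTTONS = ["NOOP", "FIRE", "LEFT", "RIGHT"]
NUM_TORQUE_BINS = 16  # continuous torques are discretized into this many bins

# Each output type owns a disjoint range of token IDs.
TEXT_START = 0
BUTTON_START = TEXT_START + len(TEXT_VOCAB)    # 7
TORQUE_START = BUTTON_START + len(BUTTONS)     # 11
VOCAB_SIZE = TORQUE_START + NUM_TORQUE_BINS    # 27


def encode_torque(value, low=-1.0, high=1.0):
    """Map a continuous torque to a token ID by uniform binning."""
    clipped = min(max(value, low), high)
    fraction = (clipped - low) / (high - low)
    # The top edge of the range falls into the last bin.
    bin_index = min(int(fraction * NUM_TORQUE_BINS), NUM_TORQUE_BINS - 1)
    return TORQUE_START + bin_index


def decode_token(token_id, low=-1.0, high=1.0):
    """Turn a token ID back into a (kind, value) pair based on its range."""
    if not 0 <= token_id < VOCAB_SIZE:
        raise ValueError(f"token ID {token_id} is outside the vocabulary")
    if token_id < BUTTON_START:
        return ("text", TEXT_VOCAB[token_id])
    if token_id < TORQUE_START:
        return ("button", BUTTONS[token_id - BUTTON_START])
    # Torque tokens decode to the centre of their bin.
    bin_index = token_id - TORQUE_START
    width = (high - low) / NUM_TORQUE_BINS
    return ("torque", low + (bin_index + 0.5) * width)


# The same decoder handles every kind of output token.
print(decode_token(1))                   # ('text', 'hello')
print(decode_token(8))                   # ('button', 'FIRE')
print(decode_token(encode_torque(0.0)))  # ('torque', 0.0625)
```

In this sketch the routing lives entirely in the token ID: a model trained on mixed sequences only has to predict the next ID, and whatever consumes the output (a chat window, a game emulator, a robot controller) interprets the range it cares about.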

But the status of this AI is debatable, in the sense that it doesn't really try to mimic humans, which is what an AGI is expected to be capable of.

For example, while Gato is capable of cross-modal feats never seen before in AI, it is still unreliable in a number of ways and shows lapses in comprehension that shouldn't be found in sentient entities.

While it's common for AIs to make errors the same way that humans do, other researchers argue that Gato is not an AGI, because it's simply a set of pre-trained, narrow AI models bundled neatly into one entity.

They argue Gato isn't an AGI because it still cannot learn new things without prior training, something researchers expect an AGI to be able to do.

As a matter of fact, the accompanying research paper has nothing to indicate that Gato is an AGI.

This is why some other researchers concluded that Gato is an 'Alt Intelligence': a hyper-scaled AI model that can be perceived as an AGI without being one.

Of course, Nando de Freitas defends his hypothesis.

According to de Freitas, researchers cannot simply make models bigger and hope for success.

In the past, researchers have scaled AI using more powerful hardware, better algorithms, and more data. While this approach has produced great successes, many efforts ran into roadblocks.

In essence, DeepMind's Gato is a feat like OpenAI’s DALL·E, which is an AI model capable of generating bespoke images from descriptions.

But all of them have flaws that keep them far from AGI, and they are still considered ANI (Artificial Narrow Intelligence). They are all biased and can produce inconsistent outputs. They all have weaknesses that shouldn't be found in an AGI.

Most importantly, AGI has never been created, and humanity has yet to figure out the secret ingredient needed to create it.

The AI field is complex, full of black boxes, and lacks a single, trainable solution.

Regardless, each new generation of AI is capable of demonstrating breakthroughs in humanity's ability to manipulate computers.

If AGI is certain, it's only a matter of time before humanity finally understands it.
