Artificial intelligence (AI) is intelligence demonstrated by machines, as opposed to the natural intelligence displayed by living things. OpenAI is among the pioneers in the field.

The research laboratory previously created GPT-3, an AI capable of producing text rich with context, nuance and even humor.

This successor to GPT-2 is more than 100 times larger, and it changed how people perceive AI, becoming the most hyped text-generation model of its time.

Among other things, OpenAI has also created an AI capable of defeating the world's best Dota 2 players, and Codex, an AI capable of translating natural language into programming code.

In addition to these, OpenAI has what it calls 'DALL·E', a portmanteau of the iconic artist Salvador Dalí and the robot WALL-E from the computer-animated science fiction film of the same name.

The AI is meant to be the 'GPT' for images.

This time, OpenAI has gone beyond the original DALL·E by introducing 'DALL·E 2'.

This updated version of its text-to-image generation model has a much higher resolution and lower latency than the original system.

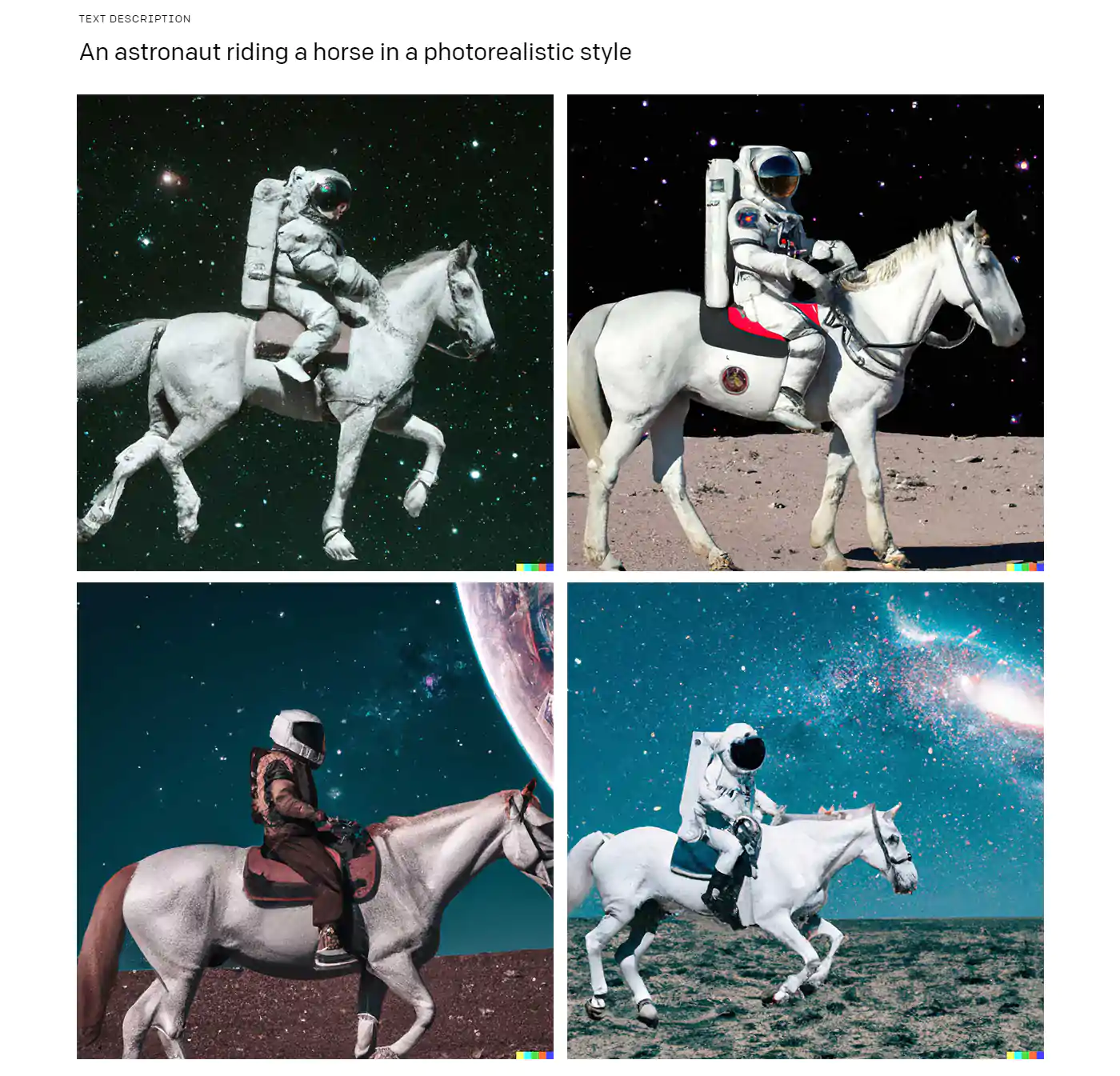

DALL·E 2, like the original DALL·E, can create new images from written descriptions.

The model is able to create depictions of anything, including an astronaut riding a horse in space.

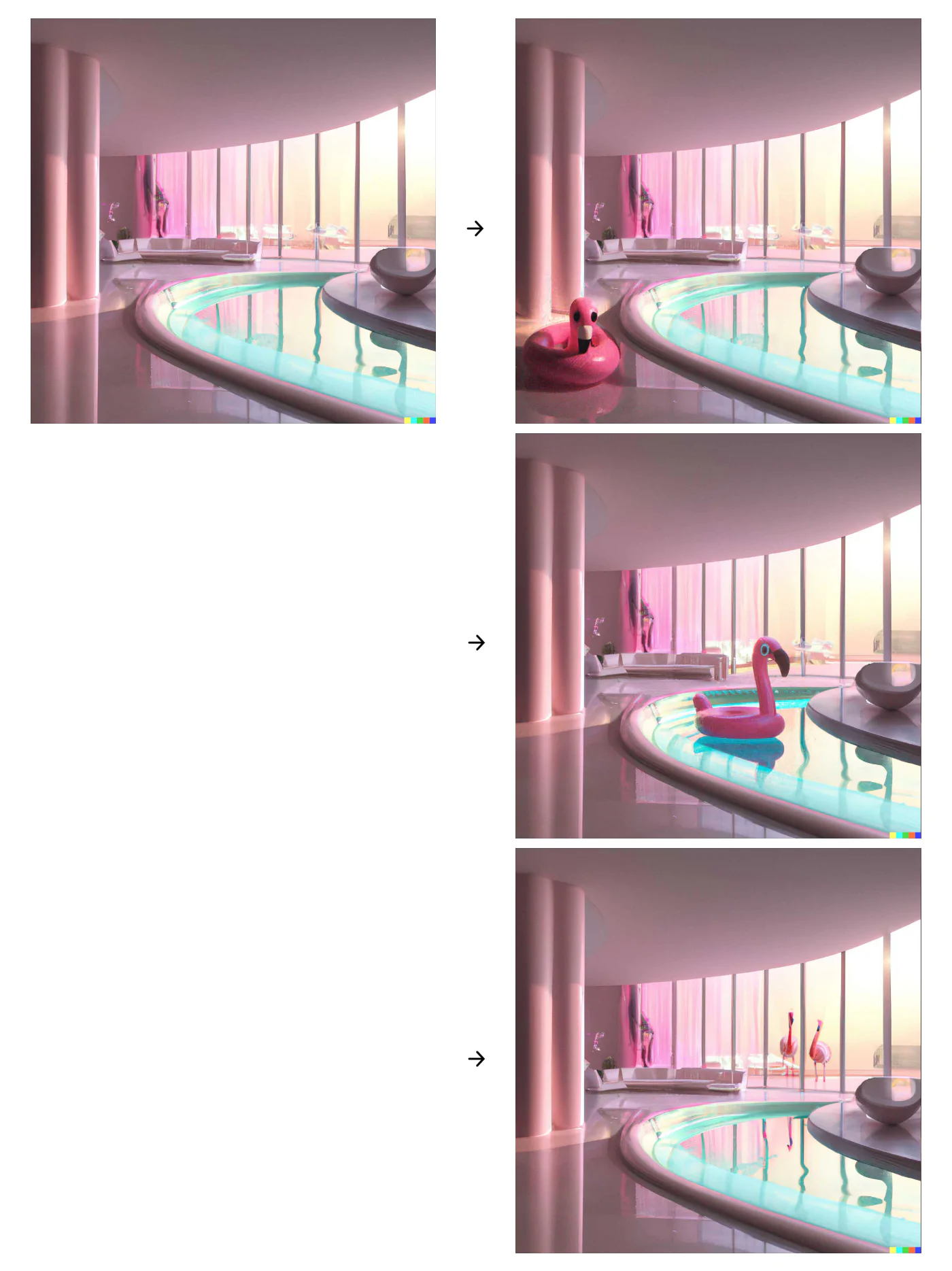

But as the successor to DALL·E, it has been given a few more capabilities by OpenAI, such as the ability to edit an existing picture.

This is possible because DALL·E 2 also includes an 'inpainting' feature that applies DALL·E's text-to-image capabilities at a more granular level, as OpenAI explained.

With it, the updated model can start with an existing picture, in which users can select parts of the image and edit them.

In one example, users could ask DALL·E 2 to create a flamingo and put it somewhere inside an existing picture.

Likewise, drawing on what it learned from OpenAI's huge data set, DALL·E 2 is able to remove an object from an image while taking into account how that would affect details such as the object's shadow, reflections, and textures.

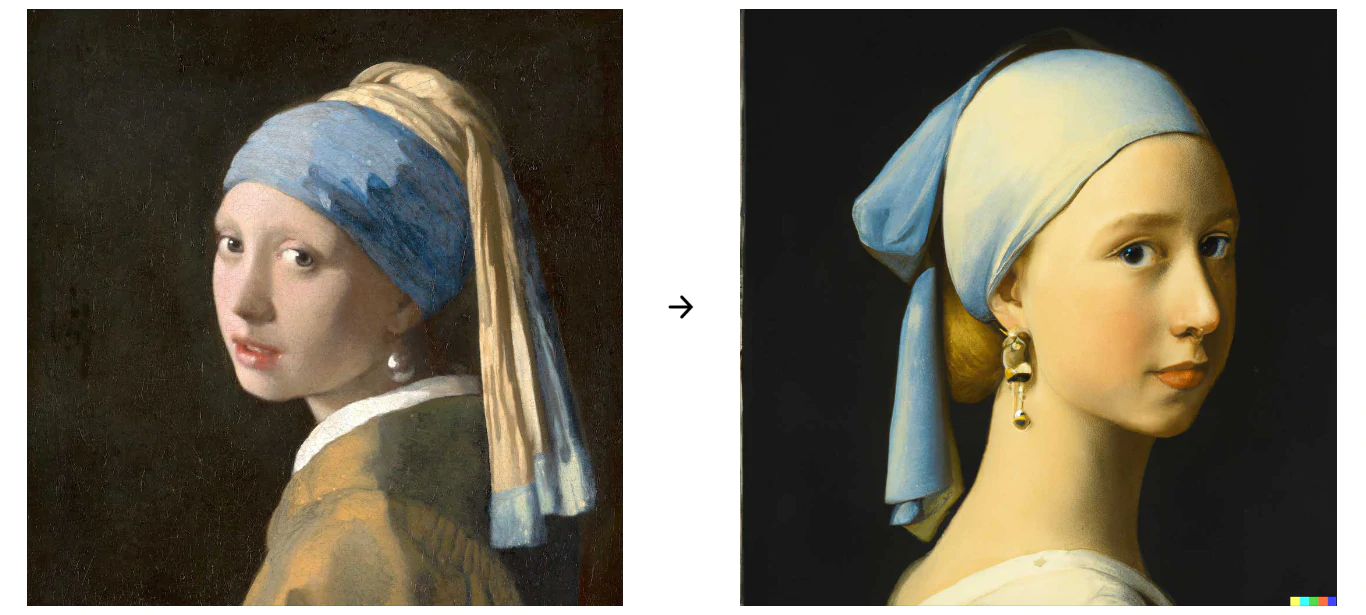
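
The core idea of a region-limited edit can be sketched in a few lines. This is a toy illustration only, not OpenAI's implementation: the `composite` function, the tiny grayscale grids, and the hand-written `generated` pixels are all hypothetical. A real inpainting model would also generate the new pixels so that they match the surrounding shadows and textures.

```python
# Toy sketch of a masked edit: only pixels where the mask is 1 are replaced.
# All names and values here are illustrative assumptions.

def composite(original, generated, mask):
    """Keep original pixels where mask is 0; take generated pixels where mask is 1."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]

# A 3x3 grayscale "image", a stand-in for model output, and the user's selection.
original = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
generated = [[99, 99, 99], [99, 99, 99], [99, 99, 99]]
mask = [[0, 0, 0], [0, 1, 1], [0, 1, 1]]  # 1 marks the region the user selected

edited = composite(original, generated, mask)
```

Everything outside the selected region stays untouched, which is what lets an edit such as adding a flamingo leave the rest of the picture intact.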

The second prominent DALL·E 2 feature is variations.

It allows users to upload an image and then have the model create variations of it. It's also possible to blend two existing images together to generate a third, different picture that contains elements of both.
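
OpenAI's paper on DALL·E 2 describes blending as moving between the two images' CLIP embeddings with spherical interpolation, then decoding the result into a picture. The sketch below shows only the interpolation step, under stated assumptions: the `slerp` helper and the two-dimensional vectors are hypothetical stand-ins, and real embeddings have hundreds of dimensions.

```python
import math

def slerp(a, b, t):
    """Spherically interpolate from vector a (t=0) to vector b (t=1)."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding error
    omega = math.acos(cos_omega)                # angle between the two vectors
    s = math.sin(omega)
    if abs(s) < 1e-8:
        # Vectors are (anti)parallel: fall back to plain linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    weight_a = math.sin((1 - t) * omega) / s
    weight_b = math.sin(t * omega) / s
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]

# Made-up embeddings for two images; t=0.5 is an even blend of both.
image_a = [1.0, 0.0]
image_b = [0.0, 1.0]
mid = slerp(image_a, image_b, 0.5)
```

Varying `t` between 0 and 1 would give a sequence of intermediate embeddings, each of which a decoder could turn into a picture that leans more toward one source image or the other.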

To accomplish this, OpenAI built DALL·E 2 on CLIP, a computer vision system it created that learns how images and their text descriptions relate. This is a departure from the original DALL·E, which was based on Generative Pre-trained Transformer 3 (GPT-3), an autoregressive language model that uses deep learning to produce human-like text, adapted to generate images instead.

CLIP was originally designed to look at images and summarize the content of what it saw in human language, similar to how humans do. OpenAI then created an inverted version of that model, called unCLIP, which begins with the summary and works backward to recreate the image.
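
In practice, CLIP relates images and text by placing both in a shared embedding space and scoring how closely they match, typically with cosine similarity. The following is a minimal sketch of that matching step, assuming made-up three-dimensional embeddings; the `best_caption` helper and all vector values are hypothetical, and no real CLIP model is involved.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)

def best_caption(image_embedding, caption_embeddings):
    """Return the caption whose embedding points most nearly the same way as the image's."""
    return max(
        caption_embeddings,
        key=lambda caption: cosine(image_embedding, caption_embeddings[caption]),
    )

# Hypothetical embeddings; a trained model would produce these from pixels and text.
image_embedding = [0.9, 0.1, 0.2]
caption_embeddings = {
    "an astronaut riding a horse": [0.8, 0.2, 0.1],
    "a bowl of soup": [0.1, 0.9, 0.3],
    "a flamingo by a pool": [0.2, 0.1, 0.9],
}

match = best_caption(image_embedding, caption_embeddings)
```

unCLIP runs in the opposite direction: rather than picking the text that fits an image, it starts from the text side and generates an image whose embedding fits.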

But just like any other AI that came before it, DALL·E 2 comes with biases.

Not surprisingly, results generated by the AI model can sometimes be NSFW, biased towards white men, or sexualizing and/or degrading towards women.

DALL·E 2 shows how humans can progress in creating increasingly capable AIs as long as the resources are available.

But the biases here again show how AIs can cross boundaries.

The more intelligent an AI becomes, the more it can venture into dangerous territories.

Reading text and understanding its meaning is one thing, but applying critical thinking and producing results based on that is something else. Society is already struggling with fake news and AI-generated deepfakes, and DALL·E 2 could make things worse if it is made available to the general public without control or governance.

This is why DALL·E 2 has some built-in safeguards. OpenAI explained that the model was trained on data from which potentially objectionable material had first been filtered out, in order to reduce the chance of it creating an image that some might find offensive.

The images DALL·E 2 creates also contain a watermark, and OpenAI has ensured that DALL·E 2 cannot generate any recognizable human faces based on a name.

Even so, the possibilities are virtually limitless.

Regardless, OpenAI said it hopes to add DALL·E 2 to its application programming interface toolset once it has undergone extensive testing, meaning it could one day be made available to third-party applications.

OpenAI also encouraged developers and researchers to sign up to test the system, saying that it is continuing to build on it while carefully examining such dangers.

Like most of OpenAI's previous work, DALL·E 2 isn't being open-sourced.

Further reading: How OpenAI's 'DALL·E 2' Invented Its Own Language That Nobody Can Understand