The data needed for insights is personal and sensitive, and must be kept private.

When businesses need to gather more and more information to improve the user experience, how can they do so without sacrificing privacy?

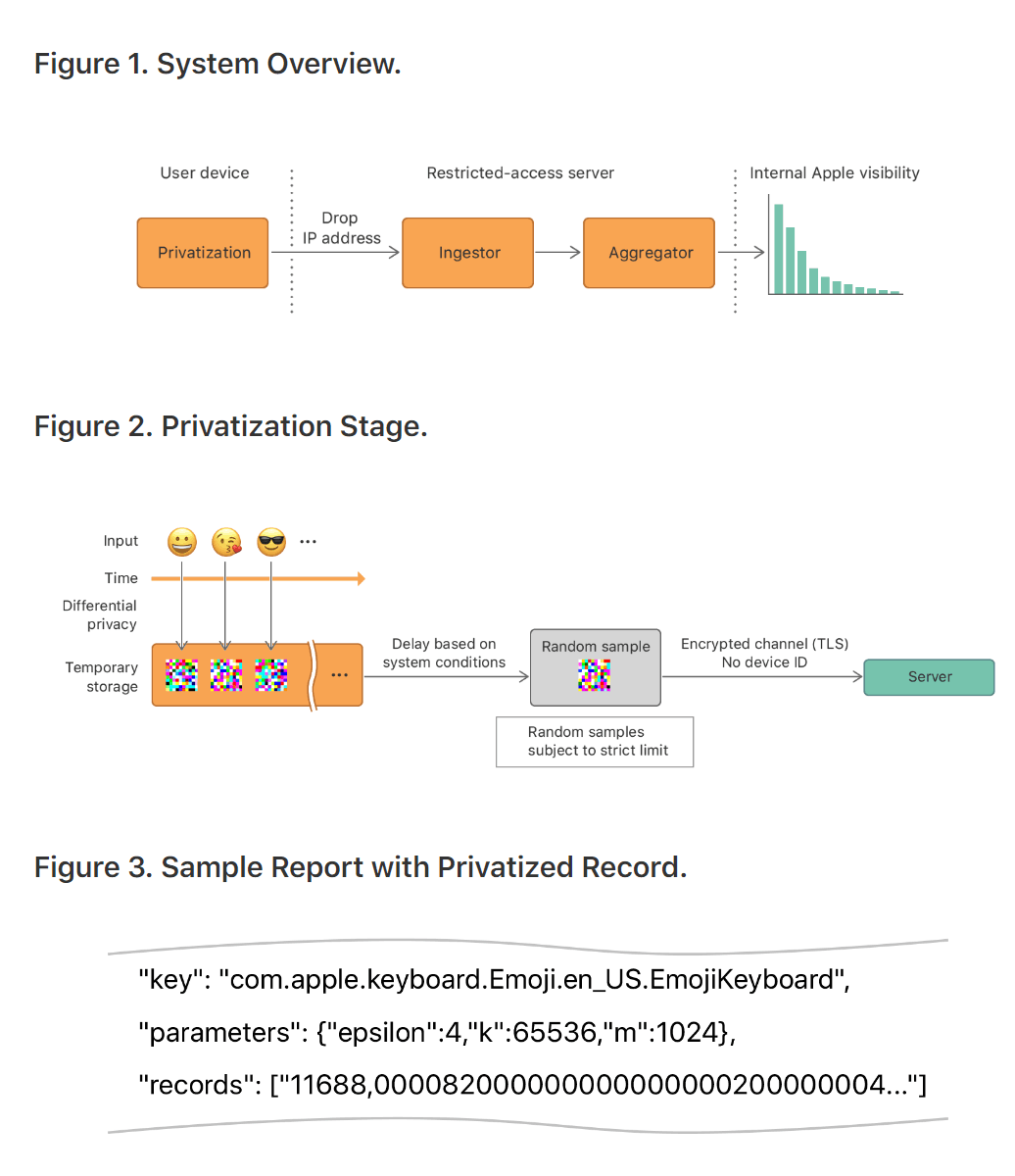

In a paper published in its Machine Learning Journal, Apple proposed using local differential privacy instead of the traditional central model. In other words, individual users' devices add noise to scramble the data before it ever reaches Apple's servers.

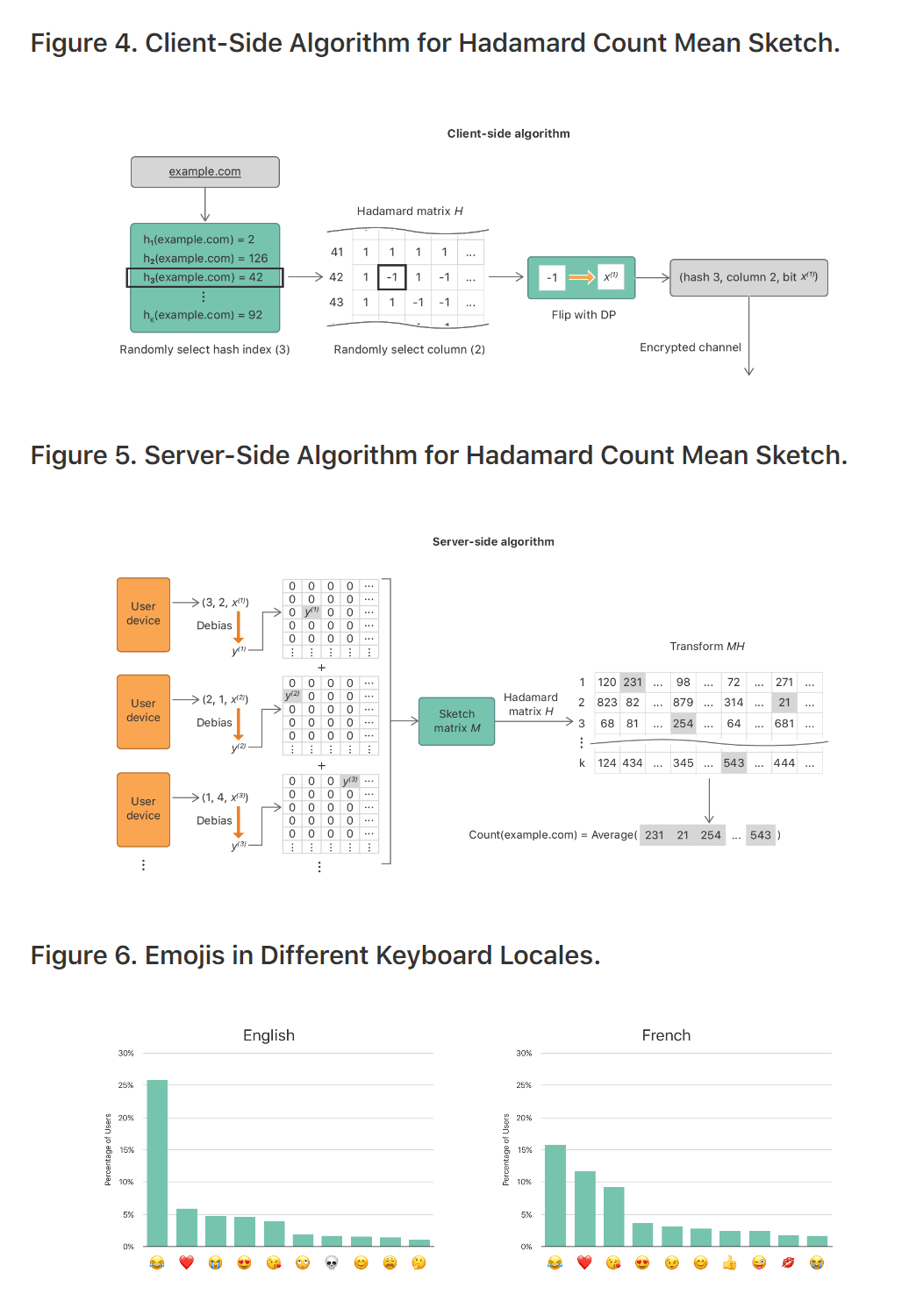

Use cases for the algorithm include identifying popular emojis and words, popular health data types and media playback preferences, as well as finding out which websites put the most strain on Safari.

Differential privacy, according to Apple, provides a mathematically rigorous definition of privacy and is "one of the strongest guarantees of privacy available."

The idea is that random noise masks each user's individual data. According to the paper, when enough people send in their data, the noise averages out and leaves only usable aggregate information behind.
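The classic mechanism behind this idea is randomized response. The following is a minimal Python sketch under stated assumptions: each user holds one yes/no bit, the device flips it with a probability set by epsilon, and the server corrects for the known flip rate. It is not the algorithm Apple describes in its paper; the function names, the 30% population and the choice of ε = 1 are hypothetical.

```python
import math
import random


def keep_probability(epsilon: float) -> float:
    """Probability of reporting the true bit so that the privacy loss is epsilon."""
    return math.exp(epsilon) / (1 + math.exp(epsilon))


def randomize(bit: bool, epsilon: float, rng: random.Random) -> bool:
    """On-device step: report the true bit with probability p, otherwise flip it."""
    return bit if rng.random() < keep_probability(epsilon) else not bit


def estimate_true_rate(reports: list[bool], epsilon: float) -> float:
    """Server-side step: unbiased estimate of the fraction of users whose bit is True.

    E[observed] = p*f + (1 - p)*(1 - f), so f = (observed - (1 - p)) / (2p - 1).
    """
    p = keep_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1 - p)) / (2 * p - 1)


rng = random.Random(42)
epsilon = 1.0
# Hypothetical population: roughly 30% of users have the attribute (e.g. used an emoji).
true_bits = [rng.random() < 0.30 for _ in range(100_000)]
# Each device randomizes its own bit; the server only ever sees `reports`.
reports = [randomize(b, epsilon, rng) for b in true_bits]

true_rate = sum(true_bits) / len(true_bits)
noisy_rate = sum(reports) / len(reports)
estimate = estimate_true_rate(reports, epsilon)
print(f"true rate {true_rate:.3f}, noisy reports {noisy_rate:.3f}, estimate {estimate:.3f}")
```

Any single report is unreliable, and the raw share of "yes" answers is skewed by the flips, but because the flip probability is known, the debiased estimate over many users lands close to the true rate.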

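Within the differential privacy framework, there are two settings: central and local. In the central setting, a trusted server collects raw data and adds noise to what it releases; in the local setting, each device adds noise before anything leaves it.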

Local differential privacy has the advantage that data is randomized before it leaves the device, so servers never see or receive raw data. No data is recorded or transmitted until users have explicitly chosen to report usage information.

Data is privatized on the user's device. For example, when a user types an emoji, the system restricts the number of privatized events it transmits for that use case. Transmission to the server occurs over an encrypted channel only once per day, without any device identifiers.
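The per-use-case cap can be pictured as a small counter on the device. The sketch below is hypothetical: the `DailyReportLimiter` class, its cap values and the payload format are illustrative assumptions, not Apple's implementation. Events beyond the daily cap are dropped, and the daily upload carries no device identifier.

```python
from collections import defaultdict
from datetime import date


class DailyReportLimiter:
    """Hypothetical on-device limiter for already-privatized events.

    caps maps a use case (e.g. "emoji") to the maximum number of privatized
    events it may contribute per day. The cap values are illustrative only.
    """

    def __init__(self, caps: dict[str, int]):
        self.caps = caps
        self.counts: dict[tuple[str, date], int] = defaultdict(int)
        self.pending: list[dict] = []

    def queue(self, use_case: str, privatized_value: int, today: date) -> bool:
        """Queue an event for upload; return False if today's cap is used up."""
        key = (use_case, today)
        # Use cases without a configured cap get 0, so they are never reported.
        if self.counts[key] >= self.caps.get(use_case, 0):
            return False  # over the cap: the event is dropped, not stored
        self.counts[key] += 1
        self.pending.append({"use_case": use_case, "value": privatized_value})
        return True

    def flush(self) -> list[dict]:
        """Called once per day: hand over queued events with no device identifier."""
        batch, self.pending = self.pending, []
        return batch


limiter = DailyReportLimiter({"emoji": 2})
day1 = date(2024, 1, 1)
results = [limiter.queue("emoji", v, day1) for v in (5, 17, 9)]
print(results)  # [True, True, False]
```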

When the records arrive on a restricted-access server, IP addresses are discarded, and so is any association between multiple records. This means Apple cannot tell, for example, whether an emoji record and a Safari web domain record came from the same user.
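The server-side steps can be sketched the same way. In this simplified illustration (the `ingest` function and the batch format are hypothetical), the IP address is read from each upload's envelope but never stored, and records from all uploads are pooled and shuffled so nothing ties them back to a single upload.

```python
import random


def ingest(batches: list[dict], rng: random.Random) -> list[dict]:
    """Hypothetical server-side ingestion that strips IPs and unlinks records.

    Each batch is assumed to look like
    {"ip": "...", "records": [{"use_case": ..., "value": ...}, ...]}.
    """
    pool: list[dict] = []
    for batch in batches:
        # The envelope's "ip" field is never copied into storage.
        for record in batch["records"]:
            pool.append({"use_case": record["use_case"], "value": record["value"]})
    # Shuffling across all batches removes the ordering that showed which
    # records arrived together, so an emoji record and a Safari domain record
    # from the same upload can no longer be linked by position.
    rng.shuffle(pool)
    return pool


incoming = [
    {"ip": "203.0.113.7", "records": [{"use_case": "emoji", "value": 12},
                                      {"use_case": "safari_domain", "value": 40}]},
    {"ip": "198.51.100.2", "records": [{"use_case": "emoji", "value": 3}]},
]
stored = ingest(incoming, random.Random(0))
print(stored)
```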

Ideally, this obfuscation protects users' private data from any hacker or government agency that gains access to Apple's databases.

The effectiveness of differential privacy depends heavily on a variable known as the "privacy loss parameter," or "epsilon" (ε), which determines how much specificity a data collector is willing to sacrifice to protect its users' secrets. A smaller epsilon means more noise and stronger privacy; a larger one means more accurate data and weaker privacy.
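For reference, the standard textbook definition (general background, not taken from Apple's paper): a randomized mechanism $M$ running on a user's device satisfies ε-local differential privacy if, for every pair of possible inputs $x, x'$ and every output $y$,

$$
\Pr[M(x) = y] \le e^{\varepsilon} \, \Pr[M(x') = y].
$$

For randomized response on a single yes/no bit, where the device reports the truth with probability $p = e^{\varepsilon}/(1 + e^{\varepsilon})$, the worst-case ratio is exactly

$$
\frac{\Pr[M(1) = 1]}{\Pr[M(0) = 1]} = \frac{p}{1 - p} = e^{\varepsilon}.
$$

So $\varepsilon = 2$ means the truth is reported about 88% of the time, while $\varepsilon = 8$ pushes that above 99.9%, which is why a larger epsilon gives weaker protection.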

Differential privacy isn't without its critics. Nor is it a simple toggle that switches between total privacy and no-holds-barred invasiveness.

Studies have suggested that even users who opt in to differential privacy are not protected enough. Apple's approach obscures just how much data it mines from individual users, which suggests that the company's data mining is more aggressive than it had promised.

This came to light when researchers found that newer versions of macOS upload more data than a typical differential privacy researcher would consider private, and that each iOS update uploads even more than the previous version.

Perhaps most troubling, according to the study's authors, is that Apple keeps both its code and its epsilon values secret. This means only Apple can change those critical variables, potentially eroding its privacy protections with little oversight.

For comparison, the other major player in the differential privacy world is Google.

The search giant's differential privacy system, used in Chrome, is called RAPPOR (Randomized Aggregatable Privacy-Preserving Ordinal Response). According to Google's analysis, it has an epsilon of 2 for any single piece of data sent to the company and an upper limit of 8 or 9 over the lifetime of the user. In theory, this offers stronger privacy than Apple's implementation.
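A lifetime limit matters because privacy losses accumulate: under basic sequential composition, the ε values of independently randomized reports add up. RAPPOR keeps its lifetime bound largely by memoizing a "permanent" randomized answer for each value and reusing it (its real design then adds a second, per-report randomization step over Bloom-filter bits, which this sketch omits). The function below is a simplified accounting illustration; the report format and numbers are hypothetical.

```python
def lifetime_epsilon(reports: list[tuple[str, float]], memoize: bool) -> float:
    """Worst-case privacy loss over many reports (basic sequential composition).

    reports: one (value_id, epsilon) pair per transmission; hypothetical format.
    memoize=False: every report is freshly randomized, so the losses add up.
    memoize=True: a value's first randomized answer is replayed verbatim, so
    repeats of that value reveal nothing new and cost no extra privacy.
    """
    if not memoize:
        return sum(eps for _, eps in reports)
    first_cost: dict[str, float] = {}
    for value_id, eps in reports:
        first_cost.setdefault(value_id, eps)  # only the first answer costs anything
    return sum(first_cost.values())


# Thirty daily reports of the same value (e.g. the same home page), each at epsilon = 2.
daily = [("homepage", 2.0)] * 30
print(lifetime_epsilon(daily, memoize=False))  # 60.0
print(lifetime_epsilon(daily, memoize=True))   # 2.0
```

Without some form of memoization or a hard cap, even a modest per-report epsilon grows without bound over a device's lifetime.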

Google also open-sources its RAPPOR code, so any changes to its epsilon values can be examined and better understood.

Still, Apple has devoted enormous resources to other privacy-preserving technologies, such as full-disk encryption on iPhones and end-to-end encryption in iMessage and FaceTime. So while some differential privacy strategies are stronger than others, overall, having one is better than having none at all.