With the internet and mobile devices, more people around the world are watching videos than ever before.

The same goes for people in India. In this 3.2 million km² country, home to around 1.3 billion people, Indians used more than 8.5GB of mobile data on average, most of it on video. In 2019, YouTube said that more than 95% of its content consumption in the country comes from people using Indic languages.

This means that people in India are seeking more and more videos in languages like Hindi, Urdu, Bengali, Sanskrit, and a number of other regional languages.

While the demand is high, not all video creators know all Indic languages.

As a solution, many English-language movies have been dubbed into Indic languages. However, the results often contain plenty of mistakes, and sometimes the experience isn't any better.

Dubbing also has a more basic limitation: the experience may not appeal to everyone, because lip-syncing is often off and the dubbed speech can seem quite unnatural.

This is where researchers from the International Institute of Information Technology (IIIT) in the southern city of Hyderabad, India, have stepped up the effort by bringing in AI.

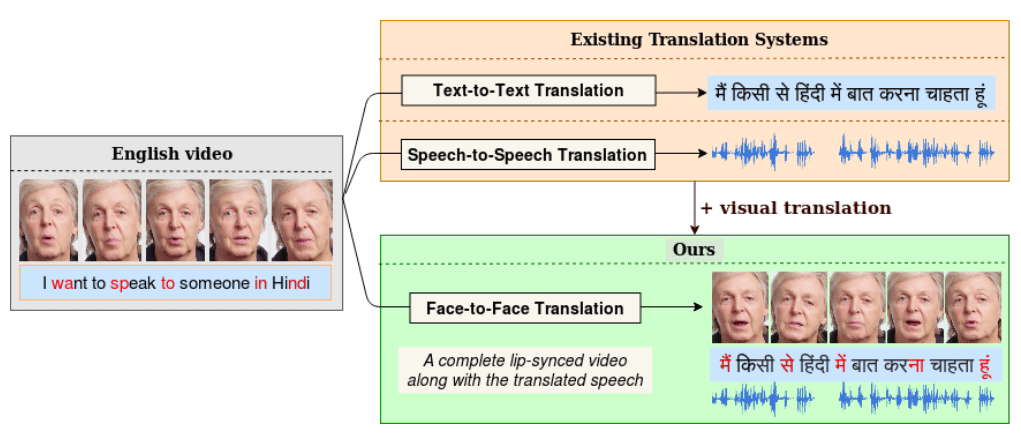

The researchers have developed an AI model that can translate and lip-sync a video from one language to another with great accuracy, improving on existing technologies that only produce translated text or speech from a video.

Since visual information, such as the movement of the lips, can be lost during dubbing, the Indian researchers used a Generative Adversarial Network (GAN) to create what they call 'LipGAN'.

Not only can the AI match lip movements to translated speech in an original video, it can also correct lip movements in a dubbed movie.

To perform "Face-to-Face Translation", as the researchers call it in their paper, the AI model must first transcribe the speech in the video using speech recognition. Then, using a specialized model trained specifically for Indic languages, the AI translates the text, for example from English to Hindi. After that, a text-to-speech model converts the translated text into voice.
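
The stages described above can be sketched as a simple chain of functions. This is only an illustrative outline, not the researchers' implementation: the class name `FaceToFacePipeline`, the stage signatures, and the toy stub functions are all assumptions made for this example, and real speech recognition, translation, text-to-speech, and LipGAN models would replace the stubs.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class FaceToFacePipeline:
    """Hypothetical wiring of the stages; each stage is a plain callable."""
    transcribe: Callable[[bytes], str]    # source audio -> source-language text
    translate: Callable[[str], str]       # source text -> target-language text
    synthesize: Callable[[str], bytes]    # target text -> target-language audio
    lip_sync: Callable[[List[bytes], bytes], List[bytes]]  # frames + new audio -> new frames

    def run(self, frames: List[bytes], audio: bytes) -> Tuple[List[bytes], bytes]:
        source_text = self.transcribe(audio)           # 1. speech recognition
        target_text = self.translate(source_text)      # 2. text translation
        new_audio = self.synthesize(target_text)       # 3. text-to-speech
        new_frames = self.lip_sync(frames, new_audio)  # 4. lip synchronization
        return new_frames, new_audio


# Toy stand-ins so the sketch runs end to end (not real models).
def stub_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")          # pretend the audio bytes are the words


def stub_translate(text: str) -> str:
    return {"hello": "namaste"}.get(text, text)


def stub_synthesize(text: str) -> bytes:
    return text.encode("utf-8")           # pretend the words are the audio bytes


def stub_lip_sync(frames: List[bytes], audio: bytes) -> List[bytes]:
    return [frame + b"|synced" for frame in frames]


pipeline = FaceToFacePipeline(
    stub_transcribe, stub_translate, stub_synthesize, stub_lip_sync
)
frames, audio = pipeline.run([b"frame0", b"frame1"], b"hello")
```

Keeping each stage behind its own callable means any one model can be swapped out, for instance a different translation model, without touching the rest of the chain.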

The researchers noted that their translation model was more accurate than Google Translate.

The internet has too many videos for any single person to consume, even in multiple lifetimes. And at any given moment, that amount increases.

On YouTube alone, there are more than 2 billion users, with 30 million of them watching videos daily. Its roughly 50 million creators collectively see more than 5 billion of their videos watched every single day. On the most popular video-streaming platform, 500 hours of video are created every single minute.

Judging by the rate at which content is created on YouTube alone, manually translating and dubbing existing videos will certainly not scale.
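
A quick back-of-the-envelope calculation shows why. The 500 hours per minute figure comes from the numbers above. The productivity figure, one finished hour of dubbed video per person per day, is purely a hypothetical assumption for illustration, as are the function names.

```python
import math


def hours_created_per_day(hours_per_minute: float) -> float:
    """Convert a per-minute creation rate into hours of video per day."""
    return hours_per_minute * 60 * 24


def dubbers_needed(video_hours_per_day: float,
                   hours_dubbed_per_person_per_day: float) -> int:
    """People needed to keep up with new video in ONE target language."""
    return math.ceil(video_hours_per_day / hours_dubbed_per_person_per_day)


daily_hours = hours_created_per_day(500)   # 500 * 60 * 24 = 720,000 hours
# Hypothetical assumption: one person dubs 1 hour of finished video per day.
people = dubbers_needed(daily_hours, hours_dubbed_per_person_per_day=1.0)
print(f"{daily_hours:,.0f} hours/day -> {people:,} dubbers per language")
```

Under that assumption, keeping pace would take about 720,000 people working every day for each target language, before any existing videos are considered.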

This is where automation can play its role.

Automation for dubbing and lip-syncing has been created in the past. But most of these tools aren't accessible to the larger Indian audience, who are among India's 500 million internet users.

While the AI from the IIIT researchers has a lot of advantages here, since it targets a specific audience, it still has some limitations.

For example, the team said that the model still struggles to detect moving faces and multiple faces in videos.