With the internet, and with the increasingly powerful tools and computers people can use, manipulating media has become easier than ever.

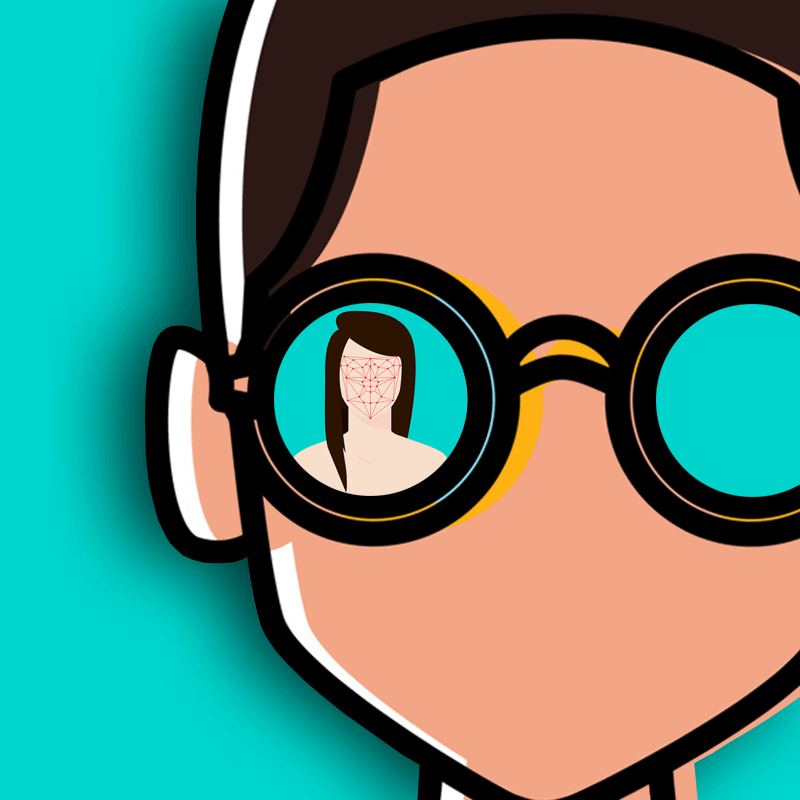

One of the most prominent methods is the 'deepfake', which uses deep-learning algorithms to manipulate photos and videos. These manipulated photos and videos often feature prominent celebrities or political figures, making them appear to do or say things they never did or said.

The technology began with a Reddit user, and people soon started abusing it.

In the early days of deepfakes, the results were rough and could often be spotted by a keen, naked eye. But the technology has since evolved, and deepfakes are becoming increasingly difficult to spot.

Microsoft, one of the tech giants in the industry, announced a technology to help identify manipulated photos and videos.

The technology has two components.

The first is a metadata-based system. The second is 'Video Authenticator', a tool that analyzes photos and videos and provides a "confidence score" indicating whether or not a piece of media has been altered by AI.


The metadata component uses Microsoft Azure to add digital hashes and certificates to a piece of content.

These hashes and certificates live with the content as its metadata, so they remain attached whenever the content is uploaded, shared, copied, or distributed.

In other words, Microsoft gives the content its own fingerprint, which distinguishes it from versions that have been manipulated.

Through this approach, whenever the content is manipulated by any means, the change can be detected, because the altered version no longer matches the original's fingerprint.
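To illustrate the fingerprint idea, here is a minimal Python sketch. It is not Microsoft's implementation: it assumes SHA-256 as the hash function and uses an HMAC with a hypothetical publisher key as a simplified stand-in for the certificates Microsoft attaches through Azure. The names `fingerprint`, `sign`, `verify`, and `PUBLISHER_KEY` are illustrative only.

```python
import hashlib
import hmac

# Hypothetical secret key held by the content publisher. Microsoft's system
# uses certificates (public-key signatures); HMAC is a simplified stand-in.
PUBLISHER_KEY = b"example-publisher-key"


def fingerprint(content: bytes) -> str:
    """Return a SHA-256 hex digest that acts as the content's fingerprint."""
    return hashlib.sha256(content).hexdigest()


def sign(content: bytes, key: bytes = PUBLISHER_KEY) -> dict:
    """Build metadata that travels with the content: its hash plus a tag over that hash."""
    digest = fingerprint(content)
    tag = hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()
    return {"hash": digest, "signature": tag}


def verify(content: bytes, metadata: dict, key: bytes = PUBLISHER_KEY) -> bool:
    """Return True only if the metadata was signed with the publisher's key
    and the content still matches the original fingerprint."""
    expected_tag = hmac.new(key, metadata["hash"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_tag, metadata["signature"]):
        return False  # the metadata itself was forged or tampered with
    return hmac.compare_digest(fingerprint(content), metadata["hash"])


if __name__ == "__main__":
    original = b"frame data of the original video"
    meta = sign(original)
    print(verify(original, meta))               # True
    print(verify(original + b" edited", meta))  # False
```

Changing even a single byte of the content changes its SHA-256 digest, so the edited copy fails verification. A real deployment would rely on public-key certificates, so that anyone can check the content without holding the publisher's secret.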

The second component is 'Video Authenticator', which uses AI to detect manipulation in both photos and videos.

According to a Microsoft blog post, the company trained Video Authenticator using publicly available datasets such as FaceForensics++, and tested it on the DeepFake Detection Challenge Dataset.

The algorithm, designed to identify manipulated elements that the human eye cannot easily catch, was developed with the help of Microsoft’s Responsible AI team and the Microsoft AI, Ethics, and Effects in Engineering and Research (AETHER) Committee.

Because the system is powered by AI, Microsoft is effectively fighting deepfake AI with its own machine-learning technology.

And because Video Authenticator is an AI built to fight other AIs, Microsoft does not disclose the technology's details publicly, out of concern that bad actors could train their own algorithms to evade it.

So instead of releasing the technology and its tools directly to the public, Microsoft is making them available through a partnership with the AI Foundation’s Reality Defender 2020 (RD2020) initiative.

Microsoft introduced the technology, along with both of its components, just ahead of the 2020 U.S. presidential election.

With these tools, Microsoft hopes to help political campaigns and media organizations keep opponents from using deepfake technologies against them, and to take a step toward stopping bogus videos from drowning out legitimate information on the web.

Microsoft acknowledged that its detection tools are not perfect and that generative technologies may always stay a step ahead of them. As a result, some manipulated photos or videos might slip through. Microsoft hopes to improve the technology over time.
