Humans can describe practically anything, even the unimaginable. This is because humans can relate objects to their surroundings to understand what those objects mean in a scene.

Computers were long thought to be far behind humans in this ability. Object recognition algorithms can be trained to recognize things, but they were not able to relate the things they see to one another. This limitation kept them from describing scenes accurately.

This is why researchers have sought to improve object recognition AI by making the technology more aware of its surroundings.

Microsoft has now made major progress in this area.

Back in 2016, Google said its artificial intelligence could caption images almost as well as humans, with 94 percent accuracy. Microsoft has now gone further, claiming that its AI can caption images even more accurately than humans.

Microsoft claims it is twice as good as the image captioning model it has been using since 2015.

When it was announced, Microsoft's AI quickly took the top spot on the leaderboard for the nocaps image captioning benchmark.

Eric Boyd, CVP of Azure AI, said that this achievement allows Microsoft to better understand images, which in turn lets it refine its captioning techniques.

With it, Microsoft aims to help its users, for example by making it easier for them to find images through its search engine. Microsoft also wants to help visually impaired users navigate the web and software far more easily.

Xuedong Huang, CTO of Azure AI Cognitive Services, said that Azure moved to deploy the capability as quickly as possible because of its potential benefits for users.

His team pre-trained the model on images tagged with specific keywords, which gave it a visual vocabulary that most AI frameworks lack. Using keywords instead of full captions allowed the researchers to feed far more data into their model.

The pre-trained model was then fine-tuned for captioning on a data set of captioned images. When presented with an image containing novel objects, the AI system drew on this visual vocabulary to generate an accurate caption.

“Image captioning is one of the core computer vision capabilities that can enable a broad range of services,” Huang said in a blog post. “This visual vocabulary pre-training essentially is the education needed to train the system; we are trying to educate this motor memory.”

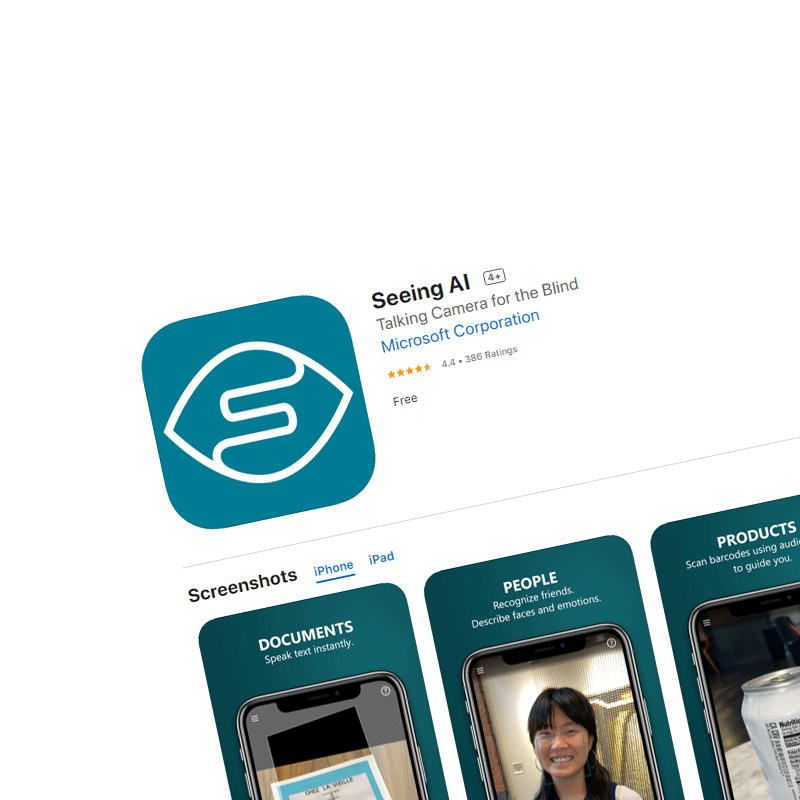

Besides making the captioning model part of Azure Cognitive Services, where developers can bring it into their apps, Microsoft has also made it available in Seeing AI, a Microsoft app developed for blind and visually impaired users.

The app is designed to narrate the world around them.

While it’s not unusual to see companies tout their AI research innovations, it is very rare to see one deployed so quickly to shipping products.

Companies tend to test products thoroughly in several phases: alpha and beta releases, gathering feedback, tweaking parts, squashing bugs, and so forth. Here, however, Microsoft is confident enough to put the technology to the real test without much hesitation.

According to Boyd, Seeing AI developer Saqib Shaikh, who is blind himself and pushes for greater accessibility at Microsoft, describes it as a dramatic improvement over the app's previous offering.

While the AI has set a high mark on the benchmark, its real test will be how it performs in the real world.
