Artificial intelligence (AI) and deep learning are widely seen as the future of computing. A great deal of research and testing is under way to make computers not only smarter, but also more human.

Nvidia is one of the companies that aims to be part of this push. It has moved its GPU business beyond powering gaming computers to focus on technologies for advanced machine learning. On April 5th, 2016, the company announced the DGX-1 supercomputer.

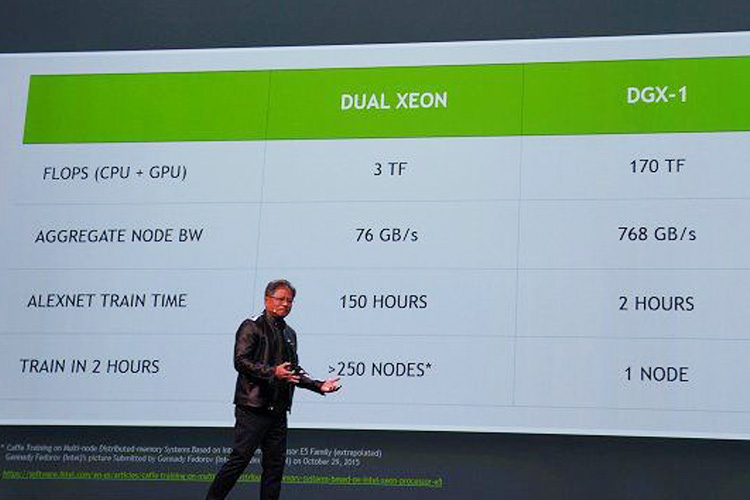

CEO Jen-Hsun Huang called the DGX-1 the equivalent of 250 servers in a box. It houses 7TB of SSD storage, eight Tesla P100 GPUs with 16 GB of RAM each, and two Xeon processors.

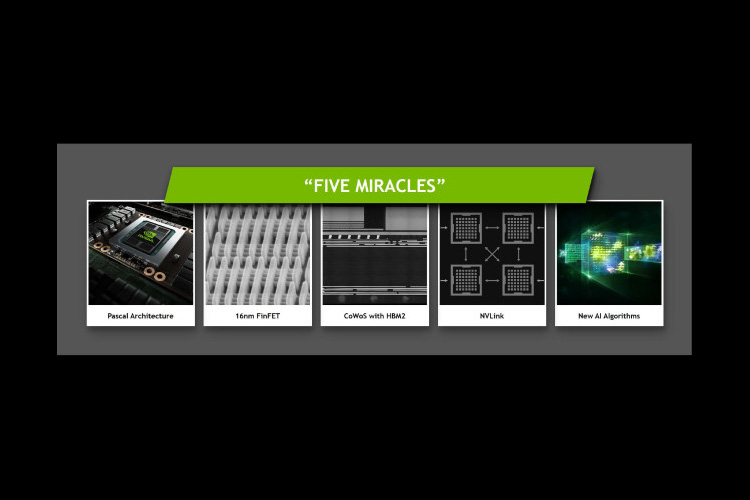

Huang described the Tesla P100 as the first GPU designed for hyperscale datacenter applications. It combines Nvidia’s new Pascal GPU architecture with the latest memory, semiconductor process, and packaging technology, all aimed at creating the most powerful computing platform available at the time of its release. Built on 16nm FinFET manufacturing technology, each Tesla P100 GPU features over 15 billion transistors on a 600 mm² die. In addition, it carries 16GB of die-stacked second-generation High-Bandwidth Memory (HBM2).

The machine has a 3500W power budget, a fraction of what a comparable traditional CPU-based platform would normally need.

In the DGX-1, each P100's memory and GPU are combined into a multichip module on a silicon substrate. The P100 uses NVIDIA’s NVLink interface technology, arranged in a Hybrid Cube Mesh, to connect multiple Tesla P100 GPU modules. And NVIDIA didn’t stop at the chip: the company also announced a complete system built around it, dubbed the DGX-1.

The result? The supercomputer delivers 170 teraflops of performance. With that much raw power, the DGX-1 is a 12-fold improvement over what Nvidia announced in 2015.
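As a rough sanity check on these figures, the short Python sketch below splits the quoted 170 teraflops across the eight P100 GPUs and totals their memory. It assumes the throughput divides evenly across the GPUs and ignores the two Xeon processors; the variable names are illustrative only.

```python
# Figures quoted in the article for the DGX-1.
TOTAL_TERAFLOPS = 170      # quoted system throughput
GPU_COUNT = 8              # Tesla P100 GPUs in the box
MEMORY_PER_GPU_GB = 16     # memory per GPU

# Assumption: throughput is spread evenly over the eight GPUs.
teraflops_per_gpu = TOTAL_TERAFLOPS / GPU_COUNT
total_gpu_memory_gb = GPU_COUNT * MEMORY_PER_GPU_GB

print(f"Per-GPU throughput: {teraflops_per_gpu:.2f} teraflops")
print(f"Total GPU memory: {total_gpu_memory_gb} GB")
```

Under that even-split assumption, each P100 would account for a little over 21 teraflops of the total.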

Huang described the P100 as the most ambitious project the company has ever undertaken. It is built on the new Pascal GPU architecture and uses NVLink, an interconnect that Nvidia says is five times faster than a PCIe connection. Nvidia describes it as the most advanced hyperscale datacenter GPU ever built.

The margin between the DGX-1's eight P100s and, for example, four Maxwell GPUs is quite large. Where the Maxwell GPUs take 25 hours to process 1.33 billion images, the DGX-1 can do it in just two hours.
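The size of that gap is easy to work out. The sketch below uses only the two durations quoted above and assumes both runs cover the same 1.33 billion images; the variable names are illustrative.

```python
# Durations quoted in the article for the same 1.33 billion images.
MAXWELL_HOURS = 25   # four Maxwell GPUs
DGX1_HOURS = 2       # DGX-1 with eight P100s

speedup = MAXWELL_HOURS / DGX1_HOURS
hours_saved = MAXWELL_HOURS - DGX1_HOURS

print(f"Speedup: {speedup:.1f}x")
print(f"Hours saved per run: {hours_saved}")
```

The ratio comes out at 12.5, in line with the roughly 12-fold improvement quoted earlier.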

The DGX-1 is meant to be the first full server appliance platform specializing in deep learning applications. While Nvidia already has GPUs specialized for such applications, the DGX-1 is the next logical step for the company as it ventures deeper into powering AI.

Its aim? To power deep learning systems and neural networks. In short, Nvidia wants to be the one that runs the programs that simulate human-like thought processes.

GPU, The Future Race For Computing Power

There was a time when a CPU's processing power was the benchmark of computing achievement. Power was everything, and everyone seemed to be racing to announce and produce faster, more capable CPUs. But then came a time when PCs stagnated. It's not that we've reached the limit of what CPUs can do; it's that everything around them seems to have changed.

One of the main reasons is that the traditional PC market has been declining as consumers shift to mobile computing solutions like smartphones.

While processing power is a must for any computer to achieve what was once impossible, the race to build more capable CPUs has slowed. In its place, the GPU (graphics processing unit) has become the more interesting subject.

By 2011, AI researchers around the world had discovered the potential of GPUs. The Google Brain project, for example, was able to learn to recognize cats and people by watching movies on YouTube, but it required 2,000 CPUs. When Nvidia Research teamed with Andrew Ng's team at Stanford to use GPUs for deep learning, they found that 12 Nvidia GPUs could deliver the deep-learning performance of 2,000 CPUs. Researchers at NYU, the University of Toronto, and the Swiss AI Lab then accelerated their deep neural networks (DNNs) on GPUs, and the game began.

GPUs are no longer just for powering graphical user interfaces or for designers creating realistic visuals. They now serve as computing accelerators. GPUs are far better than CPUs at massively parallel computing, which makes them well suited to providing the horsepower for top supercomputers.

That makes GPUs a practical way to power everything from autonomous vehicles to scientific research.
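The parallel style of computing described above can be illustrated with a small Python sketch. It is not GPU code: it only shows the pattern of splitting data into chunks and applying the same operation to each chunk independently, which a GPU does in hardware across thousands of cores. The helper names `scale_chunk` and `parallel_scale` are hypothetical, and Python threads are used purely for illustration, so no real speedup should be expected from this example.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_chunk(chunk, factor):
    """Apply the same operation to every element of one chunk."""
    return [value * factor for value in chunk]


def parallel_scale(values, factor, workers=4):
    """Split the data into chunks and process each chunk independently."""
    chunk_size = max(1, (len(values) + workers - 1) // workers)
    chunks = [values[i:i + chunk_size]
              for i in range(0, len(values), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks.
        results = list(pool.map(lambda chunk: scale_chunk(chunk, factor),
                                chunks))
    # Stitch the processed chunks back together.
    return [value for chunk in results for value in chunk]


data = list(range(10))
scaled = parallel_scale(data, 3)
print(scaled)
```

Because no chunk depends on another, the work can be spread over as many workers as are available, which is the property that lets this kind of workload scale on GPUs.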

NVIDIA has been a pioneer in the GPU computing market with its CUDA platform, which enables researchers to do leading-edge work and keep developing new uses for GPU acceleration.

The DGX-1, the supercomputer Nvidia is building, should be able to handle AI and machine learning tasks quickly and effectively, and these are not limited to analyzing images, objects and patterns. Systems like these usually run on regular CPUs working in parallel. In the DGX-1, eight Teslas work in parallel to handle huge amounts of data.

With that much power at its disposal, Nvidia's 3500W DGX-1 costs $129,000.
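To put the price and power budget in perspective, the sketch below derives cost per teraflop and throughput per watt from the figures quoted in this article. It assumes the 170 teraflops is sustained at the full 3500W budget, which is a simplification; the variable names are illustrative.

```python
# Figures quoted in the article for the DGX-1.
PRICE_USD = 129_000
POWER_WATTS = 3_500
TOTAL_TERAFLOPS = 170

# Assumption: quoted throughput is delivered at the full power budget.
usd_per_teraflop = PRICE_USD / TOTAL_TERAFLOPS
gigaflops_per_watt = TOTAL_TERAFLOPS * 1_000 / POWER_WATTS

print(f"Cost per teraflop: ${usd_per_teraflop:,.2f}")
print(f"Throughput per watt: {gigaflops_per_watt:.2f} gigaflops")
```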

New Opportunities

Nvidia's keynote at the GPU Technology Conference 2016 discussed how the company is focusing on developing its GPU technology.

First, Huang described the Nvidia SDK as a collection of essential libraries centered around GPU computing. GameWorks, DesignWorks, ComputeWorks and the previously revealed VRWorks are all bundled inside this development kit. Next came VR technology, which Nvidia sees as a new computing platform.

Huang also believes that AI is the next big thing. With Google's DeepMind, TensorFlow and others, AI is seen as a quickly expanding industry. Nvidia predicts that the industry will be worth $500 billion over the course of the next decade. At this point, he revealed Nvidia's new family of products: the Tesla M40, Tesla M4, Tesla P100 and the DGX-1.

Nvidia's target audience for the DGX-1 is AI pioneers such as the University of Toronto, UC Berkeley and Massachusetts General Hospital.

Finally, the company said it will be developing Drive PX, an AI computing platform for cars. It detects its surroundings through integrated sensors at 180fps and can be trained to recognize traffic signs. Nvidia's Drive PX holds the #1 spot for accuracy on the KITTI car detection test.

And with AI in mind, Nvidia is trying to push AI developers to their limits by announcing the world's first autonomous car race, the Roborace.

What Nvidia is doing here is taking steps to create more business opportunities.