The generative AI race continues to heat up, and the only way to advance is by adding more and more features.

OpenAI, which kickstarted the trend with the introduction of ChatGPT, has released a 'Read Aloud' feature that does exactly what its name says.

Available on the web version of ChatGPT and on the ChatGPT apps for iOS and Android, Read Aloud lets the AI read its own responses aloud in one of five voices.

Read Aloud can speak 37 languages and will auto-detect the language of the text it’s reading.

OpenAI has made it available for both GPT-3.5 and its flagship GPT-4.

ChatGPT launched a voice chat feature back in September 2023, where users can directly ask questions via voice prompts and have the bot answer with its own voice.

The new feature, by contrast, lets users have ChatGPT read its written answers aloud.

The difference is that the bot now generates a written response first and then reads it back to users.

What's more, users can set the chatbot to always respond verbally to prompts.

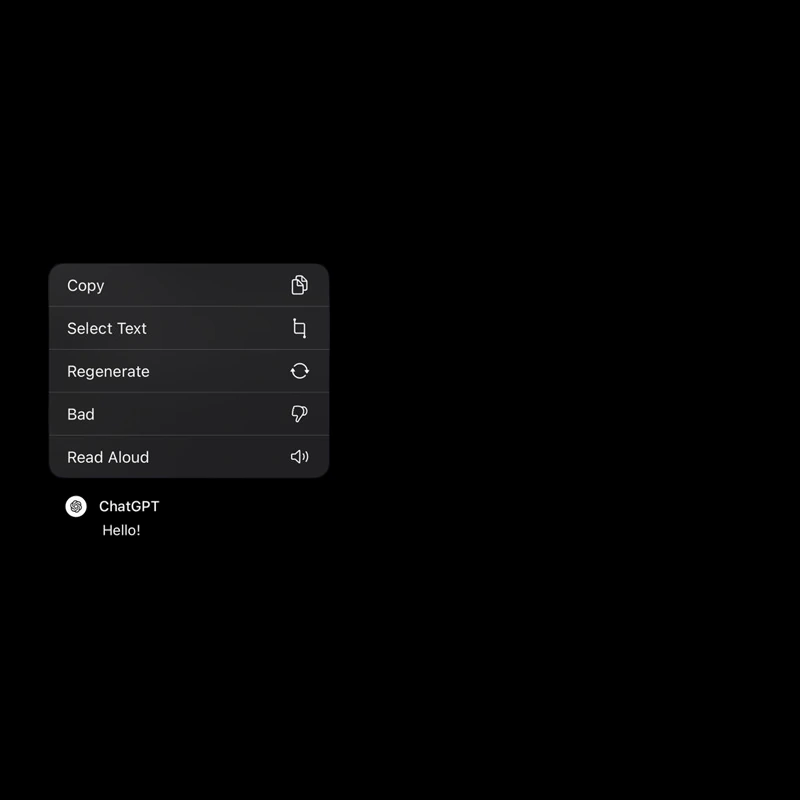

On the mobile apps, users can tap and hold the text to open the Read Aloud player, where they can play, pause, or rewind the readout.

On the web, a speaker icon appears below the text.

In all, this feature could come in handy when users are on the go.

It can also be useful for tasks like reading back speeches or telling stories, where listeners may want to pause or rewind a passage to hear how it sounds again.


ChatGPT can now read responses to you.

On iOS or Android, tap and hold the message and then tap “Read Aloud”. We’ve also started rolling on web - click the "Read Aloud" button below the message. pic.twitter.com/KevIkgAFbG

— OpenAI (@OpenAI) March 4, 2024

The feature showcases OpenAI's multimodal capabilities, which let its AI take in and respond through more than one medium.

The feature also has the potential to become an accessibility tool.

In an interview, Joanne Jang, who leads product for model behavior at OpenAI, explained that the company has been thinking about accessibility for some time.

She pointed to a feature released in 2023 that lets users submit images as inputs and ask questions about them.

As she put it: "[...] we knew the technology would be powerful, but we didn’t really understand all the ways in which it could be used by the world."