Researchers at the University of Toronto have designed an algorithm that disrupts facial recognition technology.

On the web, and especially on social networks like Facebook, every photo or video a user uploads is scanned by a facial recognition system that tries to identify whoever appears in it from facial feature points, in order to learn more about each individual.

The system feeds on user data. By combining face recognition with location data and other metadata, social media platforms are getting better at it by the minute.

For those who are concerned about their privacy, especially since the Facebook-Cambridge Analytica scandal, the researchers have developed an algorithm that can dynamically disrupt this advanced technology.

The work was led by Professor Parham Aarabi and graduate student Avishek Bose at U of T's Faculty of Applied Science & Engineering, and it relies on a technique called 'adversarial training.' It essentially sets up two algorithms that work against each other. Aarabi and Bose created two neural networks: one that identifies people's faces, and one that works to disrupt the first.

The two learn from each other, and each gets better with every round.
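
To make the back-and-forth concrete, here is a minimal sketch of alternating training on toy data. It is not the researchers' system: the "detector" is a plain logistic regression rather than a neural network, the "disruptor" is a single shared perturbation (`delta`) capped at a hypothetical budget `epsilon`, and the "faces" are random feature vectors invented for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
dim, epsilon, lr = 16, 0.5, 0.1                    # illustrative values only
faces = rng.normal(loc=1.0, size=(200, dim))       # stand-in "face" features
non_faces = rng.normal(loc=-1.0, size=(200, dim))  # stand-in background features

w, b = np.zeros(dim), 0.0  # detector parameters (logistic regression)
delta = np.zeros(dim)      # disruptor: one shared, bounded perturbation

for step in range(200):
    # Detector step: learn to separate disrupted faces from non-faces.
    x = np.vstack([faces + delta, non_faces])
    y = np.concatenate([np.ones(len(faces)), np.zeros(len(non_faces))])
    p = sigmoid(x @ w + b)
    w -= lr * (x.T @ (p - y)) / len(y)
    b -= lr * np.mean(p - y)

    # Disruptor step: nudge delta in the direction that lowers the detector's
    # score on faces, while staying inside the +/- epsilon budget.
    delta = np.clip(delta - lr * np.sign(w), -epsilon, epsilon)

# Fraction of faces the final detector still flags, without and with the disruptor.
clean_rate = np.mean(sigmoid(faces @ w + b) > 0.5)
disrupted_rate = np.mean(sigmoid((faces + delta) @ w + b) > 0.5)
print(clean_rate, disrupted_rate)
```

In this toy setup the detector keeps adapting to whatever the disruptor does, and the disruptor keeps pushing against the detector's current weights, which is the tug-of-war the article describes. A linear detector with a tiny perturbation budget is far easier to defend than a real face detector, so the numbers it prints say nothing about the published results.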

"The disruptive AI can 'attack' what the neural net for the face detection is looking for," explained Bose. "If the detection AI is looking for the corner of the eyes, for example, it adjusts the corner of the eyes so they're less noticeable. It creates very subtle disturbances in the photo, but to the detector, they're significant enough to fool the system."

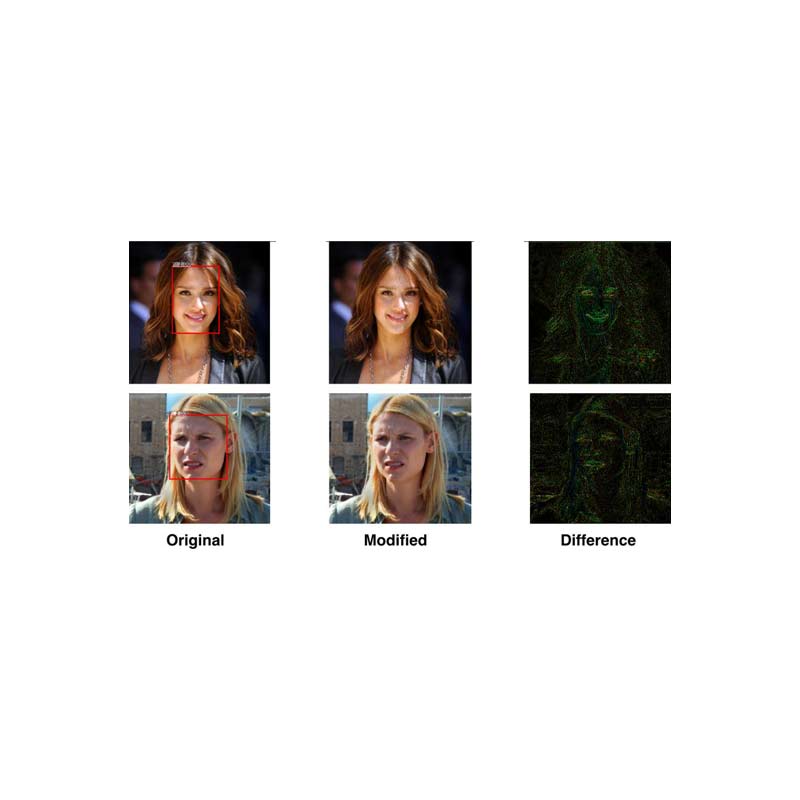

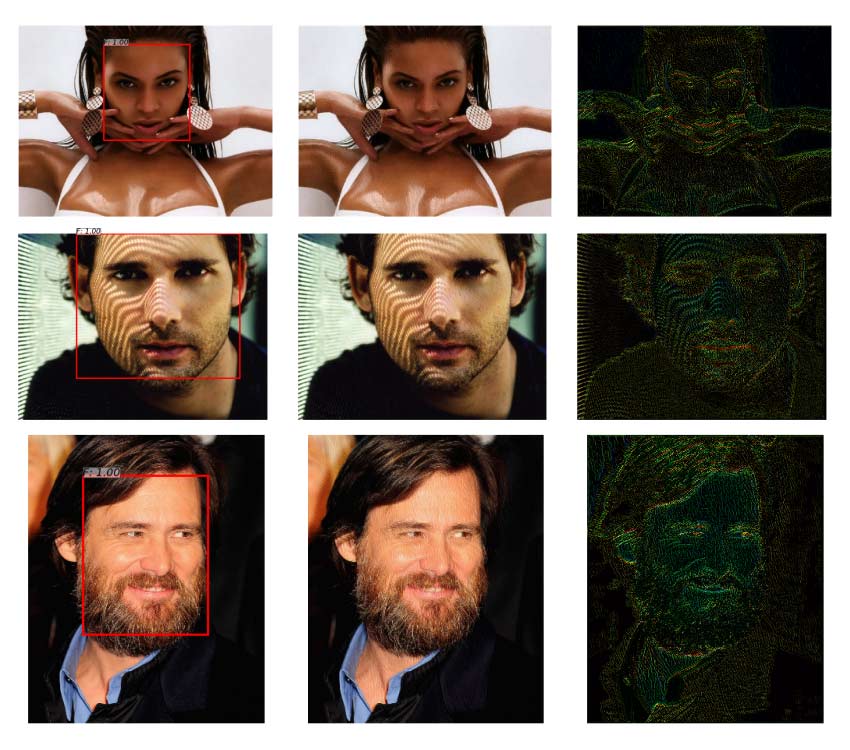

In practice, users would apply a kind of filter that alters specific pixels in an image. The alteration can be invisible to the human eye, but it is enough to confuse a facial recognition system.
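
The idea of a small, bounded pixel change with a large effect on the detector can be sketched as follows. This is an assumption-laden toy, not the team's filter: the detector here is a hypothetical linear model with random weights, and the perturbation is a fast-gradient-sign-style step, a standard textbook attack swapped in for illustration. The names `detector_score`, `privacy_filter`, and `epsilon` are made up for this example.

```python
import numpy as np

def detector_score(image, weights, bias):
    """Toy 'face detector': a logistic score in (0, 1) from a linear model."""
    return 1.0 / (1.0 + np.exp(-(np.sum(image * weights) + bias)))

def privacy_filter(image, weights, epsilon=0.02):
    """Shift each pixel by at most epsilon in the direction that lowers the score."""
    # For a linear model, the gradient of the logit with respect to the image
    # is simply `weights`, so its sign gives the per-pixel direction to move.
    perturbed = image - epsilon * np.sign(weights)
    return np.clip(perturbed, 0.0, 1.0)  # keep pixel values in a valid range

rng = np.random.default_rng(0)
weights = rng.normal(size=(32, 32))              # hypothetical detector weights
image = rng.uniform(0.2, 0.8, size=(32, 32))     # stand-in grayscale image in [0, 1]
bias = 2.0 - np.sum(image * weights)             # make the clean image a confident detection

filtered = privacy_filter(image, weights)
before = detector_score(image, weights, bias)
after = detector_score(filtered, weights, bias)
max_change = np.max(np.abs(filtered - image))
print(before, after, max_change)
```

No pixel moves by more than `epsilon` (2 percent of the intensity range here), yet the score drops sharply because every pixel is pushed in the direction the detector is most sensitive to. A real filter has to work without direct access to a simple linear detector, which is where the trained disruptor network comes in.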

In the research, Aarabi and Bose tested the system on the 300-W face dataset, an industry-standard pool of more than 600 faces covering a wide range of ethnicities, lighting conditions, and environments. The system reduced the proportion of detectable faces from 100 percent down to 0.5 percent.

"Ten years ago these algorithms would have to be human-defined, but now neural nets learn by themselves - you don't need to supply them anything except training data," Aarabi said. "In the end, they can do some really amazing things. It's a fascinating time in the field, there's enormous potential."

"Personal privacy is a real issue as facial recognition becomes better and better," continued Aarabi. "This is one way in which beneficial anti-facial-recognition systems can combat that ability."

In addition to disabling facial recognition, the technology can also disrupt other face-based tasks, such as image-based search, feature identification, and emotion and ethnicity estimation.

With this finding, the team hopes to make the privacy filter publicly available.
