Experimenting with AI can be fun, especially when knowing that they can see what we cannot, or failed to understand what is apparent.

But when life is at stake, like for example on autonomous cars, the fun ends.

To understand how computers see the world, we need to understand visual classification algorithms powered by a computer model called a convolutional neural network (CNN), which are commonly used to recognize objects in images.

Humans can train these algorithms to recognize something like a cat or a dog by showing a lot of cats and dogs pictures, and then letting the CNN to work by itself to compare the given pictures, figuring out what features they share with each other.

Once the CNN gets to know the classifiers after identifying enough cats and dogs pictures in its training process, it should be able to reliably recognize cats or dogs in new pictures it sees. Take a look at the image below:

While humans recognize cats and dogs in pictures by looking for abstract features: ears, snout, fur, teeth and so on, CNN doesn't work this way. This is why AI's method of understanding images doesn't necessarily make sense for humans. This is because we interpret the world much differently than a CNN does.

The problem with AI is that they don't have a proper set of eyes like humans. Machines don't "see" the world like we do, and instead, processes images to understand what's what. This inevitable difference makes it possible for humans (or anything else) to manipulate them.

For example, adding parts to an image can easily fool AI.

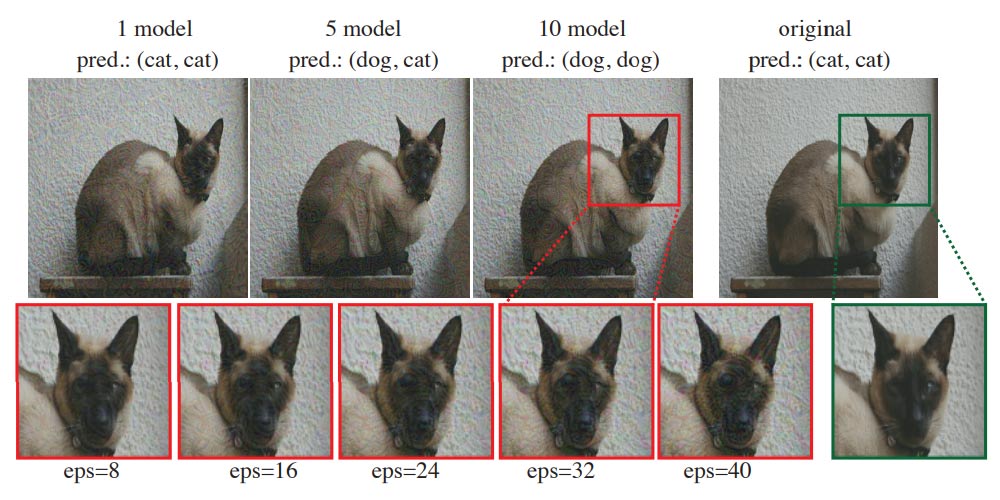

In the image shown, the left is a cat. But on the right, the same image has been tweaked a bit to make it difficult for CNN to be able to tell what it really is. In this case, the CNN thinks that it's a dog rather than a cat.

This however, can also trick humans.

This is an example of 'adversarial image'. This kind of image is designed specifically to fool neural networks into making incorrect determination about what they're seeing.

Understanding adversarial images and how they affect AIs, should help us avoid fatal tragedies involving robots misinterpreting their environment.

It’s incredibly important that researchers in the field of AI understand the very nature of these simple attacks and accidents.

Because computers are getting smarter and smarter, we need to know how AI works by comparing it with our own brain; we need to know why humans are resistant to certain forms of image manipulation. And perhaps more importantly, we need to know what is exactly needed to fool a person with an image.

The idea here is that what doesn’t fool a person shouldn’t be able to trick an AI.

If we knew that the human brain could resist a certain class of adversarial examples, this would provide a proof for a similar mechanism in machine learning security.

Further reading: How Artificial Intelligence Can Be Tricked And Fooled By 'Adversarial Examples'