The robots.txt file is an essential part of any website: it tells search engine crawlers which parts of the site they may and may not crawl.

With this simple text file, website owners and webmasters can exclude an entire domain, complete directories, one or more subdirectories, or individual files from being crawled.
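To make these levels of exclusion concrete, here is a minimal sketch that uses Python's standard-library `urllib.robotparser` to evaluate a robots.txt file. The file contents, the `example.com` host and the paths are hypothetical. The rules sit under `User-agent: *`, so they apply to every crawler, including Bingbot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site. It excludes a whole directory,
# a single subdirectory and an individual file for every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/drafts/
Disallow: /downloads/price-list.pdf
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Check a few hypothetical paths against the rules above.
for path in ["/admin/settings", "/blog/drafts/post-1", "/blog/post-1",
             "/downloads/price-list.pdf", "/downloads/brochure.pdf"]:
    url = "https://www.example.com" + path
    verdict = "allowed" if parser.can_fetch("Bingbot", url) else "blocked"
    print(path, "->", verdict)
```

Only the `drafts` subdirectory of `/blog/` is excluded, so `/blog/post-1` stays crawlable. The same applies to every file in `/downloads/` except the one listed.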

The file, which should always sit in the root directory of a domain, is the first thing legitimate crawlers look for and open when they visit a website.
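Because the file lives at the root, a crawler can work out its location from any page URL on the same host. The sketch below shows that derivation with Python's `urllib.parse`. The helper name `robots_url` is hypothetical and is not part of any crawler's published code.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt location for the host serving page_url.

    The file is expected at the root of the scheme + host (+ port),
    never inside a subdirectory, so the page's own path is discarded.
    """
    parts = urlsplit(page_url)
    # Keep scheme and network location; replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/2020/post.html?ref=home"))
# https://www.example.com/robots.txt
```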

This is why the file matters so much, however simple it is.

Because of that, Bing wants to help website owners and webmasters manage their robots.txt files by giving them a tool that automatically checks the file for errors.

The tool, available in Bing Webmaster Tools, can "highlight the issues that would prevent them from getting optimally crawled by Bing and other robots."

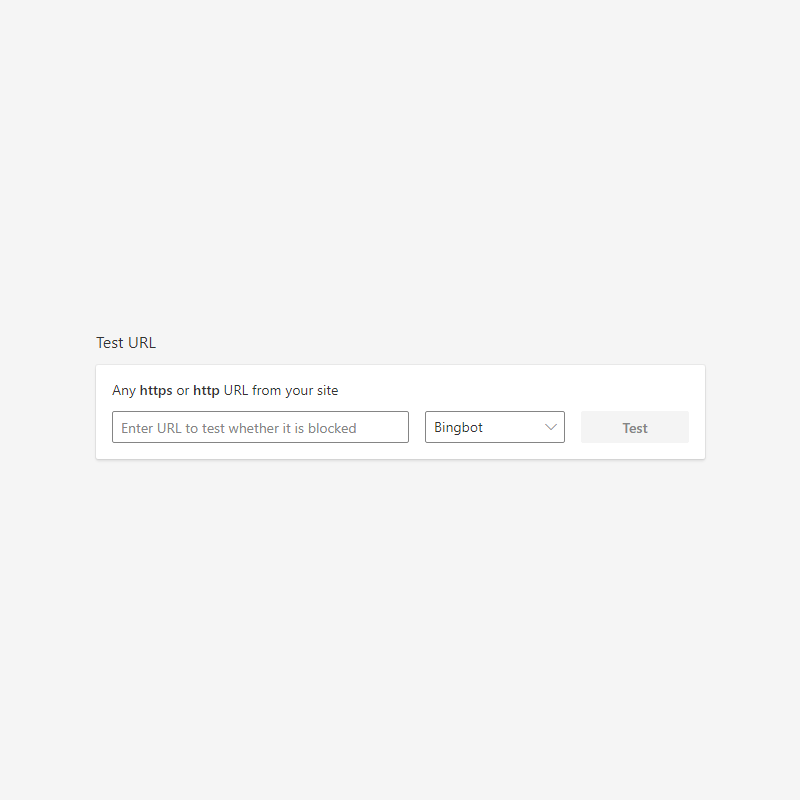

The tool is very simple to use.

Users just need to enter a URL from their site in the field, and the tool will check it against the robots.txt file.

The results show whether a given URL is blocked from being crawled and, if it is, which statement blocks it and for which user-agent.
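To illustrate what "which statement blocks it, and for which user-agent" involves, here is a minimal sketch of that kind of lookup. It is not Bing's implementation, and the helper names `parse_groups` and `find_blocking_rule` are hypothetical. It assumes plain prefix matching (no `*` or `$` wildcards). It picks the group whose user-agent token matches the crawler and falls back to `*` when none does. It then applies the precedence described in RFC 9309: the longest matching rule wins, and Allow beats Disallow on a tie. The tokens `bingbot` and `adidxbot` stand in for Bing's two crawlers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    line_no: int  # line in robots.txt where the rule appears
    kind: str     # "allow" or "disallow"
    path: str     # path prefix the rule matches


def parse_groups(text: str) -> dict[str, list[Rule]]:
    """Map each lower-cased user-agent token to the rules of its group(s)."""
    groups: dict[str, list[Rule]] = {}
    current_agents: list[str] = []
    last_was_agent = False
    for line_no, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            if not last_was_agent:
                current_agents = []  # a new group starts here
            current_agents.append(value.lower())
            groups.setdefault(value.lower(), [])
            last_was_agent = True
        elif key in ("allow", "disallow"):
            last_was_agent = False
            if value:  # an empty Disallow blocks nothing, so skip it
                for agent in current_agents:
                    groups[agent].append(Rule(line_no, key, value))
    return groups


def find_blocking_rule(text: str, user_agent: str, path: str) -> Optional[Rule]:
    """Return the Disallow rule that blocks `path` for `user_agent`, or None."""
    groups = parse_groups(text)
    # Use the crawler's own group if it has one, otherwise the "*" group.
    rules = groups.get(user_agent.lower(), groups.get("*", []))
    matches = [r for r in rules if path.startswith(r.path)]
    if not matches:
        return None
    # Longest (most specific) match wins; on a tie, Allow beats Disallow.
    best = max(matches, key=lambda r: (len(r.path), r.kind == "allow"))
    return best if best.kind == "disallow" else None


ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: bingbot
Disallow: /search/
Allow: /search/help
"""

for agent in ("bingbot", "adidxbot"):
    for path in ("/search/results", "/search/help", "/private/notes"):
        rule = find_blocking_rule(ROBOTS_TXT, agent, path)
        verdict = f"blocked by line {rule.line_no} ({rule.kind}: {rule.path})" if rule else "allowed"
        print(f"{agent:9} {path:16} {verdict}")
```

In this example `bingbot` has its own group, so the `*` rule on `/private/` does not apply to it. `adidxbot` has no group of its own and falls back to the `*` rules. This is exactly the kind of subtlety a per-user-agent report makes visible.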

Users can also edit their robots.txt file in a dedicated editor.

The editor lets website owners and webmasters test submitted URLs against the content being edited, so they can catch errors on the spot.

Users can also download the edited robots.txt file.

According to Bing, the tool "guides them step-by-step from fetching the latest file to uploading the same file at the appropriate address."

In its blog post, Bing wrote:

"While robots exclusion protocol gives the power to inform web robots and crawlers which sections of a website should not be processed or scanned, the growing number of search engines and parameters have forced webmasters to search for their robots.txt file amongst the millions of folders on their hosting servers, editing them without guidance and finally scratching heads as the issue of that unwanted crawler still persists."

Bing said the tester operates in a similar fashion to both Bingbot (the crawler used by the Bing search engine) and AdIdxbot (the crawler used by Bing Ads).

There is also a dropdown menu to toggle between the two, as explained on the how-to page.

For added convenience, the tool also lets website owners and webmasters manually notify Bing that their robots.txt file has been updated.

The tool should be useful to many website owners and webmasters, because the formats and syntax that robots.txt requires can sometimes be complex.

Typos, for example, can create errors that lead to suboptimal crawling.
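A misspelled directive is a good example of how quietly this can go wrong. The sketch below uses Python's standard-library `urllib.robotparser`, which skips lines whose field name it does not recognize. That sketch reflects only Python's parser; it does not show how Bing handles such lines. The URL and the `can_bingbot_fetch` helper are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical page that the site owner intends to keep out of the crawl.
URL = "https://www.example.com/private/report.html"


def can_bingbot_fetch(robots_lines: list[str]) -> bool:
    """Parse the given robots.txt lines and ask whether bingbot may fetch URL."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch("bingbot", URL)


correct = ["User-agent: *", "Disallow: /private/"]
typo = ["User-agent: *", "Dissallow: /private/"]  # misspelled directive

print(can_bingbot_fetch(correct))  # False: the directory is blocked
print(can_bingbot_fetch(typo))     # True: the misspelled line is ignored
```

With the typo, the group is left with no rules at all, so the parser treats the private directory as crawlable. A tester that flags unrecognized lines catches this kind of mistake before a crawler does.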

With the Robots.txt Tester, Bing lets them troubleshoot their robots.txt files quickly and easily.