Man and machine differ in many ways. One is blood and organs; the other is mere electricity running through computer chips and wires.

But the latter has somehow shown that it can actually be more human than humans. According to research from Mozilla, whose team was tasked with developing a method to evaluate the quality of text-to-speech voices, some computerized voices can pass a comprehension test with flying colors.

Mozilla described the work in a blog post that accompanies the publication of a paper co-authored by Janice Tsai and Jofish Kaye from Mozilla.

It was back in 2019 that Mozilla's Voice team developed a method to evaluate the quality of text-to-speech voices.

For quite a long time, researchers have focused on improving the quality of text-to-speech so that it sounds better to human ears. After all, computerized-sounding voices should be products of decades past. In the modern era of computing, mobile and the internet, consumers simply want better-sounding computers.

"How can we determine if a voice is enjoyable to listen to, particularly for long-form content — something you’d listen to for more than a minute or two?" asked Mozilla.

Mozilla set up an experiment, led by Jessica Colnago during her internship at the company.

The concept: Mozilla took one article, "How to Reduce Your Stress in Two Minutes a Day", and recorded each voice reading that article.

The team then had 50 people on Mechanical Turk listen to each recording, with 50 different people each time. Each person heard the article no more than once. At the end, the team asked them a couple of questions to check whether they were actually listening, and to see what they thought about the voice.

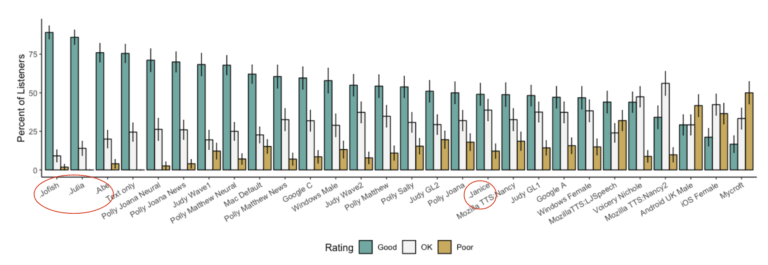

Mozilla found that, on a scale of one to five, some computer-generated voices actually performed better than humans.
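To make the comparison concrete, here is a minimal sketch of how ratings like these could be averaged per voice. It is not Mozilla's analysis code: the field names (`voice`, `rating`, `passed_check`), the decision to drop listeners who failed the listening-check questions, and the sample responses are all assumptions made up for illustration.

```python
from collections import defaultdict
from statistics import mean


def mean_rating_per_voice(responses):
    """Average the 1-5 ratings for each voice.

    Assumption: responses from listeners who failed the
    listening-check questions are excluded before averaging.
    """
    by_voice = defaultdict(list)
    for response in responses:
        if response["passed_check"]:
            by_voice[response["voice"]].append(response["rating"])
    return {voice: mean(ratings) for voice, ratings in by_voice.items()}


# Made-up responses for illustration only; these are not Mozilla's data.
responses = [
    {"voice": "human_a", "rating": 4, "passed_check": True},
    {"voice": "human_a", "rating": 3, "passed_check": True},
    {"voice": "synthetic_b", "rating": 5, "passed_check": True},
    {"voice": "synthetic_b", "rating": 4, "passed_check": True},
    {"voice": "synthetic_b", "rating": 1, "passed_check": False},
]

print(mean_rating_per_voice(responses))
```

With one mean score per voice, ranking human and synthetic recordings side by side becomes a simple sort.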

What's more, the team found some generalizable patterns.

For example, listeners were 54% more likely to give a higher experience rating to the male voices Mozilla tested than to the female voices. Mozilla also analyzed the number of words spoken per minute, and found that the “just right” speed ranges from about 163 to 177 words per minute.
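The speaking-rate figure is simple arithmetic: the word count divided by the recording length in minutes. The sketch below shows that calculation and checks it against the 163 to 177 range reported above. The function names are hypothetical, and counting words by splitting on whitespace is an assumption, since the article does not say how Mozilla counted them.

```python
def words_per_minute(text, duration_seconds):
    """Return the speaking rate for a transcript and its recording length.

    Assumption: a "word" is any whitespace-separated token.
    """
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return len(text.split()) / (duration_seconds / 60)


def in_just_right_range(wpm, low=163, high=177):
    """Check a rate against the 163-177 words-per-minute range from the article."""
    return low <= wpm <= high


# Illustrative input: a 340-word transcript read aloud in two minutes.
transcript = "word " * 340
rate = words_per_minute(transcript, 120)
print(rate, in_just_right_range(rate))
```

A 340-word reading that lasts two minutes works out to 170 words per minute, which falls inside the reported range.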

"And while we still have some licensing questions to figure out, making sure we can create sustainable, publicly accessible, high quality voices, it’s exciting to see that we can do something in an open source way that is competitive with very well funded voice efforts out there, which don’t have the same aim of being private, secure and accessible to all," said Mozilla.