Many companies and organizations rely on proprietary software, but open-source software has long been the solution for the rest of the digital world.

While nobody can really predict the future, what is certain is that the world is changing rapidly.

It faces many challenges, from climate change, the pandemic, the nuclear arms race, and the rivalry between East and West to the AI of the future. There is also the chance of a worsening political conflict or, perhaps, a nuclear apocalypse.

To ensure that future generations can still use open-source software, whatever the future holds, GitHub is preserving 21 terabytes of repository data in the Arctic through its 'GitHub Arctic Code Vault' program.

On its Archive Program web page, GitHub said:


“Today, almost every piece of software relies on open source. It’s the lifeblood of the internet, but much of the world’s data is ephemeral, kept on storage media expected to survive only a few decades,” said GitHub vice president of special projects Thomas Dohmke.

“As the home for all developers, GitHub is in a unique position and we believe has the responsibility to protect and preserve the collaborative work of millions of developers around the world.”

To make this possible, GitHub has partnered with experts in anthropology, archaeology, history, linguistics, archival science, futurism, and more, who advise it on what content should be included in the archive and how best to communicate with its inheritors.

To store the equivalent of the "10,000 most-starred and most-depended-upon repositories" of source code files in 10,000 folders, the company uses 186 reels of silver halide on polyester film, each around 3,500 feet long, provided and encoded by Piql, a Norwegian company that specializes in very-long-term data storage.

The result is a self-contained, technology-independent storage medium that can last a thousand years inside a disused coal mine, known as Mine 3, on the island of Spitsbergen in Svalbard, Norway.

“We chose to store GitHub’s public repositories in the Arctic World Archive in Svalbard because it is one of the most remote and geopolitically stable places on Earth and is about a mile down the road from the famous Global Seed Vault,” said Dohmke.

The facility, which opened on March 27, 2017, sits in permafrost created by the Arctic climate. The vault is situated deep enough to withstand even nuclear and EMP weapons.

"A thousand years is a very long time," said GitHub, noting that landmarks now in ruins, such as Angkor Wat, Great Zimbabwe, and Machu Picchu, had not yet been built a thousand years ago.

Because the data is stored on offline film reels, GitHub doesn’t have to worry about power outages.

To ensure that the initiative survives long into the future, GitHub also follows a principle digital archivists call 'LOCKSS' (short for 'Lots Of Copies Keeps Stuff Safe'), storing copies of its code in multiple locations.

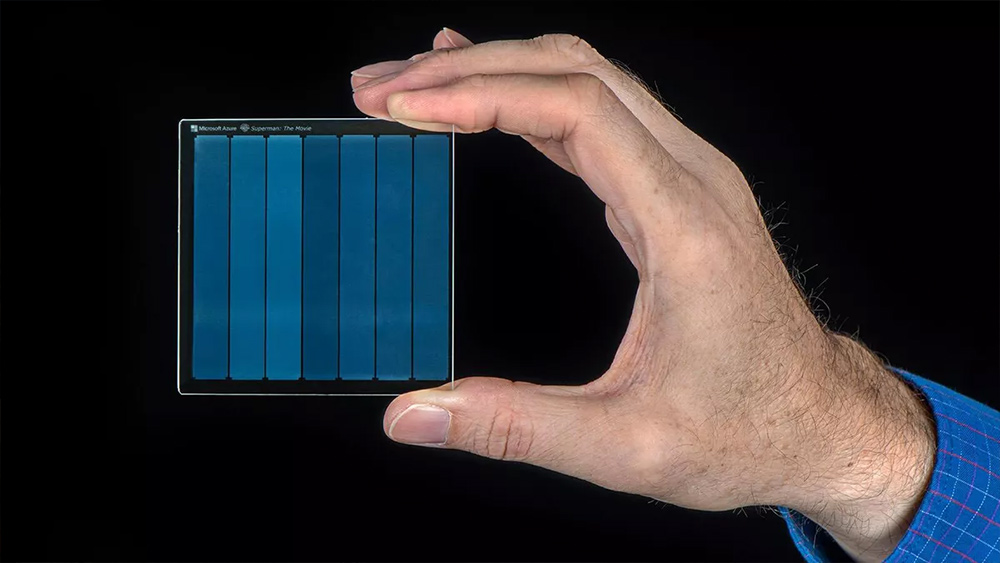

As if that weren't enough, GitHub is also working with Microsoft's Project Silica to archive all active public repositories for 10,000 years.

"This program builds on the best ideas we have today," GitHub added, suggesting that the archive contains as much information about human computing as GitHub could squeeze into it.

The data GitHub is burying in the far north includes technical guides to QR decoding, file formats, character encodings, and other critical metadata so that future users can convert the raw data back into source code.

The treasures buried in this glacier-covered Arctic archipelago, home to polar bears, also include the original source code for MS-DOS (the precursor to Microsoft Windows), the open-source code that powers Bitcoin and other cryptocurrencies, Facebook's React, the publishing platform WordPress, and many more.

The archive includes an overview of how to use it.

There is also a Tech Tree that will serve as a quickstart manual on software development and computing, bundled with a user guide.

It will describe how to work backwards from raw data to source code and extract projects, directories, files, and data formats.

“The Arctic Code Vault was just the beginning of the GitHub Archive Program’s journey to secure the world’s open source code,” said Thomas Dohmke. “We’ve partnered with multiple organisations and advisers to help us maximize the GitHub Archive Program’s value and preserve all open-source software for future generations.”

"Inspired by the Long Now’s Manual for Civilization, the Tech Tree will consist primarily of existing works, selected to provide a detailed understanding of modern computing, open source and its applications, modern software development, popular programming languages, etc.," GitHub said in a blog post.

Whatever the future holds, if humanity ever ends up with modern computers but no software to run on them, GitHub said that the archive and its Tech Tree "could be extremely valuable."

"The archive will also include information and guidance for applying open source, with context for how we use it today, in case future readers need to rebuild technologies from scratch. Like the golden records of Voyager 1 and 2, it will help to communicate the story of our world to the future."

While the vault is aimed at preserving the information for future generations, the value of the data for those in the future is more likely to be historical.

At the very least, GitHub hopes that the technology people are working on today won't be lost to "a tomorrow that carelessly considers it irrelevant—until an unexpected use for our software is discovered."

“We will protect this priceless knowledge by storing multiple copies, on an ongoing basis, across various data formats and locations,” Dohmke said.

The GitHub Archive Program's FAQ says that the company plans to re-evaluate the program and its storage medium every five years, at which point it will decide whether to take another snapshot.