There was a time when creating "magic" on screen relied on Hollywood talent and companies with deep pockets. Those days are gone.

Ever since deepfakes made their debut on Reddit, they have been used for a range of nefarious purposes, from disinformation campaigns to inserting people's faces onto porn stars' bodies.

With advances in the technology and in computer hardware, media manipulated with deepfakes is becoming much more difficult to detect.

In the cat-and-mouse game of making manipulated media easier to identify, this technology fights deepfake AI with AI.

It does so simply by looking at the light reflected in the eyes.

Developed by computer scientists at the University at Buffalo in New York, U.S., the AI can test portrait-style photos to determine whether they are real or manipulated, with 94% accuracy.


The AI accomplishes this feat by looking deep into the eyes and nowhere else.

It is well known that the surface of the human eye (the cornea) reflects light. When illuminated, this mirror-like surface produces reflection patterns, and it is these patterns that the AI uses to judge whether a face is real or a deepfake.

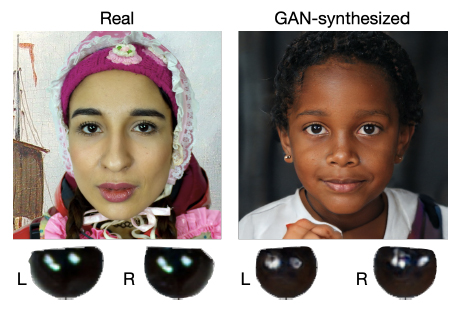

The method works like this: in a photo of a real face taken by a camera, both eyes show similar reflections. This is expected, because both eyes are seeing the same scene (whether or not the person is looking at the camera). Deepfake images and videos, however, are synthesized by GANs (generative adversarial networks), which typically fail to reproduce these reflections accurately.

As a result, deepfakes often exhibit inconsistencies between the two eyes, such as reflections with different geometric shapes or in mismatched locations.

What's more, deepfakes may produce eyes whose corneas differ in size. This is likely because deepfake models generate images based on the many similar photos they learned from.

Simply put, the University at Buffalo AI searches for these discrepancies by mapping out the face and analyzing the light reflected from each eyeball.
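To make the location and size cues concrete, here is a minimal Python sketch built on our own assumptions, not the researchers' code. Each eye is summarized by a hypothetical `EyeObservation` holding the detected cornea circle (center and radius, in pixels) and the centroid of its brightest reflection. The highlight position is expressed relative to its own cornea, so two real eyes reflecting the same scene should give similar offsets, and their radii should be nearly equal. The tolerance values are illustrative placeholders.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class EyeObservation:
    """Hypothetical per-eye summary; all values are in pixels."""
    cx: float      # cornea center, x
    cy: float      # cornea center, y
    radius: float  # cornea radius
    hx: float      # highlight centroid, x
    hy: float      # highlight centroid, y

    def normalized_offset(self) -> Tuple[float, float]:
        # Highlight position relative to its own cornea, in units of cornea radius,
        # so eyes that appear at different pixel sizes can be compared directly.
        return ((self.hx - self.cx) / self.radius, (self.hy - self.cy) / self.radius)


def eye_consistency(left: EyeObservation, right: EyeObservation,
                    max_offset_gap: float = 0.25,
                    max_radius_ratio: float = 1.15) -> Dict[str, object]:
    """Compare highlight location and cornea size between the two eyes.

    The default tolerances are illustrative assumptions, not published values.
    """
    lx, ly = left.normalized_offset()
    rx, ry = right.normalized_offset()
    # Distance between the two normalized highlight positions.
    offset_gap = math.hypot(lx - rx, ly - ry)
    # Ratio of the larger cornea radius to the smaller one (1.0 = same size).
    radius_ratio = max(left.radius, right.radius) / min(left.radius, right.radius)
    return {
        "offset_gap": offset_gap,
        "radius_ratio": radius_ratio,
        "location_mismatch": offset_gap > max_offset_gap,
        "size_mismatch": radius_ratio > max_radius_ratio,
    }
```

For example, two eyes with the same cornea radius and the same highlight offset give an `offset_gap` of 0 and raise no flags, while a second eye whose cornea is 30% larger and whose highlight sits on the opposite side is flagged on both counts.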

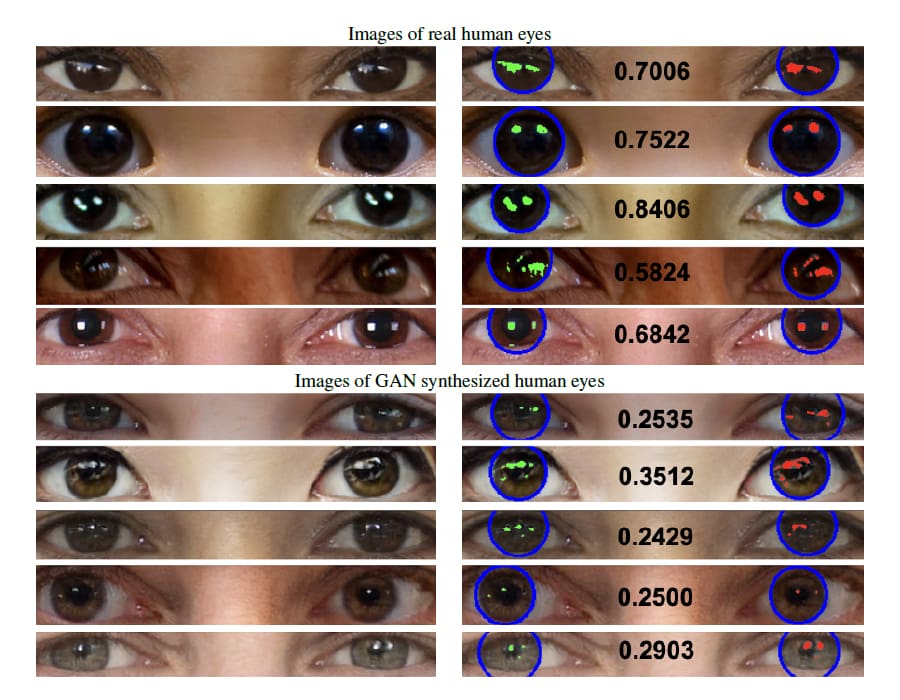

The AI then generates a score that serves as a similarity metric. The smaller the score, the more likely the subject is a deepfake.
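One plausible similarity metric, and an assumption on our part rather than something stated above, is the intersection-over-union (IoU) of the two eyes' highlight masks after the eye crops have been aligned to a common size. In this sketch each mask is a set of `(row, col)` pixel coordinates. Identical reflections score 1.0, non-overlapping ones score 0.0, and an eye with no detectable highlight also scores 0.0, consistent with the idea that a smaller score points toward a deepfake. The `is_suspect` helper and its 0.5 threshold are hypothetical.

```python
from typing import Set, Tuple

# A binary highlight mask, stored as the set of (row, col) pixels marked as highlight.
Mask = Set[Tuple[int, int]]


def highlight_iou(left: Mask, right: Mask) -> float:
    """IoU of two aligned highlight masks: 1.0 = identical, 0.0 = disjoint or missing."""
    if not left or not right:
        # A missing reflection in either eye is treated as maximally dissimilar.
        return 0.0
    return len(left & right) / len(left | right)


def is_suspect(left: Mask, right: Mask, threshold: float = 0.5) -> bool:
    """Hypothetical decision rule: flag the face when similarity falls below the threshold."""
    return highlight_iou(left, right) < threshold
```

The threshold is purely illustrative; in practice it would be tuned on labeled sets of real and GAN-generated faces.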

According to the scientists' pre-print paper, existing methods for detecting GAN-synthesized faces fall into three categories:

- Methods that focus on signal traces or artifacts left by GANs, such as color differences, signal-level traces, or fingerprints in the noise residuals.

- Methods that analyze the frequency domain, for example to find artifacts introduced by the GAN's upsampling.

- Methods that look for physical or physiological inconsistencies in GAN-generated faces, for example by analyzing the distribution of facial landmarks.

The two corneal specular highlights are shown alongside the detections. (Credit: Shu Hu, Yuezun Li, Siwei Lyu)

Here, the computer scientists take the eye-based approach. Reasoning that human eyes are distinctive enough to act almost like fingerprints in telling individuals apart, they set out to train an AI that extracts corneal specular highlights and uses them to detect deepfakes.

For this method to work, however, the AI needs a high-resolution face image in which it can clearly see the eyes and detect the facial landmarks around them. It uses these landmarks to locate the circle of the corneal area and extract the corneal specular highlights.
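The extraction step can be sketched as follows. This assumes the cornea circle (center and radius) has already been located from the eye landmarks, and it uses a plain brightness threshold as a stand-in for whatever highlight segmentation the researchers actually use. The image is a grayscale grid of 0-255 values given as a list of rows; `extract_highlight` and its `threshold` default are hypothetical.

```python
import math
from typing import List, Set, Tuple


def extract_highlight(gray: List[List[int]], cx: float, cy: float, radius: float,
                      threshold: int = 200) -> Set[Tuple[int, int]]:
    """Return (row, col) pixels inside the cornea circle with intensity >= threshold."""
    highlight: Set[Tuple[int, int]] = set()
    # Scan only the circle's bounding box, clipped to the image borders.
    r0 = max(0, int(math.floor(cy - radius)))
    r1 = min(len(gray) - 1, int(math.ceil(cy + radius)))
    for row in range(r0, r1 + 1):
        c0 = max(0, int(math.floor(cx - radius)))
        c1 = min(len(gray[row]) - 1, int(math.ceil(cx + radius)))
        for col in range(c0, c1 + 1):
            # Keep the pixel only if it lies inside the circle and is bright enough.
            inside = (col - cx) ** 2 + (row - cy) ** 2 <= radius ** 2
            if inside and gray[row][col] >= threshold:
                highlight.add((row, col))
    return highlight
```

Bright pixels outside the circle, such as glints on skin or glasses frames, are ignored, which is one reason the method depends on locating the cornea accurately.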

After extracting this data, the AI estimates the camera's internal parameters and the light-source directions from the perspective distortion of the corneal limbus and the locations of the specular highlights in the two eyes. These estimates can reveal digital images composited from real human faces photographed under different lighting.
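The geometry behind the light-direction step can be illustrated with a much simpler model than the one described above. This sketch assumes an orthographic camera looking along the z-axis and treats the cornea as a sphere whose projected radius equals the detected circle's radius, ignoring the limbus-based perspective correction and camera calibration entirely. A highlight at image offset (dx, dy) from the cornea center then lies where the surface normal is n = (dx/r, dy/r, sqrt(1 - (dx/r)² - (dy/r)²)), and the law of reflection gives the light direction l = 2(n·v)n - v, with v = (0, 0, 1) pointing toward the camera. The function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def light_direction(dx: float, dy: float, radius: float) -> Vec3:
    """Unit light direction implied by a highlight at (dx, dy) from the cornea center.

    Simplified model (an assumption): orthographic camera viewing along z and a
    spherical cornea whose projected radius equals `radius`.
    """
    nx, ny = dx / radius, dy / radius
    nz_sq = 1.0 - nx * nx - ny * ny
    if nz_sq < 0:
        raise ValueError("highlight lies outside the cornea circle")
    n = (nx, ny, math.sqrt(nz_sq))  # outward surface normal at the highlight
    v = (0.0, 0.0, 1.0)             # direction from the eye toward the camera
    n_dot_v = n[2]
    # Law of reflection: the light arrives from l = 2(n.v)n - v (already unit length).
    return tuple(2.0 * n_dot_v * ni - vi for ni, vi in zip(n, v))


def angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))
```

With a single distant light, the estimates from the two eyes should point in nearly the same direction, so a large `angle_between` is one sign of a synthesized or composited face. Because this model ignores perspective and the distance between the eyes, small disagreements are expected even for genuine photos.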

If the subject's irises are inconsistent in color, or if the specular reflections in the eyes are missing or appear simplified as white blobs, the AI gives the subject a lower score.
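For the iris-color cue, a simple proxy (our assumption, not necessarily the researchers' measure) is the distance between the average colors of the two irises, computed from RGB pixels sampled inside each iris. A larger gap would push the face's score down. Both helper names are hypothetical.

```python
import math
from typing import Iterable, Tuple

RGB = Tuple[float, float, float]


def mean_rgb(pixels: Iterable[RGB]) -> RGB:
    """Average color of the (r, g, b) pixels sampled from one iris."""
    pixels = list(pixels)
    if not pixels:
        raise ValueError("no iris pixels supplied")
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def iris_color_gap(left: Iterable[RGB], right: Iterable[RGB]) -> float:
    """Euclidean distance between the two irises' mean colors (0 = identical)."""
    return math.dist(mean_rgb(left), mean_rgb(right))
```

Averaging over many pixels makes the comparison less sensitive to the specular highlight itself and to local noise, at the cost of missing finer texture differences.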

As the old saying goes, "the eyes are the window to the soul." Here, deepfakes literally have no soul.

It should be noted, though, that more advanced deepfakes handle these inconsistencies better than others, meaning the cat-and-mouse game continues.
