In a digitized world, data comes in the form of bits and bytes, which makes much of it easy to manipulate.

Deepfakes are one of the most notorious examples. The technology, which began on Reddit before spreading across the digital sphere, allows anyone with even a basic grasp of digital manipulation to take one person's face and put it on someone else's body. It quickly became a trend.

At first, people swapped celebrities' faces onto porn stars' bodies. Then they began creating deepfakes of political figures and other influential people saying things they never said. After that came revenge porn, and more.

The damage is real, and sometimes it is irreversible.

Knowing how devastating deepfakes can be, researchers around the world have tried to stem the flow of deepfakes by creating methods to detect them. But it is a race between two sides, and both are getting better as technology advances and data becomes more abundant.

This time, "in partnership with Michigan State University (MSU)," researchers at Facebook presented a "research method of detecting and attributing deepfakes that relies on reverse engineering from a single AI-generated image to the generative model used to produce it."

In other words, Facebook has developed a way to trace where deepfakes “in real-world settings” come from, in situations where the “deepfake image itself is often the only information detectors have to work with.”

While there are ways to detect deepfakes, spotting them is becoming increasingly difficult for humans.

Facebook's answer is to train an AI to do the detecting. This AI can establish whether a piece of media is a deepfake, whether it is a still image or a single video frame. Beyond that, the researchers said, the AI can also identify characteristics of the AI that was used to create the deepfake in the first place.

It can do so, the researchers claim, “no matter how novel the technique.”

Facebook's AI does this by looking for even the slightest similarities among a collection of deepfakes to see whether they share an origin: for example, unique patterns such as small speckles of noise or slight oddities in the color spectrum of an image.
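To make the idea of a “fingerprint” more concrete, here is a minimal sketch in Python with NumPy. It is not Facebook's method, which relies on a trained neural network; it swaps in a classic hand-crafted approach instead. Each image's noise residual (the image minus a blurred copy of itself) is estimated, the residuals of images believed to come from the same generator are averaged, and a new image's residual is compared to that average with a correlation score. Grayscale input, the 3×3 box blur, the synthetic "generators", and all function names are assumptions made for illustration.

```python
import numpy as np


def noise_residual(img: np.ndarray) -> np.ndarray:
    """High-pass residual: the image minus a 3x3 box-blurred copy of itself."""
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    # Average the 9 shifted copies of the padded image to get the blur.
    blurred = sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0
    return img - blurred


def estimate_fingerprint(images) -> np.ndarray:
    """Average the residuals: varying content tends to cancel, a shared pattern remains."""
    return np.mean([noise_residual(im) for im in images], axis=0)


def correlation(a: np.ndarray, b: np.ndarray) -> float:
    """Normalized correlation between two residual maps (1.0 = identical pattern)."""
    a = a.ravel() - a.mean()
    b = b.ravel() - b.mean()
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Two hypothetical generators, each leaving its own fixed noise pattern.
    pattern_a = rng.normal(0.0, 1.0, (64, 64))
    pattern_b = rng.normal(0.0, 1.0, (64, 64))

    def fake_image(pattern):
        # Random "content" plus the generator's fixed pattern.
        return rng.normal(0.0, 1.0, (64, 64)) + pattern

    fp_a = estimate_fingerprint([fake_image(pattern_a) for _ in range(50)])
    print("new image from A:", round(correlation(fp_a, noise_residual(fake_image(pattern_a))), 2))
    print("new image from B:", round(correlation(fp_a, noise_residual(fake_image(pattern_b))), 2))
```

In this toy setup, a fresh image from generator A should correlate clearly with A's fingerprint, while an image from generator B should score near zero. Real generator fingerprints are far subtler, which is why a learned estimator is used in practice.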

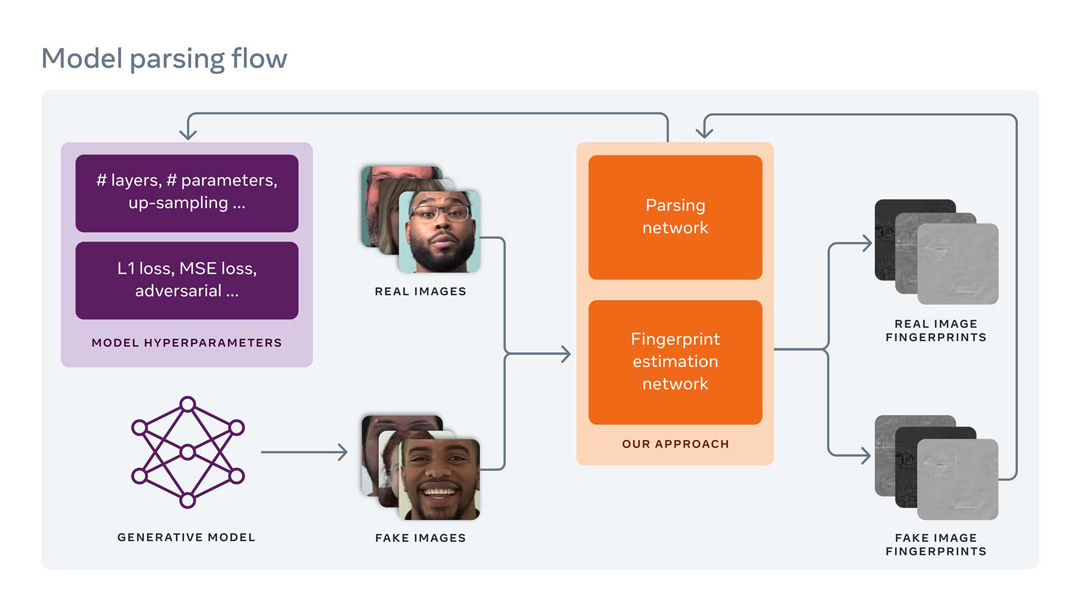

According to a Facebook AI blog post, the method is called the Fingerprint Estimation Network, or FEN.

“Deepfakes have become so believable in recent years that it can be difficult to tell them apart from real images. As they become more convincing, it’s important to expand our understanding of deepfakes and where they come from,” said Facebook in a blog post.

“In collaboration with researchers at Michigan State University (MSU), we’ve developed a method of detecting and attributing deepfakes. It relies on reverse engineering, working back from a single AI-generated image to the generative model used to produce it.”

“Beyond detecting deepfakes, researchers are also able to perform what’s known as image attribution, that is, determining what particular generative model was used to produce a deepfake,” Facebook continued.

“Image attribution can identify a deepfake’s generative model if it was one of a limited number of generative models seen during training. But the vast majority of deepfakes — an infinite number — will have been created by models not seen during training. During image attribution, those deepfakes are flagged as having been produced by unknown models, and nothing more is known about where they came from, or how they were produced.”
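The limitation Facebook describes can be illustrated with a toy closed-set attributor. In this minimal Python sketch (an illustration under assumptions, not Facebook's code), each generative model seen during training is represented by a fingerprint vector. A query is assigned to the most similar known model, and anything below a similarity threshold is labeled “unknown”, which is exactly where attribution stops being informative. The model names, the vectors, and the 0.5 threshold are hypothetical.

```python
import numpy as np


def attribute(query, known_fingerprints, threshold=0.5):
    """Return (label, score): the best-matching known model, or 'unknown'."""
    q = np.asarray(query, dtype=float)
    q = q / np.linalg.norm(q)
    best_label, best_score = "unknown", -1.0
    for label, fp in known_fingerprints.items():
        fp = np.asarray(fp, dtype=float)
        score = float(np.dot(q, fp / np.linalg.norm(fp)))  # cosine similarity
        if score > best_score:
            best_label, best_score = label, score
    # Below the threshold, attribution can only say "not one of the models we know".
    if best_score < threshold:
        return "unknown", best_score
    return best_label, best_score


# Hypothetical fingerprints for two models seen during training.
known = {"model_a": [1.0, 0.0, 0.0], "model_b": [0.0, 1.0, 0.0]}
print(attribute([0.9, 0.1, 0.0], known))  # closest to model_a
print(attribute([0.0, 0.0, 1.0], known))  # matches neither, so "unknown"
```

Model parsing, as Facebook describes it, aims to go further than the second case: instead of stopping at “unknown”, it tries to estimate properties of the unseen model itself.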

“Through this groundbreaking model parsing technique, researchers will now be able to obtain more information about the model used to produce particular deepfakes. Our method will be especially useful in real-world settings where the only information deepfake detectors have at their disposal is often the deepfake itself,” Facebook concluded.

Put another way, Facebook can identify the minute fingerprints in an image and infer details of the neural network that created the fabricated media.

“I thought there’s no way this is going to work,” said Tal Hassner at Facebook. “How would we, just by looking at a photo, be able to tell how many layers a deep neural network had, or what loss function it was trained with?”

To create this deepfake-detecting AI, Hassner and his colleagues used a database of 100,000 deepfake images generated by 100 different generative models, each producing 1,000 images.

Some of those images were used to train the AI model, while others were held back and presented later to the model as images of unknown origin.
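The arithmetic of that setup is simple (100 models × 1,000 images = 100,000 images). For held-back images to be of genuinely “unknown origin”, one natural approach is to hold out whole models rather than individual images. The minimal Python sketch below shows such a split. The 20-model hold-out, the seed, and the model naming scheme are assumptions, since the article does not say how the images were divided.

```python
import random

NUM_MODELS = 100
IMAGES_PER_MODEL = 1000
NUM_HELD_OUT_MODELS = 20  # hypothetical; the article gives no figure


def split_by_model(seed=0):
    """Split model IDs so held-out images come only from models unseen in training."""
    model_ids = [f"gm_{i:03d}" for i in range(NUM_MODELS)]  # hypothetical names
    random.Random(seed).shuffle(model_ids)
    held_out = sorted(model_ids[:NUM_HELD_OUT_MODELS])
    train = sorted(model_ids[NUM_HELD_OUT_MODELS:])
    return train, held_out


train_models, unseen_models = split_by_model()
print(len(train_models) * IMAGES_PER_MODEL, "training images")  # 80000 training images
print(len(unseen_models) * IMAGES_PER_MODEL, "unknown-origin images")  # 20000 unknown-origin images
```

Splitting by model keeps every image from a held-out generator out of training, so the network cannot simply memorize that generator's fingerprint.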

“What we’re doing is looking at a photo and trying to estimate what is the design of the generative model that created it, even if we’ve never seen that model before,” continued Hassner.

Hassner, who declined to explain how Facebook would use this AI or how accurate its estimates were, described the work as a cat-and-mouse game.

“We’re developing better identifying models while others are developing better and better generative models,” he said. “I don’t doubt that at some point there’ll be a method that will fool us completely.”
