An AI is only as good as the data it learns from. And more often than not, we humans are unfair, carrying a variety of gender, race, class, and caste biases.

These biases are passed on to machines as humans teach computers to become smarter. Google has run into this too, and the problem concerned the company enough that it decided to block Gmail's Smart Compose AI tool from suggesting gender-based pronouns.

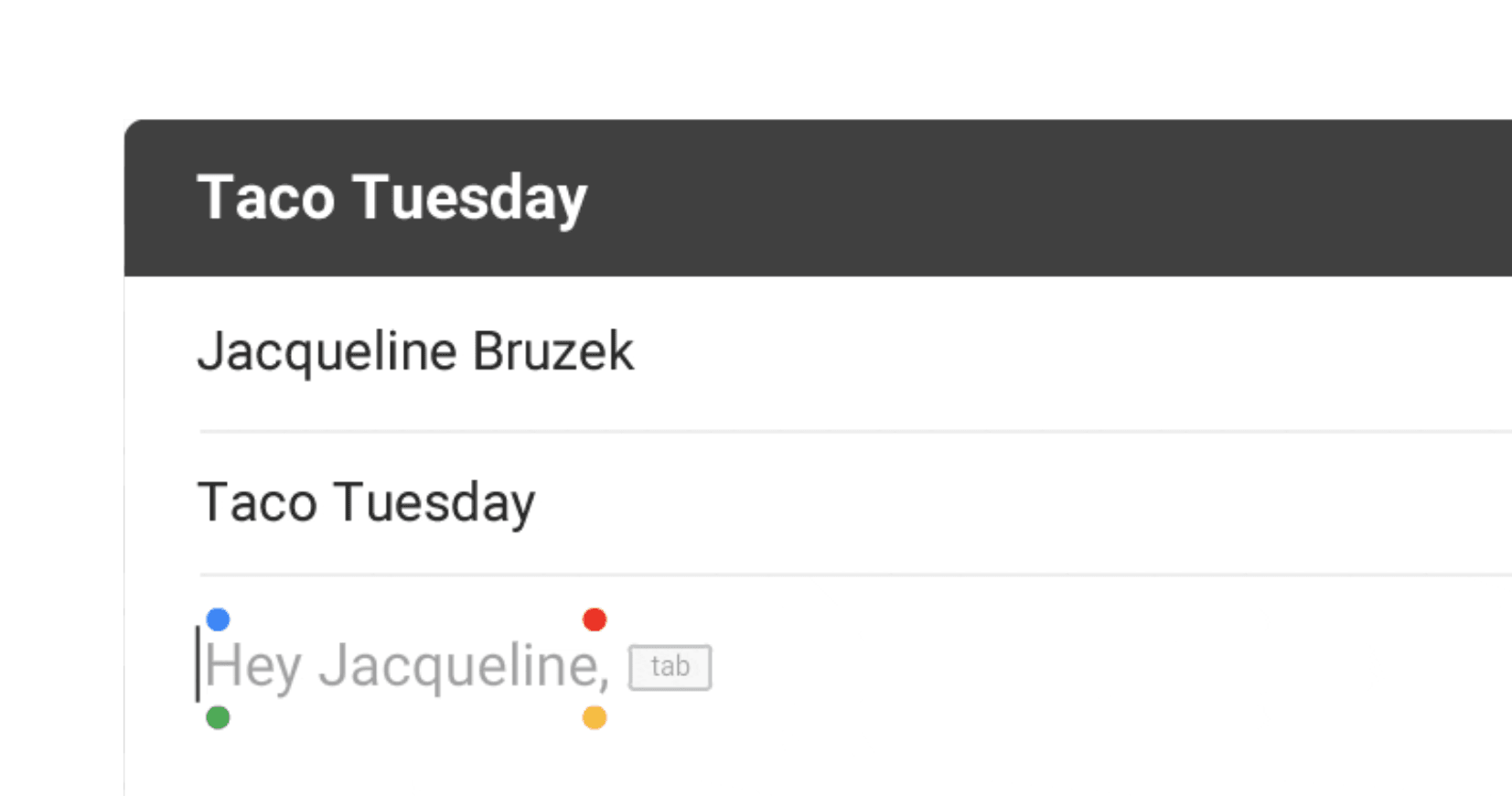

Gmail's Smart Compose was first introduced back in May 2018. It helps users complete sentences automatically as they type. This is similar to Google Search's autocomplete feature, which suggests words as users continue typing.

But in Gmail's case, its natural language generation (NLG), which learns patterns and relationships between words from emails, literature, and web pages in order to write sentences, turned out to be biased toward men.

Gmail product manager Paul Lambert said the problem was discovered in January, when a company researcher typed "I am meeting an investor next week," and Smart Compose suggested the follow-up question "Do you want to meet him?" instead of "her."

This bias toward the male gender arose because the AI assumed the investor was likely to be a man and very unlikely to be a woman. The AI learned this from the data it got from emails written and received by 1.5 billion Gmail users around the world.
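To make the mechanism concrete, here is a toy sketch in Python. It is not how Smart Compose actually works (the real model is far more sophisticated); it is a simple word-counting predictor over a hypothetical, invented four-sentence corpus, meant only to show how a skew in the training data turns directly into a skewed suggestion. The corpus and the function name `suggest_next_word` are assumptions made for illustration.

```python
from collections import Counter
from typing import Optional

# Hypothetical toy corpus of follow-up questions. The 3-to-1 skew toward
# "him" is invented purely for illustration; it is not real Gmail data.
corpus = [
    "do you want to meet him",
    "do you want to meet him",
    "do you want to meet him",
    "do you want to meet her",
]


def suggest_next_word(prefix: str, sentences: list) -> Optional[str]:
    """Return the word that most often follows `prefix` in `sentences`."""
    prefix_words = prefix.split()
    n = len(prefix_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Slide over every position where the prefix is followed by one more word.
        for i in range(len(words) - n):
            if words[i:i + n] == prefix_words:
                counts[words[i + n]] += 1
    if not counts:
        return None  # prefix never seen, so there is nothing to suggest
    # The most frequent continuation wins, so the majority pattern dominates.
    return counts.most_common(1)[0][0]


print(suggest_next_word("want to meet", corpus))  # him
```

Nothing in the counting logic "decides" the investor is a man; the model simply reproduces whichever continuation was most common in what it saw.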

In the real world, this may not matter much, especially to those who don't use Gmail to compose or receive emails in English. But in places where feminism is pushing for gender equality, a push that fueled part of the #MeToo movement, for example, this AI bias may not be well received.

Things can get even more concerning when the recipient is a transgender person who prefers to be addressed as "they".

This is not the first time Google has messed up.

Because of its algorithms and its extensive use of AI to manage the data it handles, Google has seen its image recognition feature fail in the past. At that time, Google's AI classified black people as "gorillas", a term with a racist history.

Google Search once even suggested the anti-Semitic query "are jews evil" when users sought information about Jews.

Google has also previously classified many women as middle-aged men, after its AI learned from data patterns that categories such as "Computers and Electronics" or "Parenting" were dominated by men in their 40s.

As for Gmail's issue, internet and smartphone users have grown accustomed to embarrassing gaffes from autocorrect features. But Google doesn't want to take any chances, especially now that gender issues are reshaping politics and society, and critics are scrutinizing potential biases in AI like never before.

Because the risk that Gmail's Smart Compose AI might predict someone's gender identity incorrectly and offend users is too high, Google decided to block gender-based pronouns altogether.
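Google has not published how the block is implemented. As a rough illustration only, one simple approach is to discard any candidate completion containing a gendered pronoun before it is ever shown to the user. The pronoun list, the candidate sentences, and the function names `is_allowed` and `filter_suggestions` below are all hypothetical.

```python
import re

# Hypothetical block list; Google has not disclosed the exact list it uses.
GENDERED_PRONOUNS = {"he", "him", "his", "himself", "she", "her", "hers", "herself"}


def is_allowed(suggestion: str) -> bool:
    """Return False if the suggestion contains any gendered pronoun."""
    # Lowercase and split into word tokens, ignoring punctuation like "?".
    words = re.findall(r"[a-z']+", suggestion.lower())
    return not any(word in GENDERED_PRONOUNS for word in words)


def filter_suggestions(suggestions: list) -> list:
    """Keep only the candidate completions that pass the pronoun check."""
    return [s for s in suggestions if is_allowed(s)]


candidates = ["Do you want to meet him?", "Do you want to schedule a call?"]
print(filter_suggestions(candidates))  # ['Do you want to schedule a call?']
```

A blunt filter like this trades away some helpful suggestions in exchange for never guessing gender at all, which matches the trade-off Lambert describes.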

"Not all 'screw ups' are equal," Lambert said. Gender is "a big, big thing" to get wrong.
