Algorithms have helped many people automate tasks. While they can certainly lift much of the burden, that doesn't mean they are free of problems.

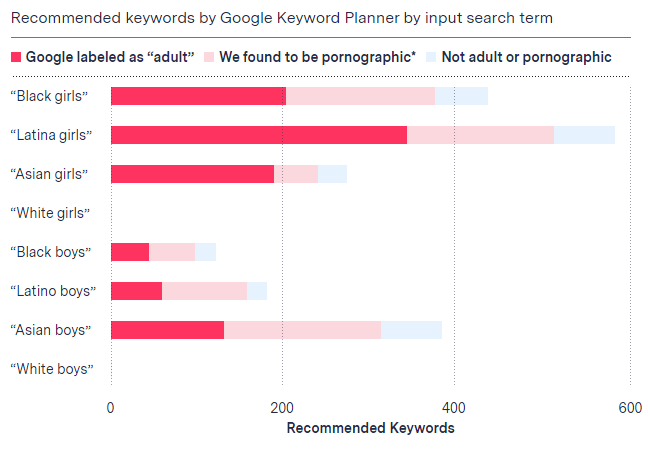

Google Ads' Keyword Planner is a tool that helps advertisers choose the best keywords to associate with their ads. The free tool, meant for basic keyword analysis, was found to associate "Black girls", "Latin girls", and "Asian girls" with porn-related searches.

According to a report from The Markup, an American nonprofit organization focused on data-driven journalism, the Google tool offered hundreds of misleading keyword suggestions related to those ethnicities.

"Searches in the keyword planner for 'boys' of those same ethnicities also primarily returned suggestions related to pornography," said the report, while "searches for 'White girls' and 'White boys', however, returned no suggested terms at all."

Google appears to have blocked results from terms combining a race or ethnicity and either “boys” or “girls” from being returned by the Keyword Planner, shortly after The Markup contacted the company for comment about the issue.

The search giant quickly fixed the problem, but didn't make any official statements about it.

In a world where algorithms power many parts of computing and the web, such bias isn't anything new.

But The Markup's findings again show how algorithmic bias can hurt anyone and anything associated with it.

In this case, Google’s algorithms contained a racial bias that equated people of color with objectified sexualization, while exempting White people from any associations. Besides associating the ethnicities with porn, this also makes it difficult for advertisers to reach young Black, Latin and Asian people with their products and services.

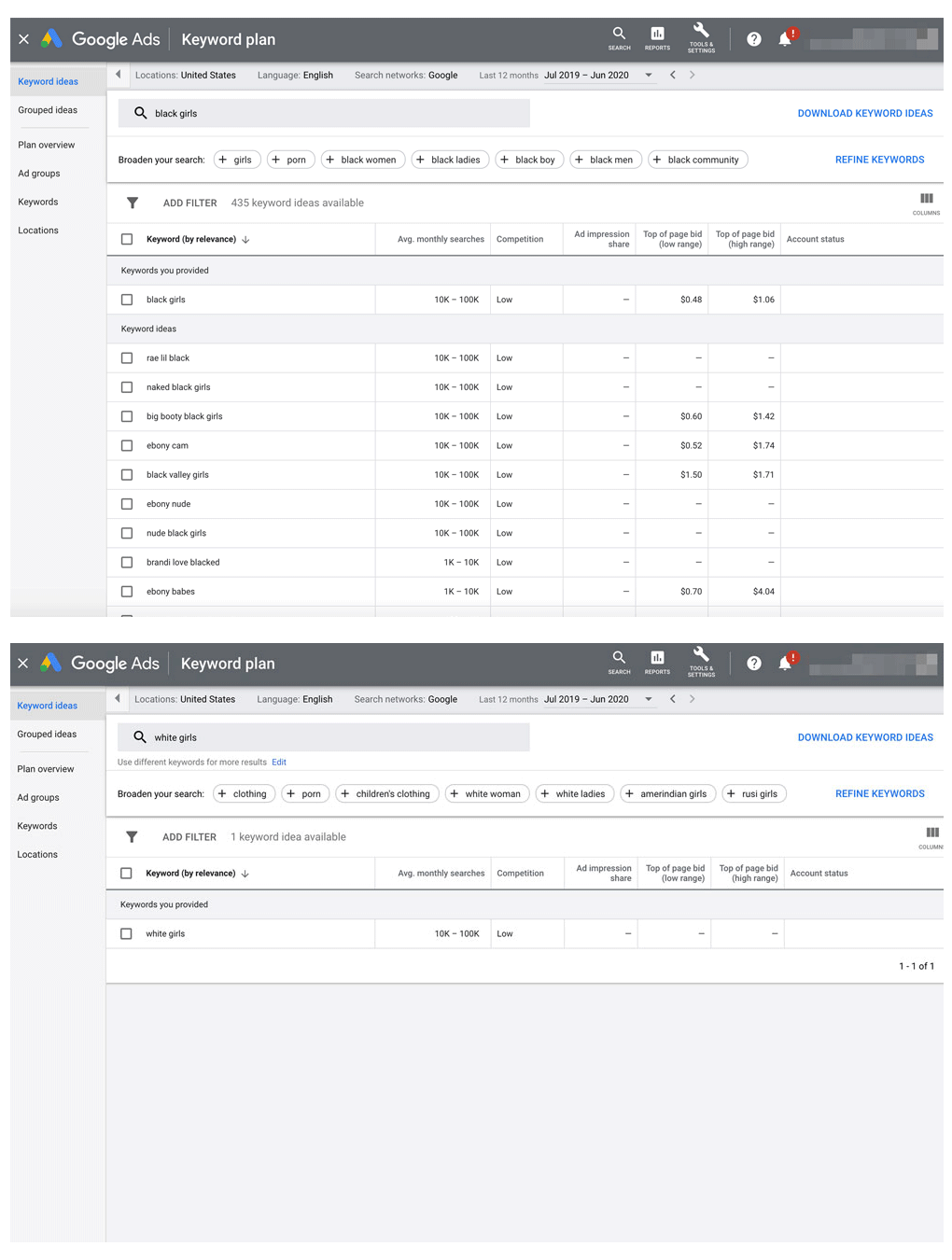

The Markup discovered this when it entered "Black girls" into Google's Keyword Planner and found that the tool returned 435 suggested terms.

Google’s own porn filter flagged 203 of the suggested keywords as “adult ideas”, meaning that Google knew that nearly half of the results for “Black girls” were adult-related.
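As a quick check on those figures, the short Python sketch below recomputes the share of flagged suggestions and the number left after the filter. It uses only the two counts reported by The Markup; the variable names are illustrative and are not taken from The Markup's repository.

```python
# Counts reported by The Markup for the "Black girls" query.
total_suggestions = 435   # suggested terms returned by Keyword Planner
flagged_as_adult = 203    # terms Google's filter marked as "adult ideas"

# Terms that passed through the filter unflagged.
remaining = total_suggestions - flagged_as_adult

# Fraction of all suggestions that the filter flagged.
flagged_share = flagged_as_adult / total_suggestions

print(f"Remaining after filter: {remaining}")
print(f"Share flagged as adult: {flagged_share:.1%}")
```

The flagged share comes to a little under 47 percent, which matches the description of "nearly half".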

"Many of the 232 terms that remained would also have led to pornography in search results, meaning that the 'adult ideas' filter wasn’t completely effective at identifying key terms related to adult content," The Markup said.

"The filter allowed through suggested key terms like 'black girls sucking d—', 'black chicks white d—' and 'Piper Perri Blacked'. Piper Perri is a White adult actress, and Blacked is a porn production company."

In response, Google spokesperson Suzanne Blackburn emailed The Markup with the following statement:

"We’ve removed these terms from the tool and are looking into how we stop this from happening again."

“Within the tool, we filter out terms that are not consistent with our ad policies. And by default, we filter out suggestions for adult content. That filter obviously did not work as intended in this case and we’re working to update our systems so that those suggested keywords will no longer be shown.”

"We’ve made many changes in our systems to ensure our algorithms serve all users and reduce problematic representations of people and other forms of offensive results, and this work continues."

"Many issues along these lines have been addressed by our ongoing work to systematically improve the quality of search results. We have had a fully staffed and permanent team dedicated to this challenge for multiple years.”

Blackburn added that just because something was suggested by the Keyword Planner tool, it doesn’t necessarily mean ads using that suggestion would have been approved to be served to users of Google’s products.

The company, however, did not explain why searches for “White girls” and “White boys” on the Keyword Planner did not return any suggested results.

Keyword Planner is one of Google’s most important tools for advertisers, helping them choose the best keywords for ads shown across Google's products and services.

With Google Ads generating more than $134 billion in revenue in 2019 alone, this kind of issue is certainly concerning and disheartening.

This case, however, isn't the first time Google's algorithms have been caught exhibiting racial bias.

Back in 2012, for example, it was reported that searching for "Black girls" regularly surfaced porn websites in Google Search's top results. In 2013, it was revealed that searching for Black names on Google was far more likely to surface ads about arrest records associated with those names than searching for White names.

In 2015, Google Photos was found labeling photos of Black people as gorillas. And in 2016, it was reported that searching for "beautiful woman" would surface more White people than people of color, and searching for "ugly woman" would surface more Black and Asian women than White women.

That said, it should be noted that algorithms trained on data are only as good as the data itself.

This is explained by Blackburn.

She said that because Google’s products are constantly incorporating data from the web, biases and stereotypes present in the broader culture can become enshrined in its algorithms.

“We understand that this can cause harm to people of all races, genders and other groups who may be affected by such biases or stereotypes, and we share the concern about this. We have worked, and will continue to work, to improve image results for all of our users,” she said.

The Markup has created a GitHub repository that contains materials to reproduce its findings.