Researchers publish papers to showcase their work, presenting their own interpretation, evaluation, or argument about a subject.

By writing research papers, researchers can share what they know and make a deliberate attempt to extend or challenge what others in the field know. To support their own work, they build on prior research, including existing papers and the work of others.

The same goes for AI, a growing field that is still under heavy research and development by researchers and scientists all over the world.

But in the AI field, many research papers apparently cannot be reproduced, or at least not easily.

One researcher was so frustrated by this that he turned to Reddit. Under the username ContributionSecure14, he posted his concerns on the r/MachineLearning subreddit.

The post quickly drew responses from many others on r/MachineLearning, one of the largest Reddit communities for AI and machine learning.

“Easier to compile a list of reproducible ones…,” one user responded.

“Probably 50%-75% of all papers are unreproducible. It’s sad, but it’s true,” another Reddit user said. “Think about it, most papers are ‘optimized’ to get into a conference. More often than not the authors know that a paper they’re trying to get into a conference isn’t very good! So they don’t have to worry about reproducibility because nobody will try to reproduce them.”

Others shared their opinions as well, some providing links to papers they had failed to reproduce.

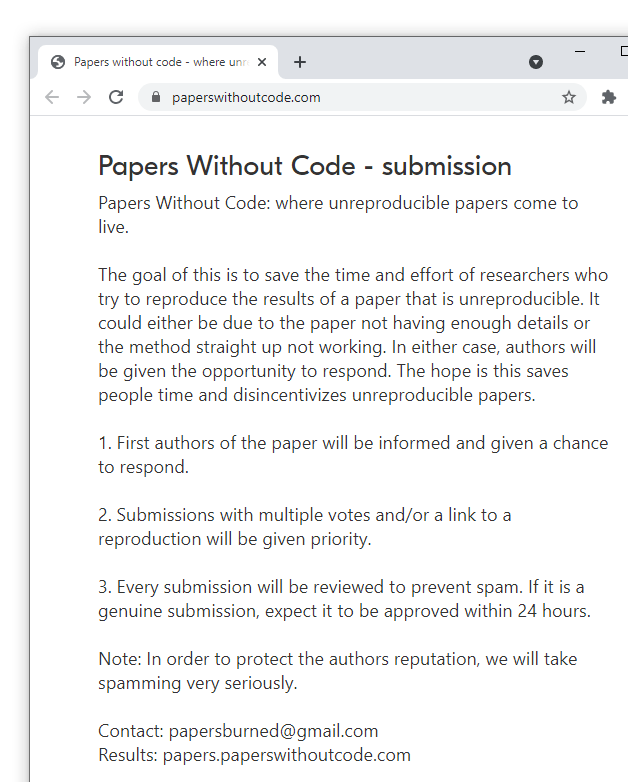

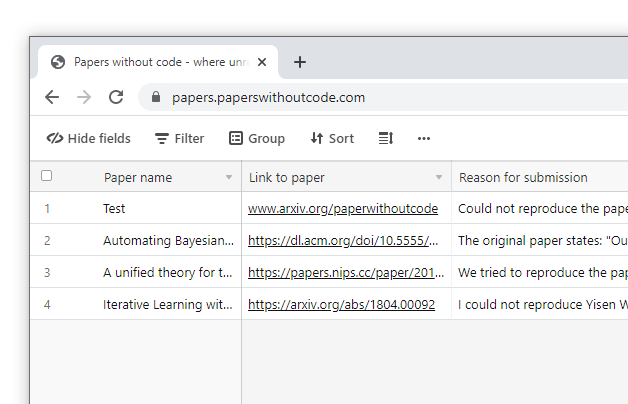

Knowing he was not alone, ContributionSecure14 created 'Papers Without Code', a website that aims to gather research papers that could not be reproduced into a single centralized list.

“I’m not sure if this is the best or worst idea ever but I figured it would be useful to collect a list of papers which people have tried to reproduce and failed,” ContributionSecure14 wrote on r/MachineLearning. “This will give the authors a chance to either release their code, provide pointers or rescind the paper. My hope is that this incentivizes a healthier ML research culture around not publishing unreproducible work.”

AI and its related fields are still under heavy research and development.

More often than not, researchers from institutions large and small, from companies and organizations, for-profit and non-profit alike, publish their papers on online platforms such as arXiv and OpenReview.

They do this so the public can see their work, which makes them part of a large global community of researchers working to advance the field.

Many of these papers describe concepts and techniques that highlight new challenges in machine learning systems, and some even introduce new ways to solve long-standing problems. As a result, many of them make their way into mainstream artificial intelligence conferences such as NeurIPS, ICML, ICLR, and CVPR.

However, AI and other fields concerned with intelligent machine agents are relatively new.

Because many papers lack source code, and because many conferences don't require presenters to provide code to back their claims, attendees and other readers can struggle to reproduce the results.

“I think it is necessary to have reproducible code as a prerequisite in order to independently verify the validity of the results claimed in the paper, but [code alone is] not sufficient,” ContributionSecure14 said.

“Unreproducible work wastes the time and effort of well-meaning researchers, and authors should strive to ensure at least one public implementation of their work exists,” added ContributionSecure14. “Publishing a paper with empirical results in the public domain is pointless if others cannot build off of the paper or use it as a baseline.”

The issue is not limited to small projects or small AI software. Even big companies that spend millions of dollars a year on their own AI research can often fail to validate the results of their own papers.

This has been criticized numerous times in the past. In one case, scientists wrote a joint article in Nature describing the lack of transparency and reproducibility in a paper on the use of AI in medical imaging, published by a group of AI researchers at Google.

“[The] absence of sufficiently documented methods and computer code underlying the study effectively undermines its scientific value. This shortcoming limits the evidence required for others to prospectively validate and clinically implement such technologies,” the authors wrote.

“Scientific progress depends on the ability of independent researchers to scrutinize the results of a research study, to reproduce the study’s main results using its materials, and to build on them in future studies.”

Papers With Code already provides a repository of code implementations for research papers.

Papers Without Code aims to make things easier for researchers and to make the field friendlier to those who genuinely want to learn.

Papers Without Code takes its name from 'Papers With Code', which provides implementations of scientific research papers published and presented at various venues.

“PapersWithCode plays an important role in highlighting papers that are reproducible. However, it does not address the problem of unreproducible papers,” ContributionSecure14 said.
