Taking selfies can be traced back to 1839, when Robert Cornelius produced a daguerreotype of himself.

At the time, creating a self-portrait was difficult because the photographic process was so slow. Regardless, Cornelius is considered an American pioneer of photography, so much so that his "first selfie" graces his tombstone at Laurel Hill Cemetery in Philadelphia, Pennsylvania.

Fast forward to the digital age, and selfies have become so common that it's almost impossible to find anyone active on social media who has never taken or uploaded one.

But for all that time, it was safe to say that selfies were real photos: images of real people, captured from the light reflected off their faces.

That is, until AI stepped in.

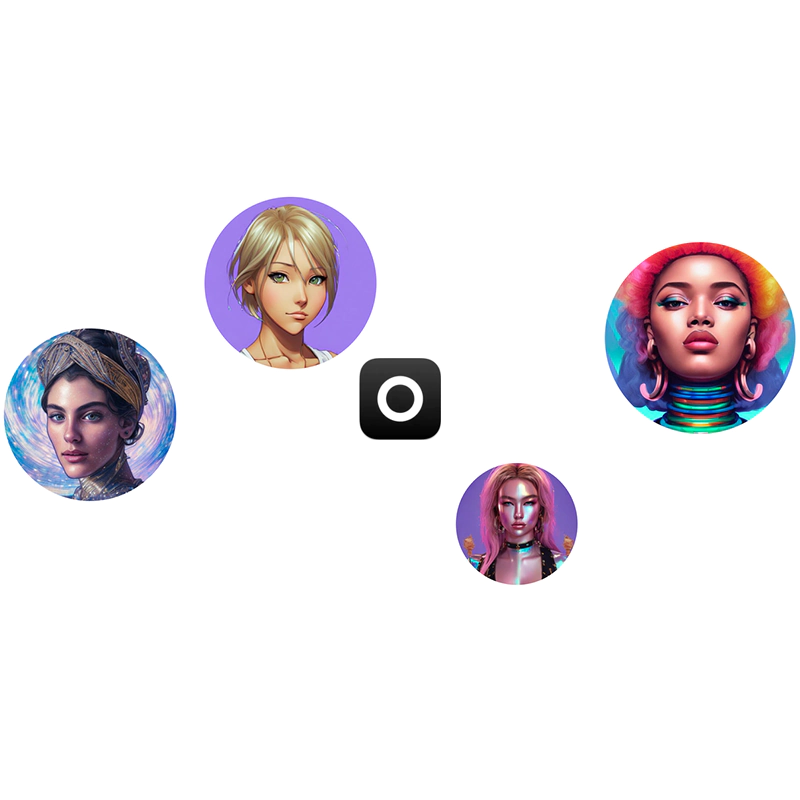

This time, one particular AI app has made the rounds on social media by letting users create illustrated selfies that go beyond imagination.

Lensa AI is behind a flood of unique selfies uploaded to the internet, depicting people as fairy princesses, astronauts, anime characters, and more.

These photos are all AI-generated images: Lensa AI uses machine learning to create stylized portraits based on photos provided by users.

Users create them with the digital editing app's 'Magic Avatars' feature.

The feature essentially uses an open-source neural network model called 'Stable Diffusion' that has been "trained on a sizable set of internet data to generate images from […] small pieces of text describing the desired scene in the output image," according to Prisma Labs, the company behind Lensa.

Basically, Lensa uses the AI to blend users' real selfies with text prompts, rendering images that can be considered art.

The good thing about this feature is that it lets users create interesting selfies that aren't otherwise possible, even with filters. No traditional editing is involved; users simply rely on the AI to do the job.

What's more, it doesn't demand much processing power from the user's device, since all of the training and processing take place off-device, in the cloud.

While several other apps can do similar things using Stable Diffusion or other AI models, Lensa AI arguably marks the first time personalized latent diffusion avatar generation has reached a mass audience in such a viral way.

Lensa has been around since 2018, but its popularity skyrocketed when the app introduced Magic Avatars in late November. The app saw around 13.5 million worldwide installs in the first 12 days of December, more than six times the 2 million it saw in November, according to analytics firm Sensor Tower.

Consumers also spent approximately $29.3 million in the app during that 12-day period, added Sensor Tower.

Read: The 'Stable Diffusion' AI Brings Text-To-Image Generator To The Public, For Free

The thing is, Lensa didn't make headlines only because of its capabilities.

It also drew widespread attention because its AI tends to create sexualized depictions of women.

It started in early December, when women using the app noticed that Lensa's Magic Avatars feature would turn their selfies into semi-pornographic or unintentionally sexualized images.

According to one user, even though the selfies she uploaded showed her fully clothed and were mostly close-ups of her face, the app returned several images with "implied or actual nudity."

Women were depicted with large breasts and cleavage, and sometimes with clearly visible nipples.

Overall, while the results can be cartoonish in color and kind of funny, they also feature skin that is a little too smooth, eyes that are overly large and prominent, and facial features that are a little too symmetrical.

Making things worse, Lensa AI can be made to create NSFW images of recognizable people, opening the door to revenge porn.

The same, however, doesn't really apply to men.

As more women shared similar experiences, the issue quickly caught the media's attention.

This, however, doesn't necessarily mean that Lensa AI is to blame.

The issue stems from how Stable Diffusion generates images: the AI treats different women differently, producing different results depending on how closely their faces resemble photos of a particular actress, celebrity, or model in the Stable Diffusion dataset.

Products built on Stable Diffusion 1.x are reportedly more susceptible to this problem, because those versions have a strong tendency to sexualize their outputs by default.

This behavior stems from the enormous quantity of images used in the training datasets.

Stable Diffusion was trained on a huge number of images, many of them scraped from the internet, and these apparently included sexualized images.

Only with Stable Diffusion 2.x did the developers attempt to rectify this, by removing NSFW material from the training set.

This isn't the first time an AI has shown bias. Time and again, AIs have appeared to favor white men and to lean toward misogyny.

Since men are visual creatures, the internet is full of images of naked women, and most researchers are also male, feeding an AI model unfiltered, man-made data sourced online simply transfers human biases to the artificial intelligence.

In Lensa's case, the issue has been so prevalent that Prisma Labs' Magic Avatars FAQ page has a section titled: "Why do female users tend to get results featuring an over sexualized look?"

The company explained that this happens because the program was trained on "unfiltered internet content," and that Lensa AI "reflects the biases humans incorporate into the images they produce."

What began with deepfakes, which create pornography by superimposing someone's face onto a porn star's body, is now being turned by generative AI into an even worse ethical nightmare.

In response to the widespread criticism, Prisma Labs has reportedly been working to prevent further accidental generation of nude images.

Read: Human-Sourced Biases That Would Trouble The Advancements Of Artificial Intelligence