The web is big, and it's still growing. Google, as the tech giant of the web, feeds on the web's information.

To crawl and index websites and their web pages, Google uses crawlers it collectively calls 'Googlebot'. The crawlers jump from one link to another, sifting through sitemaps, all forms of media and known web technologies.

And following the rise of generative AI, and because Bard requires a lot of data to train, the company has introduced a new form of Googlebot.

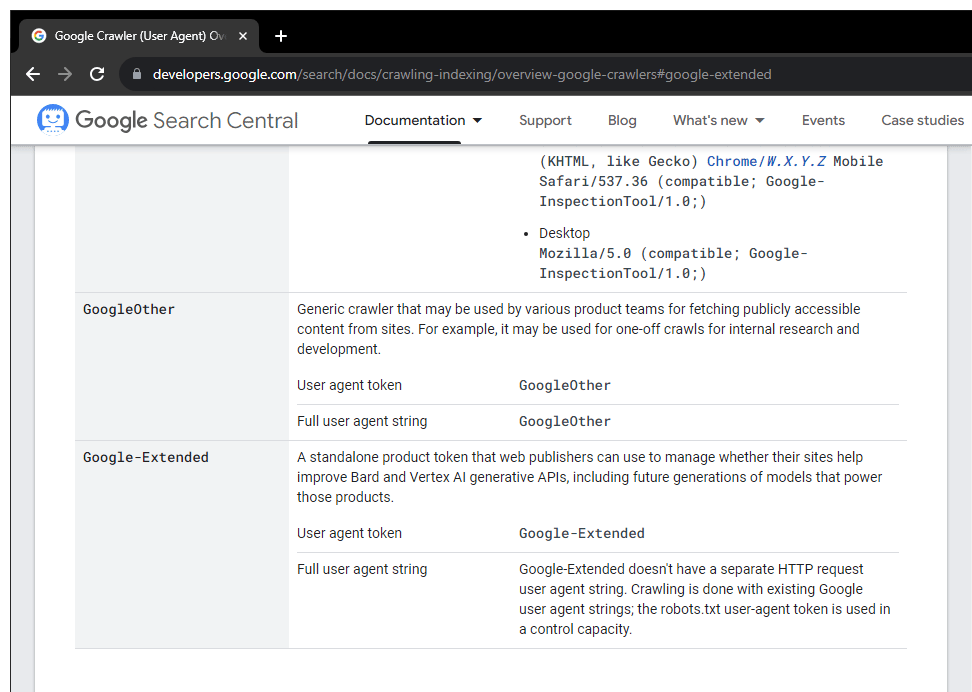

Called 'Google-Extended', it essentially acts as a specialized crawler for collecting data to improve Bard.

And because it's a "standalone product token" with its own name, it also works as a user-agent token, meaning that website owners and webmasters can control its movement inside their property.


According to a Google documentation page, Google-Extended's user agent token is Google-Extended, but it doesn't have a full user agent string because it doesn't use a separate HTTP request user agent string. Crawling is done with Google's existing user agent strings, and the token is used only in a control capacity.

To control this Google-Extended crawler, developers can use the robots.txt file, naming the Google-Extended user agent token in their rules to limit what it can access.
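As a rough illustration, the sketch below uses Python's standard-library `urllib.robotparser` to check how a robots.txt file that blocks Google-Extended would be read. The rules and the example.com URL are hypothetical, and Python's parser is only an approximation of how Google itself interprets robots.txt; Google-Extended is matched here purely as a robots.txt token, not as a request user agent string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block the Google-Extended token site-wide,
# while every other agent (including regular Googlebot) stays unrestricted.
ROBOTS_TXT = """\
User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    url = "https://example.com/articles/some-post"  # hypothetical URL
    for token in ("Google-Extended", "Googlebot"):
        allowed = rules.can_fetch(token, url)
        print(f"{token}: {'allowed' if allowed else 'blocked'}")
```

Run as a script, it prints `Google-Extended: blocked` followed by `Googlebot: allowed`. Because the `Disallow` rule names only Google-Extended, regular Googlebot falls through to the catch-all `*` group and keeps its access.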

The launch of Google-Extended is the result of a public discussion Google initiated back in July, after the company promised to gather "voices from across web publishers, civil society, academia and more fields" to talk about choice and control over web content.

That discussion came after Google said it has the right to use any publicly available information on the web to train its AI.

In a blog post, Google said:

"Today we’re announcing Google-Extended, a new control that web publishers can use to manage whether their sites help improve Bard and Vertex AI generative APIs, including future generations of models that power those products. By using Google-Extended to control access to content on a site, a website administrator can choose whether to help these AI models become more accurate and capable over time."

As the number of crawlers scouring the web for information to feed AI increases, Google warns that "web publishers will face the increasing complexity of managing different uses at scale."

By making its own crawler controllable through the robots.txt file, Google wants to give web publishers the transparency and control "we believe all providers of AI models should make available."

But most importantly, Google is setting an example, showing others that choice and control should be in the hands of web publishers, not of tech companies desperate to find their ever-hungry AIs something to eat.