The launch of GPT-3, the third generation of OpenAI’s Generative Pre-trained Transformer language model, has caught the AI world's attention.

GPT-3 is around 100 times larger than GPT-2, the predecessor that already scared its creators.

Not only is the model the largest of them all, with 175 billion parameters, but it has also shown an impressive ability to outperform existing state-of-the-art models at text prediction and translation.

After OpenAI launched an API that gives researchers and developers access to its AI models, many of them started using it in their projects to see how capable this AI can be.

Developer Manuel Araoz, the co-founder and former CTO of OpenZeppelin, played a practical joke to demonstrate how scary OpenAI's advanced AI language model can be.

He created a bot using GPT-3 and made it write an article about itself.

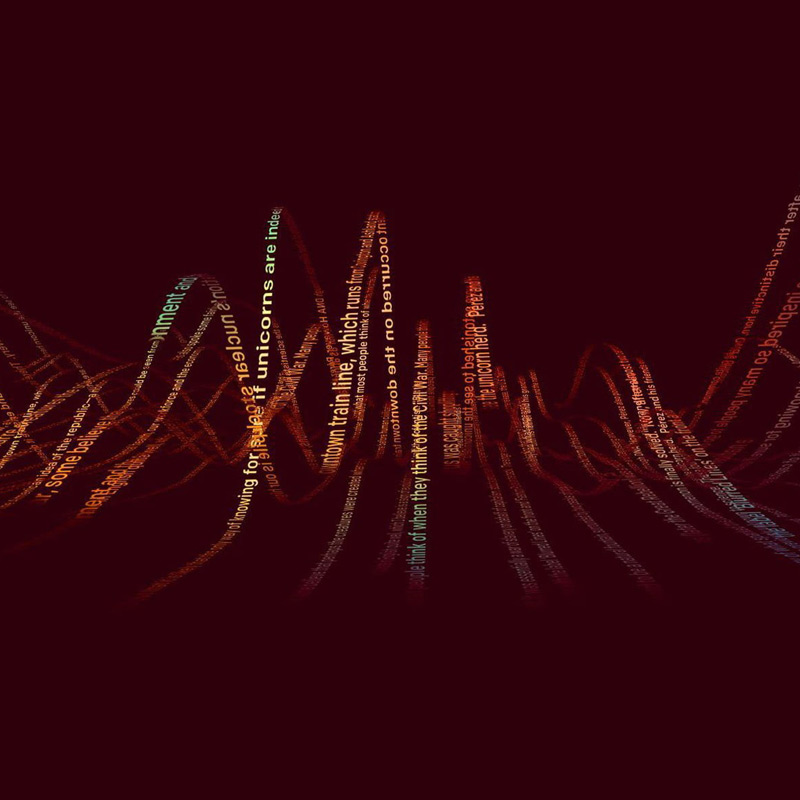

According to a post on Araoz’s blog, the third generation of OpenAI's language prediction model is capable of creating “random-ish sentences of approximately the same length and grammatical structure as those in a given body of text.”

The blog provides practical information regarding how the technology could be used to impersonate famous individuals by mimicking their writing styles.

In one example, Araoz used it to create a fake interview with Albert Einstein. He predicted that GPT-3 could potentially replace journalists, political speechwriters, and even advertising copywriters.

In the end, the sentences managed to persuade the community at bitcointalk.org that the article was 'positive', and drew feedback that concluded “the system must have been intelligent.”

However, Araoz said that he didn't write anything in the blog post.

Araoz admitted that he hasn’t posted anything on the forum for years, and has nothing against its users. GPT-3 wrote the whole thing.

GPT-3 on the dangers of GPT-3

tl;dr: "GPT-3 should be banned" - GPT-3 pic.twitter.com/MTuZnC4zyi— Manuel Araoz (@maraoz) July 20, 2020

What Araoz did was relatively simple.

He started by giving the AI a short biography of himself, added his desired blog title, and created a few tags, which was enough for the bot to start writing.

Araoz unleashed his GPT-3-powered bot and left it on its own to write an original 750-word article reviewing itself.
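The prompt setup described above (a short bio, a title, and a few tags) can be sketched roughly as follows. This is only an illustration: the function name `build_prompt`, the field labels, and the placeholder values are all assumptions, not Araoz's actual prompt, and the sketch stops short of calling any model.

```python
from typing import Sequence


def build_prompt(bio: str, title: str, tags: Sequence[str]) -> str:
    """Assemble a blog-post prompt: bio, title, tags, then an open slot for the body.

    The labels ("Author bio:", "Title:", ...) are hypothetical; the source only
    says that a bio, a title, and a few tags were supplied.
    """
    lines = [
        f"Author bio: {bio.strip()}",
        f"Title: {title.strip()}",
        f"Tags: {', '.join(tag.strip() for tag in tags)}",
        "",
        "Article:",  # the language model would continue the text from here
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder values for illustration only.
    prompt = build_prompt(
        bio="A software developer who blogs about technology.",
        title="A placeholder blog title",
        tags=["ai", "tech"],
    )
    print(prompt)
```

The resulting string would then be sent to a text-completion model, which continues writing after the final "Article:" line.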

“I generated different results a couple (less than 10) times until I felt the writing style somewhat matched my own, and published it,” said Araoz. “I do believe GPT-3 is one of the major technological advancements I’ve seen so far, and I look forward to playing with it a lot more.”

Araoz has shown that AI technology has evolved fast, a lot faster than most people imagine.

Realizing that they had been fooled, people on the forum started expressing astonishment, shock, and fear.

The GPT-3 hype is way too much. It’s impressive (thanks for the nice compliments!) but it still has serious weaknesses and sometimes makes very silly mistakes. AI is going to change the world, but GPT-3 is just a very early glimpse. We have a lot still to figure out.

— Sam Altman (@sama) July 19, 2020

Apart from consuming a massive amount of resources, which costs money and impacts the environment, GPT-3 draws on a huge archive of text scraped from the internet to generate the text it creates.

This poses a serious disinformation threat.

This kind of technology can be used by bad actors to create an endless stream of fake news, spread misinformation, and bury facts under tons of easily made malinformation.

Having said that, such advancements in language models can greatly shape a future where all written material may be generated by computers.

What makes this worse is GPT-3's high-quality text generation, which produces texts that are convincingly human-like and largely undetectable by readers.

This potential misuse is indeed a big concern for the industry, especially during a crisis like the COVID-19 coronavirus pandemic.

This is why the technology has become controversial, and why the authors of the GPT-3 paper warned about its malicious use in spam, phishing, and fraudulent behaviors like deepfakes.

However, it should be noted that such advancement in AI technology shouldn't lead anyone to conclude that AI can completely replace human writers anytime soon.

“A text-only model trained on the Internet (like GPT-3) can't achieve human-level intelligence,” said Araoz. “It lacks visual understanding (e.g. non-verbal communication), complex motor skills or physical expertise, and a survival instinct.”

Sam Altman, the co-founder and CEO of OpenAI, stated that the hype around GPT-3 is overblown and that the model still has limitations to work on to reduce its potential misuse.
