The AI field has developed very fast, and the hype seems to never fade.

OpenAI is one of the big players in the field. The company has proven itself in the industry, for example when it created an AI capable of defeating the world's best Dota 2 players.

And one of the most famous creations to come out of OpenAI is GPT-2.

GPT-2 is an AI capable of producing text rich with context, nuance and even humor.

It’s so good at doing what it's told to do that OpenAI researchers thought it could be misused. In other words, the creators of the AI were scared of it.

GPT-2's popularity was not due to its capabilities alone: the hype surrounding the AI also helped make it a headline-grabbing text generator.

Since OpenAI open-sourced the technology, many researchers have used the AI as the foundation for their projects.

And this time, the company has quietly unveiled its successor, called GPT-3.

GPT-3 is built as the successor to GPT-2, but with a lot more parameters.

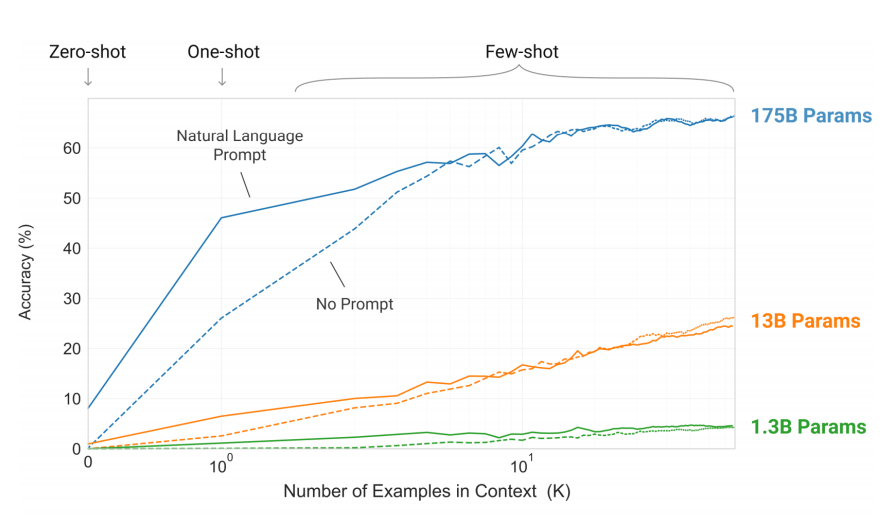

For comparison, GPT-2 was built with 1.5 billion parameters. At the time of its release, this was considered gigantic. GPT-3, on the other hand, was built with 175 billion parameters.

In other words, GPT-3 has more than 100 times as many parameters as GPT-2.

Even compared with the largest Transformer-based language model before it, which Microsoft released earlier this year with 17 billion parameters, GPT-3 is still roughly ten times larger.
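The size comparisons above are simple ratios, and a short sketch makes them easy to check. It uses only the parameter counts quoted in this article; the dictionary keys and the `size_ratio` helper are illustrative names, not anything from OpenAI or Microsoft.

```python
# Parameter counts in billions, as quoted in the article.
# The labels are illustrative; "Microsoft 17B model" is just a placeholder name.
PARAMS_BILLIONS = {
    "GPT-2": 1.5,
    "Microsoft 17B model": 17.0,
    "GPT-3": 175.0,
}


def size_ratio(larger: str, smaller: str) -> float:
    """Return how many times more parameters `larger` has than `smaller`."""
    return PARAMS_BILLIONS[larger] / PARAMS_BILLIONS[smaller]


if __name__ == "__main__":
    for name in ("GPT-2", "Microsoft 17B model"):
        ratio = size_ratio("GPT-3", name)
        print(f"GPT-3 has about {ratio:.1f}x the parameters of {name}")
```

With these figures, the ratio against GPT-2 comes out a little under 117, and the ratio against the 17-billion-parameter model a little over 10.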

Simply put, GPT-3 adopts and scales up the GPT-2 model architecture - including modified initialization, pre-normalization, and reversible tokenization - and shows strong performance on many natural language processing (NLP) tasks and benchmarks in zero-shot, one-shot, and few-shot settings.

This means GPT-3 should be able to perform an impressive range of natural language processing tasks without having to be fine-tuned for each specific job.
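To make the zero-shot, one-shot, and few-shot settings more concrete, here is a minimal sketch of how such prompts might be assembled as plain text. The `build_prompt` helper, the `=>` separator, and the translation examples are assumptions chosen for illustration, and no model is called. The point is only that the three settings differ in how many worked examples are placed in the prompt, while the model itself is not retrained.

```python
from typing import List, Tuple


def build_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a text prompt: task description, k worked examples, then the query.

    Hypothetical helper for illustration. k = 0 gives a zero-shot prompt,
    k = 1 a one-shot prompt, and a handful of examples a few-shot prompt.
    """
    lines = [task]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    # The query is left open so that the model would complete it.
    lines.append(f"{query} =>")
    return "\n".join(lines)


TASK = "Translate English to French:"
DEMOS = [
    ("sea otter", "loutre de mer"),
    ("cheese", "fromage"),
    ("peppermint", "menthe poivrée"),
]
QUERY = "plush giraffe"

zero_shot = build_prompt(TASK, [], QUERY)        # no examples
one_shot = build_prompt(TASK, DEMOS[:1], QUERY)  # one example
few_shot = build_prompt(TASK, DEMOS, QUERY)      # several examples

if __name__ == "__main__":
    print(few_shot)
```

In all three cases the same model would receive only text, so switching tasks means changing the prompt rather than fine-tuning a new copy of the model.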

In fact, GPT-3 is capable of translation, question answering, reading comprehension, poetry writing, and improved general writing, and it can even solve basic math questions.

The research paper, released on May 28, 2020, also dwarfs GPT-2's by a wide margin, growing from 25 to 72 pages.

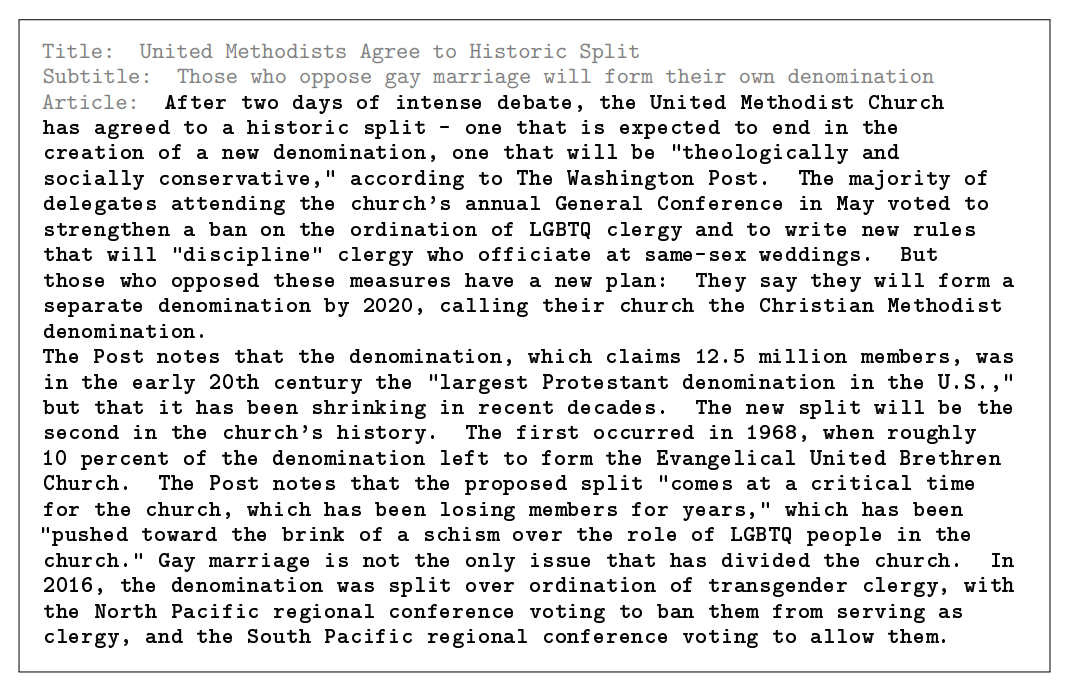

"We find that GPT-3 can generate samples of news articles which human evaluators have difficulty distinguishing from articles written by humans."

GPT-3’s skills led the researchers to issue yet another warning about its potential for misuse:

While the AI packs a punch, it's unlikely that many people will be able to use its capabilities to their full potential.

As in the past, training an AI like this requires a huge amount of computing power. GPT-2 was already known to be resource-hungry, and GPT-3 is even hungrier.

The cost to train this 175-billion-parameter language model can reach $12 million in compute, based on public cloud GPU/TPU cost models. To put that in perspective, that is around 200 times the cost of training GPT-2.
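As a rough back-of-the-envelope check, the two figures above imply a training cost for GPT-2, and they can be set against the growth in parameter count. The sketch below uses only numbers quoted in this article. The implied GPT-2 cost is derived from them rather than independently reported, and the variable and function names are illustrative.

```python
# Figures as quoted in the article; treat them as estimates.
GPT3_TRAINING_COST_USD = 12_000_000
COST_MULTIPLE_OVER_GPT2 = 200
GPT2_PARAMS = 1.5e9
GPT3_PARAMS = 175e9


def implied_gpt2_cost(gpt3_cost: float, multiple: float) -> float:
    """GPT-2 training cost implied by the GPT-3 estimate and the quoted multiple."""
    return gpt3_cost / multiple


gpt2_cost = implied_gpt2_cost(GPT3_TRAINING_COST_USD, COST_MULTIPLE_OVER_GPT2)
param_growth = GPT3_PARAMS / GPT2_PARAMS

if __name__ == "__main__":
    print(f"Implied GPT-2 training cost: ${gpt2_cost:,.0f}")
    print(f"Parameter growth: about {param_growth:.0f}x")
    print(f"Cost growth: about {COST_MULTIPLE_OVER_GPT2}x")
```

On these estimates, the training cost grew faster than the parameter count did.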

For those who are interested, OpenAI has open-sourced the project on GitHub.