Artificial intelligence has come a long way, and OpenAI has some of the most formidable text-generating AI on the market.

First, there is the newer GPT-3. The third generation of OpenAI’s Generative Pretrained Transformer is a general-purpose language algorithm that uses machine learning to translate text, answer questions and write text predictively.

It works by analyzing a sequence of words, text or other data and then expanding on it, drawing on what it learned from billions of examples of words, sentences and paragraphs scraped from every corner of the web.

From that, it can arrange words into new sentences by statistically predicting the order in which they should appear.
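GPT-3 is a large transformer network, not a word-count table, but the core idea of "statistically predicting the order" of words can be illustrated with a toy bigram model. The sketch below is a hypothetical illustration, not OpenAI's method: the function names and the three-sentence corpus are made up for this example. It counts which word most often follows another and then extends a prompt one predicted word at a time.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional

def train_bigram_model(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word that most often followed `word` in training, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    # most_common(1) picks the highest count; ties go to the first word seen.
    return followers.most_common(1)[0][0]

def generate(counts: Dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily extend a one-word prompt, one predicted word at a time."""
    words = [start.lower()]
    for _ in range(max_words):
        next_word = predict_next(counts, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)

corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "a dog sat on the rug",
]
model = train_bigram_model(corpus)
print(generate(model, "the", max_words=4))  # prints "the cat sat on the"
```

A real language model replaces the count table with a neural network that conditions on the whole preceding text rather than just the last word, which is what lets it stay coherent over whole paragraphs.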

GPT-3 is so good at what it does that it can deceive people on almost any topic it is given. The model was initially available only in a private beta, and its capabilities astonished early testers.

What makes GPT-3 so powerful is its sheer scale: the model has 175 billion parameters. That is an order of magnitude more than the second-most powerful language model at the time, Microsoft Corp.’s Turing-NLG, which has just 17 billion parameters.
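As a quick check on the "order of magnitude" comparison, dividing the two parameter counts gives a ratio of roughly ten:

$$
\frac{175 \times 10^{9}}{17 \times 10^{9}} \approx 10.3 \approx 10^{1}
$$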

GPT-3's massive improvement over its predecessor does not mean that OpenAI has abandoned its research on GPT-2.

The algorithm’s predecessor, GPT-2, had already proved controversial because of its ability to create realistic fake news articles from nothing more than an opening sentence.

Even OpenAI's researchers were 'scared' of it.

While most research and development built on GPT-2 has focused on generating additional paragraphs of text, formatted so that they are almost indistinguishable from the original human-written text, researchers at OpenAI have also explored what happens when GPT-2 is fed part of an image.

To do this, the researchers trained the same algorithm on images from ImageNet, one of the most popular image datasets for deep learning.

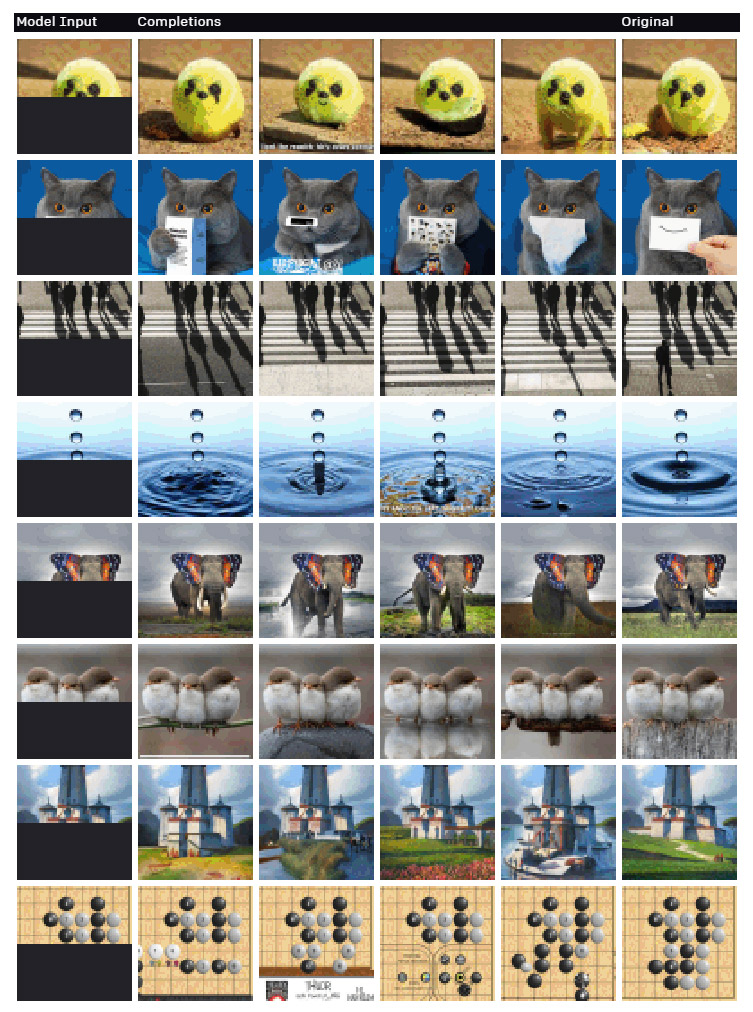

The resulting model, called Image GPT (iGPT), is meant to grasp the two-dimensional structure of images.

Because the algorithm was designed to work with one-dimensional data (strings of text), iGPT essentially unrolls each image into a single sequence of pixels. Given the sequence of pixels for the first half of an image, the algorithm can predict the second half much as a human would.
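The unrolling step can be sketched in a few lines of Python. This is a simplified illustration, not iGPT's actual code: it assumes an image is a small grid of single integer pixel values, all helper names are hypothetical, and `copy_pixel_above` is a trivial placeholder for the trained transformer that would really predict each next pixel. What it does show is the shape of the task: the image becomes one sequence in raster order (row by row), the first half serves as the prompt, and the rest is generated one pixel at a time.

```python
from typing import Callable, List

Image = List[List[int]]

def image_to_sequence(image: Image) -> List[int]:
    """Unroll a 2D grid of pixels into one 1D sequence, row by row (raster order)."""
    return [pixel for row in image for pixel in row]

def sequence_to_image(sequence: List[int], width: int) -> Image:
    """Fold a 1D pixel sequence back into rows of the given width."""
    return [sequence[i:i + width] for i in range(0, len(sequence), width)]

def complete_image(image: Image, predict_next: Callable[[List[int], int], int]) -> Image:
    """Keep the first half of the pixels and generate the rest one pixel at a time."""
    height, width = len(image), len(image[0])
    total = height * width
    sequence = image_to_sequence(image)[: total // 2]  # the "prompt": first half
    while len(sequence) < total:
        # Each new pixel is predicted from every pixel that comes before it.
        sequence.append(predict_next(sequence, width))
    return sequence_to_image(sequence, width)

def copy_pixel_above(sequence: List[int], width: int) -> int:
    """Hypothetical stand-in for the trained model: repeat the pixel directly above."""
    return sequence[-width]

image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [9, 9, 9, 9],  # the bottom half is discarded and regenerated
    [9, 9, 9, 9],
]
print(complete_image(image, copy_pixel_above))
# prints [[0, 1, 2, 3], [4, 5, 6, 7], [4, 5, 6, 7], [4, 5, 6, 7]]
```

Because every pixel becomes one position in the sequence, even a small 32×32 single-channel image already turns into a sequence of 1,024 values, which hints at why this approach is so demanding to train.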

And the results are indeed impressive.

The work, which received an honorable mention in the best paper awards at the International Conference on Machine Learning (ICML), opens up a new avenue for image generation.

All of this comes with massive opportunities and consequences.

OpenAI's algorithm demonstrates a new opportunity for unsupervised learning. Because the training images need no human-written labels, machines could be trained to better understand the visual world we all live in with minimal effort, and become smarter as a result.
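The reason no labeling is needed is that the training signal comes from the data itself: the model learns by predicting each pixel from the pixels before it. A minimal sketch, assuming an image has already been unrolled into a pixel sequence and using a hypothetical helper name, of how one unlabeled sequence yields many (context, target) training examples:

```python
from typing import List, Tuple

def next_pixel_training_pairs(sequence: List[int]) -> List[Tuple[List[int], int]]:
    """Turn one unlabeled pixel sequence into (context, target) training examples.

    The target at each position is simply the next pixel of the same image,
    so no human annotation is involved.
    """
    return [(sequence[:i], sequence[i]) for i in range(1, len(sequence))]

print(next_pixel_training_pairs([7, 3, 5]))  # prints [([7], 3), ([7, 3], 5)]
```

A sequence of n pixels yields n − 1 examples, so every image in a large unlabeled collection becomes a rich source of training data.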

With iGPT's success, OpenAI is a step closer to its ultimate ambition of achieving more generalizable machine intelligence.

But this also brings consequences: the method offers a concerning (and easier) way to create deepfake imagery.

Whereas earlier deepfake algorithms needed to be trained on highly curated data, iGPT can simply learn enough about the visual world from millions, if not billions, of examples to create convincing fakes.

The only barrier here is the price. Training such an algorithm requires massive computational power, making the whole process very expensive.
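To give a sense of the scale involved, a commonly cited rule of thumb estimates training compute as roughly 6 floating-point operations per model parameter per training token. This is an approximation, not OpenAI's own accounting, and the article gives no figures for iGPT, so the example below plugs in GPT-3's published scale (175 billion parameters, trained on roughly 300 billion tokens) as an assumption.

```python
def estimate_training_flops(num_parameters: float, num_training_tokens: float) -> float:
    """Rough rule of thumb: ~6 FLOPs per parameter per training token
    (about 2 for the forward pass and 4 for the backward pass)."""
    return 6.0 * num_parameters * num_training_tokens

# Assumed inputs: GPT-3's 175 billion parameters and ~300 billion training tokens.
gpt3_flops = estimate_training_flops(175e9, 300e9)
print(f"{gpt3_flops:.2e} FLOPs")  # prints 3.15e+23 FLOPs
```

Numbers of this size are far beyond what a single machine can process in reasonable time, which is why training runs like these require large clusters of accelerators and become so expensive.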