As smartphones become more important in their users' daily lives, manufacturers are trying to go beyond what is strictly necessary.

Manufacturers have worked hard to add better hardware and features to improve the quality of their products.

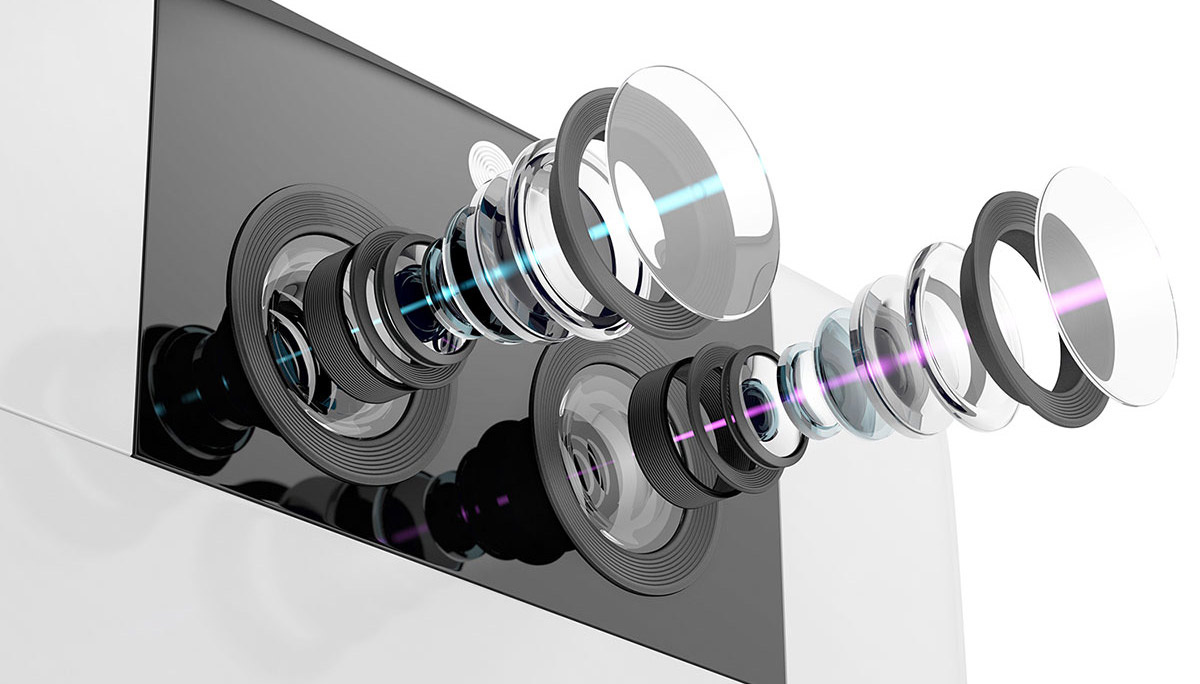

One of these improvements is a better camera that captures higher-quality photos.

Before manufacturers raced to put more and more cameras on their products, they tried to improve image capture while sticking to a single-lens camera, for example by making the lens bigger or adding optical zoom.

This improved the products, but with some drawbacks.

For example, smartphones with those kinds of cameras were bulkier and much heavier.

To make thinner devices that appealed to customers, manufacturers had to shrink the individual camera module, which was difficult at the time. As a result, manufacturers made phones larger, adding a little more width and height to reduce that bulky feeling.

In 2011, the first phones with dual rear cameras were released, but they failed to gain traction. Originally, dual rear cameras were implemented to capture 3D content, which electronics manufacturers were trying to market at the time.

Years later, Apple's iPhone 7 series popularized the concept of having more than one camera.

But multiple cameras also presented manufacturers with challenges they had never faced before:

- Making the cameras work properly, with software to manage them all.

- Choosing which types of cameras to use.

- Tweaking the hardware and software to improve performance and image- and video-capturing abilities.

- Synchronizing the cameras to improve the experience and quality.

- Dealing with the increasing bulk.

- Managing production costs and the increased demand for processing power.

- And more.

Multiple cameras make an array of new features possible: longer zoom, better HDR, portrait modes, 3D and augmented reality features, low-light photography, and more.

Until the introduction and market success of the dual-camera iPhone 7 Plus, zooming in on a smartphone was almost always entirely digital. This was because fitting a moving zoom lens inside a thin smartphone is not an easy task.

So instead of using a moving zoom lens, smartphone manufacturers, taking cues from Apple, moved to a dual-lens-and-sensor design for their rear camera. This way, they could have one main camera for general purposes and one telephoto camera designed for zooming.

The most obvious advantage of having a dedicated telephoto camera module is for capturing better images at long focal lengths.

This provides a better result than cropping and scaling the image from the main camera.
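To make "cropping and scaling" concrete, here is a minimal sketch of how much real sensor data survives digital zoom. It assumes a hypothetical 12MP main sensor of 4000 x 3000 pixels; the function names are illustrative, not from any phone's software.

```python
def digital_zoom_crop(width: int, height: int, zoom: float) -> tuple[int, int]:
    """Return the size of the centre crop that digital zoom keeps from a frame.

    At zoom factor `zoom`, only the central 1/zoom of the frame is kept in each
    dimension; the crop is then upscaled back to full size, adding no new detail.
    """
    if zoom < 1:
        raise ValueError("zoom factor must be >= 1")
    return int(width / zoom), int(height / zoom)


def effective_megapixels(width: int, height: int, zoom: float) -> float:
    """Megapixels of real sensor data left after digital zoom."""
    crop_w, crop_h = digital_zoom_crop(width, height, zoom)
    return crop_w * crop_h / 1_000_000


# Hypothetical 12MP sensor (4000 x 3000) zoomed 2x digitally
print(digital_zoom_crop(4000, 3000, 2))     # (2000, 1500)
print(effective_megapixels(4000, 3000, 2))  # 3.0
```

Because detail scales with area, a 2x digital zoom keeps only a quarter of the pixels. A dedicated 2x telephoto module, by contrast, fills its whole sensor with the zoomed view.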

By pairing those cameras with software, manufacturers can create even better results at non-native focal lengths. But again, this presents some unique image-processing challenges.

For example, the preview image displayed to users is taken from one camera module or the other. But before that can happen, the phone needs to switch smoothly between the two sensors as the user zooms in or out. This requires the phone to adjust things like exposure, white balance, and focus distance.
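The switching decision itself can be sketched in a few lines. This is a simplified illustration, assuming a hypothetical phone with a 1x main camera and a 2x telephoto: it picks the module with the longest native focal length that does not overshoot the requested zoom, then crops digitally for the remainder. It does not model the exposure, white-balance, or focus matching the paragraph above describes, and the `CameraModule` type and `select_camera` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class CameraModule:
    name: str
    native_zoom: float  # zoom factor relative to the main camera (main = 1.0)


def select_camera(modules: list[CameraModule], zoom: float) -> tuple[CameraModule, float]:
    """Pick the module for a requested zoom and the digital crop still needed.

    Chooses the module with the longest native focal length that does not exceed
    the requested zoom, so the remaining zoom is always a crop factor >= 1.
    """
    candidates = [m for m in modules if m.native_zoom <= zoom]
    if not candidates:
        raise ValueError("requested zoom is wider than every module")
    best = max(candidates, key=lambda m: m.native_zoom)
    return best, zoom / best.native_zoom


modules = [CameraModule("main", 1.0), CameraModule("telephoto", 2.0)]
cam, crop = select_camera(modules, 3.0)
print(cam.name, crop)  # telephoto 1.5
```

Real camera apps typically add hysteresis and other conditions (for example, staying on the main camera in low light), but the basic idea is the same: the native module covers part of the zoom and software covers the rest.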

And because the two modules are slightly offset from each other, the preview needs to be adjusted so the images are realigned, minimizing the shift in the image when the phone switches from one camera module to the other. Even so, the results are not as seamless as using a dedicated zoom lens on a standalone camera.

To push zoom further without making phones thicker, manufacturers moved on to the next step: folded optics.

This approach uses a camera design that bends light so the optical path can be much longer than the module is thick. A prism or mirror placed just behind the lens cover bends incoming light by 90 degrees, sending it sideways through the lenses toward the sensor.

So instead of cramming a stack of lenses and the CMOS sensor into the phone's thin vertical space, the folded optics design lays them out along the length of the phone, where there is far more room.

This works much like a periscope, which is why the setup is also called a periscope design. It provides the best of both worlds: a long focal length in a thin phone.

Folded optics is a way to overcome the focal length limit previously imposed by the phone's thickness.

Solving one problem didn't mean that manufacturers stopped there. Not long after manufacturers started putting more than one camera on their phones, some started experimenting with the exact opposite: zooming out.

They did this by adding another kind of camera: the wide-angle camera.

While not as much in demand as telephoto capability, the ability to shoot wide-angle photos was also missed by photographers moving from standalone cameras. One of the pioneers in putting a wide-angle lens on a smartphone was LG.

Better telephoto and wide-angle images are only the beginning of what smartphone makers have accomplished with the addition of a second camera module.

And going forward, manufacturers started thinking even beyond that.

Multiple camera modules have made it possible for them to tweak photos even further. Largely thanks to the increasing processing power inside smartphones, manufacturers can reduce facial distortion when shooting portraits, remove some unpleasant artifacts from photos, and more.

And although the offset between multiple cameras has its own disadvantages, manufacturers realized that the same offset lets those cameras estimate the depth of objects in a scene.

The process starts by measuring how far an object's position shifts between the images from the two cameras, an effect called parallax.

An object close to the cameras appears at noticeably different positions in the two images, while a distant object appears at nearly the same position in both.
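The relationship between that shift (the disparity) and distance can be sketched with the standard pinhole stereo formula, depth = focal length x baseline / disparity. This assumes the two images have already been rectified so the shift is purely horizontal and both share the same focal length in pixels; real phone cameras usually have different lenses and must be calibrated first. The focal length and baseline below are hypothetical example values, and finding which pixels correspond between the two images is not shown.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Estimate the distance to a point from its disparity between two rectified cameras.

    Pinhole stereo model: depth = focal_length * baseline / disparity.
    A larger disparity (bigger shift between the two images) means a closer object.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical setup: 3000 px focal length, cameras 1 cm apart
print(depth_from_disparity(60, 3000, 0.01))  # 0.5 (metres): large shift, close object
print(depth_from_disparity(6, 3000, 0.01))   # 5.0 (metres): small shift, distant object
```

The formula also shows why phone depth estimates degrade with distance: with a baseline of only a centimetre or so, far-away objects produce disparities of just a few pixels, so small matching errors cause large depth errors.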

Adding the ability to estimate the depth of objects in a scene allowed manufacturers to introduce specialized Portrait modes on multi-camera phones that keep the subject sharp while giving the background a pleasing blur. These modes mimic 'bokeh', the natural blurring of out-of-focus areas produced by wide-aperture optics, an effect that is difficult to replicate computationally.

Standalone cameras with wide-aperture lenses produce this blur naturally because of the way their optics work. Phones, with their much smaller lenses and sensors, require in-camera post-processing of the images to achieve a similar effect.
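A heavily simplified sketch of that post-processing: given a depth map (such as one estimated from parallax), keep pixels near the subject's depth sharp and replace everything else with a blurred copy. It assumes a single-channel grayscale image stored as nested lists and uses a plain box blur; actual portrait modes are far more sophisticated (blur strength that grows with distance from the focal plane, disc-shaped kernels, subject segmentation). The function names and the `tolerance` parameter are hypothetical.

```python
def box_blur(image: list[list[float]], radius: int) -> list[list[float]]:
    """Average each pixel with its neighbours within `radius` (window clamped at the edges)."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def synthetic_bokeh(
    image: list[list[float]],
    depth: list[list[float]],
    subject_depth: float,
    tolerance: float,
    radius: int = 2,
) -> list[list[float]]:
    """Keep pixels within `tolerance` of the subject's depth sharp; blur all others."""
    blurred = box_blur(image, radius)
    return [
        [
            image[y][x] if abs(depth[y][x] - subject_depth) <= tolerance else blurred[y][x]
            for x in range(len(image[0]))
        ]
        for y in range(len(image))
    ]


# A bright "subject" pixel at 1 m in front of a dark background at 5 m
image = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
depth = [[5.0, 5.0, 5.0], [5.0, 1.0, 5.0], [5.0, 5.0, 5.0]]
result = synthetic_bokeh(image, depth, subject_depth=1.0, tolerance=0.5, radius=1)
```

In `result`, the subject pixel keeps its value of 9, while the background pixels pick up a soft glow from it (2.25 in the corners, 1.5 along the edges), which is the "spread" that makes a blurred background look out of focus.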


Knowing that offset cameras can capture depth information, manufacturers started using them to power augmented reality. This is done by creating a depth map of a scene, which makes it possible for smartphones to, for example, display synthetic objects on surfaces in the scene.

If done in real time, the objects can move around and seem to come alive.

Both Apple, with ARKit, and Google, with ARCore for Android, have provided AR platforms for phones with multiple cameras.

And the technology isn't stopping there, as smartphone manufacturers have found ways to fit even more cameras. Some brands have pushed the number of cameras on their smartphones to four and beyond, which is ill-advised.

But here is the thing: more cameras do not guarantee better pictures.

Manufacturers know this. But brands choose to add more cameras simply because it is a cheap marketing trick for their promotional materials. Clearly, most brands use multiple-camera setups to ramp up their phones' specifications rather than to deliver better image quality.

Quantity Is Not Equal To Quality

When it comes to cameras, quality is more important than quantity. Still, if image quality is not the only priority, more cameras do create a whole range of possibilities.

The most commonly used camera types are the main camera, the depth sensor, the telephoto camera, and the wide-angle camera. Each specializes in what it does, and when working together, they are meant to capture images far beyond what any of them can do individually.

However, the different camera types have almost nothing to do with the actual picture quality when shooting a standard photo, which is what people use the most.

In other words, the presence of multiple cameras doesn't magically add up to a better overall photo. In fact, the biggest contributing factors to image quality are the main camera's hardware and a brand's image processing software.

Whether it’s the introduction of a better main image sensor, optical image stabilization to reduce blur, or better camera software, all of these additions actually have an impact on overall picture quality.

Still, there are times when extra cameras are worth having on a phone, either because they offer a wildly different perspective or because they improve overall image quality.

Ultra-wide cameras, for example, can take pictures that are wider than those from a typical camera. This is ideal for landscapes, group photos, or other situations where users want to cram as much as possible into one frame. Many ultra-wide cameras can also take macro shots.

Zoom cameras, such as periscope and other telephoto cameras, are another truly handy addition.

These cameras offer much better zoom quality than phones relying on digital zoom alone.

Conclusion

As smartphone makers compete on photographic capabilities, they are extending the power of their cameras into previously unexplored territory.

When it comes to cameras, quality comes less from having more cameras and more from the hardware that powers them, as well as the software that processes the captured images.

From Samsung introducing the first 100-plus-megapixel smartphone camera sensor, to manufacturers improving images with pixel binning, to the arrival of Time-of-Flight and LiDAR sensors on smartphones, manufacturers will keep watching what the competition is doing to see whether it can benefit them as well.

The end goal is to please users. After all, happy users mean good business.

With Android phone makers and Apple borrowing ideas from each other, more camera modules will certainly be introduced, in new types, greater numbers, or both.
