Tech companies continuously improve their offerings. With the smartphone market worth billions of dollars, the only way to thrive is to keep introducing new tech.

Inside all mobile devices, there are multiple sensors that can work independently or in tandem with other sensors to accomplish certain tasks.

For example, the camera can work with the GPS sensor to record where you are when you take a photo. Then there is the accelerometer, which works with the gyroscope: the accelerometer measures linear acceleration (directional movement), while the gyroscope measures angular velocity (rotation), and together they track the device's tilt and orientation at the same time.
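To illustrate how two sensors can be combined, here is a minimal sketch of a complementary filter, one common textbook way to fuse gyroscope and accelerometer readings into a tilt estimate. It is not how any particular phone vendor does it; the function names, the `(gyro_rate, ay, az)` sample format, and the 0.98 weighting are illustrative assumptions.

```python
import math


def accel_tilt_deg(ay: float, az: float) -> float:
    """Tilt (roll) angle in degrees implied by gravity as seen by the accelerometer."""
    return math.degrees(math.atan2(ay, az))


def complementary_filter(samples, dt: float, alpha: float = 0.98):
    """Fuse gyroscope rate (deg/s) with accelerometer tilt (hypothetical helper).

    samples: iterable of (gyro_rate_deg_s, ay, az) tuples (assumed format).
    The gyroscope is integrated for smooth short-term tracking, while the
    accelerometer slowly pulls the estimate back toward the gravity-based
    angle so that gyroscope drift does not accumulate.
    Returns None if no samples are given.
    """
    angle = None
    for gyro_rate, ay, az in samples:
        accel_angle = accel_tilt_deg(ay, az)
        if angle is None:
            angle = accel_angle  # initialise from the accelerometer reading
            continue
        angle = alpha * (angle + gyro_rate * dt) + (1 - alpha) * accel_angle
    return angle
```

For example, a gyroscope with a constant 1 deg/s bias, integrated alone for 10 seconds, would report a 10° tilt on a phone lying flat; with the accelerometer correcting it, the estimate stays under half a degree.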

As tech continues to evolve, smartphones have gained what are called time-of-flight sensors, or simply 'ToF'.

One of the very first Android phones equipped with a ToF sensor was the Oppo RX17 Pro, which was introduced back in August 2018.

Besides its dual-aperture 12MP camera and a 20MP f/2.6 camera for 2X zoom, the smartphone has a third camera: a ToF sensor capable of sensing 3D depth. Since 2018, other manufacturers have started implementing their own ToF sensors to compete in the high-end smartphone market.

ToF For Sensing Depth

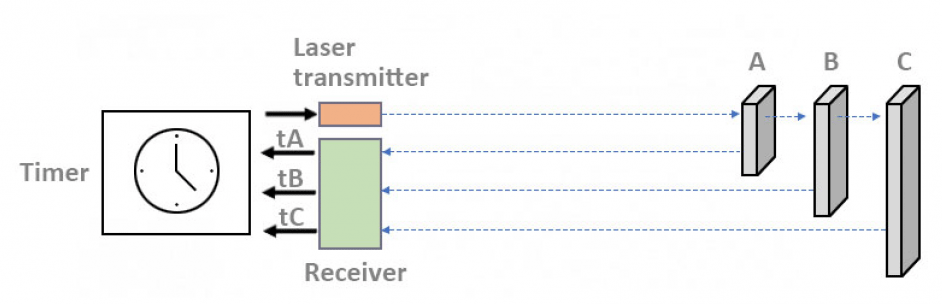

A ToF sensor is simply a range imaging camera system that uses time-of-flight techniques to determine the distance between the sensor and the subject for each point of the image. It does this by measuring the round-trip time of an artificial light signal emitted by a laser or an LED.
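The round-trip measurement translates into distance with simple arithmetic: the light travels to the subject and back, so the distance is half of the speed of light multiplied by the elapsed time. Below is a minimal sketch of that conversion for a single measurement and for a small grid of pixels; the function names are hypothetical, and a real sensor also has to deal with noise, ambient light, and calibration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the subject from the light's round-trip time (hypothetical helper).

    The light covers the sensor-to-subject distance twice, so the one-way
    distance is (speed of light * elapsed time) / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


def depth_map(round_trip_times):
    """Apply the conversion to every pixel of a 2D grid of round-trip times."""
    return [[distance_from_round_trip(t) for t in row] for row in round_trip_times]
```

A round trip of 10 nanoseconds, for instance, corresponds to a subject about 1.5 meters away.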

This kind of sensor has been used in civil applications since around the year 2000.

The technology became popular when people started using it, in the form of LiDAR, to collect high-density data for producing high-resolution maps. LiDAR sensors mounted under drones can capture readings of terrain, transmission lines, buildings, and trees, and the resulting 3D models are more accurate than those produced by terrestrial sampling methods.

Smartphones, however, are compact, and their ToF sensors differ from other 3D depth-scanning technologies: the ToF hardware used in phones is relatively cheap and small. Even so, it can reach up to 160fps, which makes it suitable for real-time applications such as blurring the background in live video.

On smartphones, the ToF sensor also requires less processing power and is easier on the battery.

In general, ToF sensors inside smartphones can do the following:

- Enable object scanning, indoor navigation, and gesture recognition.

- Assist existing camera sensors with 3D imaging, and improve AR experiences.

- Improve photography, particularly in portrait mode, and improve photos in low-light situations by helping the camera focus in darkness.

To make this happen, a ToF sensor consists of the following components:

- Illumination unit: This illuminates the scene. It can use a modulated light source (LEDs or laser diodes, paired with phase-detecting imagers) or a single-pulse illuminator.

- Optics: A lens gathers the light reflected from the scene. An optical band-pass filter only lets through light with the same wavelength as the illumination unit, to reduce noise.

- Image sensor: Each pixel measures the light that traveled from the illumination unit to the scene and back to the optics, so every pixel records its own distance data.

- Driver electronics: These control and synchronize the high-speed signals. To deliver real-time, high-resolution data, the driver electronics must time these signals down to picoseconds.

- Computation/Interface: Algorithms calculate the distance and generate the results.
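For the modulated-light approach mentioned under the illumination unit, the distance is not timed directly; it is inferred from the phase shift between the emitted and returning light. Below is a minimal sketch of the widely used "four-phase" demodulation, assuming each pixel takes four samples of the form `offset + amp * cos(phase + theta)` at theta = 0°, 90°, 180° and 270°. The sample model, the 20 MHz modulation frequency, and all function names are illustrative assumptions rather than any vendor's implementation.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def phase_from_samples(a0, a90, a180, a270):
    """Recover the phase shift of the returning light from four samples.

    Assumes a_k = offset + amp * cos(phase + theta_k), theta_k = 0/90/180/270 deg
    (a hypothetical sample model). The offset, e.g. ambient light, cancels
    out in the differences.
    """
    phase = math.atan2(a270 - a90, a0 - a180)
    return phase % (2 * math.pi)  # wrap into [0, 2*pi)


def distance_from_phase(phase, mod_freq_hz):
    """Convert phase shift to distance: d = c * phase / (4 * pi * f)."""
    return SPEED_OF_LIGHT_M_PER_S * phase / (4 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Beyond c / (2f) the phase wraps around, so farther objects alias to nearer ones."""
    return SPEED_OF_LIGHT_M_PER_S / (2 * mod_freq_hz)


def simulate_samples(distance_m, mod_freq_hz, amp=1.0, offset=0.5):
    """Generate ideal, noise-free samples for a target at distance_m (for testing only)."""
    phase = 4 * math.pi * mod_freq_hz * distance_m / SPEED_OF_LIGHT_M_PER_S
    return [offset + amp * math.cos(phase + math.radians(t)) for t in (0, 90, 180, 270)]


f = 20e6  # assumed modulation frequency
print(distance_from_phase(phase_from_samples(*simulate_samples(2.0, f)), f))
```

The same arithmetic shows why the driver electronics need such fine timing: because the light covers the distance twice, one picosecond of round-trip time corresponds to only about 0.15 mm of distance.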

Working together seamlessly, these components can make ToF a huge helping hand to existing camera sensors.

The resulting images and videos should "theoretically" improve. Theoretically, because the process still requires software magic, and in the end, it's up to each vendor to decide how to apply the data that the ToF camera collects.

Apple With ToF: Coming A Bit Late

With high-end Android phones already equipped with ToF sensors, Apple fans expected ToF when the company was about to introduce the iPhone 11 series back in 2019. Unfortunately, those smartphones did not come with ToF; Apple instead focused on adding a third camera to the Pro models.

So when Apple announced the fourth-generation iPad Pro in 2020, many were surprised that the company had finally embedded its own ToF sensor.

But unlike its Android counterparts, Apple went a step further with its ToF by using a LiDAR (Light Detection and Ranging) sensor.

The main difference is that Apple's first step onto the ToF bandwagon scans the entire scene point by point with a laser beam, rather than flooding it with light from a laser or a pulsed LED.

The 2020 iPad Pro's LiDAR sensor is still a ToF sensor, because it also uses light to measure distance.

But since it illuminates its target point by point with a laser beam, its LiDAR 'ToF' sensor can measure objects up to 5 meters away, whereas 'regular' ToF sensors only operate within a distance of around two meters.
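To put these ranges in perspective, the round-trip time of the light follows from $t = 2d/c$. The worked arithmetic below is added for illustration and uses $c \approx 2.998 \times 10^{8}$ m/s; it shows why the timing involved is measured in nanoseconds:

$$
t_{2\,\mathrm{m}} = \frac{2 \times 2\ \mathrm{m}}{2.998 \times 10^{8}\ \mathrm{m/s}} \approx 13.3\ \mathrm{ns},
\qquad
t_{5\,\mathrm{m}} = \frac{2 \times 5\ \mathrm{m}}{2.998 \times 10^{8}\ \mathrm{m/s}} \approx 33.4\ \mathrm{ns}
$$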

Meanwhile, Apple also claims that its LiDAR system can operate “at the photon level at nano-second speeds.”

The Cupertino-based company believes that its LiDAR sensor can immensely benefit Augmented Reality (AR) experiences. AR is the technology that overlays information and virtual objects on real-world scenes in real time.

Apple has been pushing the use of AR on the iPad and iPhone for quite some time. With ARKit, for example, which the company introduced back in 2017, Apple has given developers a way to create AR apps. And with LiDAR now available, Apple should be able to take this a step further.

Starting with the 2020 iPad Pro, Apple is giving AR a higher priority. This is also a way to compete in a market where ToF has already become common hardware on smartphones, and to further distance Apple from Google, which failed to bring AR to smartphones with its Project Tango.

Although Google pushed the technology earlier than most, the hardware for Project Tango was only found in a limited number of smartphones. Google ultimately realized that it made much more sense to bring AR apps to the phones people already own, rather than requiring them to purchase a specific smartphone to get those capabilities.

This is why, in 2018, Google transitioned from Project Tango to ARCore, a toolset that lets developers bring AR apps to existing Android phones without specific hardware.

Apple's strategy is quite different from that of Google or other Android vendors. Rather than pushing new hardware to its limits in order to sell it at a high price, Apple moved a bit more slowly.

This is typical of Apple's mobile devices, which often seem to lag behind "cheaper" Android phones in some technologies. But Apple uses the wait to mature and polish the technology before bringing it to its products. In the case of LiDAR, Apple waited until AR apps had made their way to the iPhone through ARKit before launching more specialized hardware for AR.

This strategy gives developers a few years to learn how to create apps with Apple's existing software before providing them with the hardware to do the job.